Smudging the invisible ink – image steganography disruption with a focus on cover image degradation minimisation

Author: Connor Morley (Principal Malware Security Researcher)

Summary

Steganography, the art of hiding data within other data, is a long-standing security issue which is constantly evolving both in academic circles and among malicious architects. Image Steganography (IS) is a branch of steganography which specifically targets formats commonly used on almost all platforms for image rendering and storage, making IS a widespread problem that is very difficult to defend against without adverse effects. Common methods for mitigating IS typically affect the original image in a way that degrades it significantly or potentially allows the recreation of the hidden channel.

Digital IS is often employed for malicious communications to achieve objectives such as exfiltration of sensitive information, IP theft, espionage and even infiltration of malicious elements (TA558 – April 2024). IS is a concern which has been constantly addressed by the cybersecurity industry over the years with various mitigation and detection strategies devised. However, the balance between mitigation and usability is something which routinely leads to a compromise which typically does not satisfy either concern completely. Understanding the ways IS can operate as well as the mitigation strategies available and their disruptive impact can be important to developing any potential solution to be universally applied.

This paper is split into three sections. The first explains what exactly IS is, how it operates and some of the variants of IS that are commonly used in current systems. The second explains the research we conducted to try to effect a high level of mitigation, with a core focus on minimizing any degradation to the original image, and explores statistical analysis and targeted solutions in detail. The final section elaborates on solutions to this issue, the statistical fidelity of their use and how we have paired this with CDR technology to effect a domain-wide mitigation strategy for IS.

Image steganography’s inner workings

How do images work in digital space?

IS can target the image components of formats that support image data such as PNG, BMP and JPEG. Steganography can be applied to almost any data structure such as text, audio, video and even program code itself. With IS the focus is solely on the components which comprise the visual representation of the subject and disregards all other elements such as metadata.

First, it’s important that we understand how images are displayed to a human user on any display device. Displays are made up of pixels which, in most modern monitors, are made up of subpixels made of LEDs which control the red, green, and blue light emitted. The most common colour depth is 1 byte (8 bits) per channel, meaning that each colour has an intensity range of 0 – 255 with 255 meaning full intensity. Via colour theory, we can alter the intensity of these three base colours individually so that the emitted light blends to create any colour we desire.

If you have an image which has the dimensions 2,544 x 3,392, that image is comprised of 8,629,248 pixels. Assuming the image uses standard RGB channel encoding, the image data takes up 8,629,248 * 3 = 25,887,744 bytes, or 25.89 MB, in raw pixel data. It’s important to know that image formats typically do not store images as raw pixel data; instead, they compress them in some manner to make them easier to transmit and store. The way in which an image is formatted has a direct impact on the IS methods available for that format, which is something we will cover later.

What is the Human Visual System (HVS)?

The HVS can refer to the amalgamation of components which make up the visual processing that occurs within humans. HVS can be determined by an individual’s capabilities in processing visual information. This considers factors such as light perception (cones and rods) in the eye, the optical nerve bandwidth, and the cognitive processing speed of an individual as well as several other factors. As such, HVS can refer to the capacity for a person to perceive an image and its details.

The HVS has some known limitations which are actively targeted when steganography methods are devised. The three primary flaws in the HVS are poor perception of colour differences, poor perception of image edge abnormalities and poor perception of fine detail degradation.

Because the HVS depends on an individual’s visual capacity, it can be used to determine whether alterations in images are perceptible, but the result is subjective. Using the HVS as a measure may, in some cases, lead different observers to different statistical conclusions, so some limited deviation can occur.

Least Significant Bit (LSB) steganography – A basic version of IS

One of the simplest methods to achieve IS, LSB steganography operates by manipulating the LSBs of colour channels in an image to hide the data stream. Raw pixel data is made up of the red, green and blue channels, each of which is 1 byte corresponding to a value between 0 and 255. So, for the shade of green below the values are: Red = 50, Green = 168, Blue = 52:

Image 1 – Colour RGB (50, 168, 52)

This means that a pixel with this colour has the raw pixel channel values in binary of:

| Colour | Binary Value |
|---|---|
| Red | 0011 0010 |
| Green | 1010 1000 |
| Blue | 0011 0100 |

Table 1 – RGB colour channel values in binary for green (Image 1)

Now let’s say I want to hide the letter “A” in the image. “A” in ASCII has a hex value of 0x41 or in binary:

| Character | Binary Value |
|---|---|
| "A" | 0100 0001 |

Table 2 – ASCII letter “A” in binary format

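LSB steganography will take the binary value of “A” and encode it into the LSBs of the colour channels of the pixels in the image. The LSB of a binary value is the bit on the far right-hand side. With some simple arithmetic, we can determine the maximum capacity of an LSB steganography data stream in an RGB image, in bytes, with the following formula, where W and H are the image width and height in pixels and each of the 3 colour channels contributes 1 bit:

$$C_{\text{bytes}} = \left\lfloor \frac{W \times H \times 3}{8} \right\rfloor$$

Equation 1 – LSB hidden data capacity in bytes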

Therefore, if an image had a pixel area of 42 x 42 pixels it would have a hidden data capacity of 661 bytes (1,764 pixels x 3 bits = 5,292 bits, or 661 whole bytes).
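The capacity calculation above can be sketched in a few lines of Python. The function name and its `channels` and `bits_per_channel` parameters are illustrative assumptions rather than part of any tool discussed in this paper.

```python
def lsb_capacity_bytes(width: int, height: int,
                       channels: int = 3, bits_per_channel: int = 1) -> int:
    """Whole bytes that fit when the lowest `bits_per_channel` bits of
    every colour channel of every pixel are used for hidden data."""
    total_bits = width * height * channels * bits_per_channel
    return total_bits // 8  # partial bytes cannot hold a full character


# 42 x 42 RGB image, one LSB per channel: 5,292 bits -> 661 bytes
print(lsb_capacity_bytes(42, 42))
```

Passing a different `channels` value models images with more or fewer channels under the same assumptions.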

So, if the letter “A” has a binary value which is 1 byte or 8 bits long, we will need at least 3 pixels to encode it, as each pixel has a capacity of 3 bits. So, what does that look like with two new colours added with RGB values “98, 78, 01” and “86, 12, 198”:

Image 2 – Colour RGB (50,168,52), (98, 78, 01), (86, 12, 198)

Now we have a binary sequence of:

| | Pixel 1 | Pixel 2 | Pixel 3 |
|---|---|---|---|
| Red | 0011 0010 | 0110 0010 | 0101 0110 |
| Green | 1010 1000 | 0100 1110 | 0000 1100 |
| Blue | 0011 0100 | 0000 0001 | 1100 0110 |

Table 3 – RGB channel binary values making up pixels in image 2

When we use LSB steganography to inject the letter “A” into the pixel data the result is a manipulation of the far right value of each byte for each channel in order. This results in the following changes:

| | Pixel 1 | Pixel 2 | Pixel 3 |
|---|---|---|---|
| Red | 0011 0010 | 0110 0010 | 0101 0110 |
| Green | 1010 1001 | 0100 1110 | 0000 1101 |
| Blue | 0011 0100 | 0000 0000 | 1100 0110 |

Table 4 – LSB encoding results of letter “A” into RGB channels of image 2

This shows that the LSB values (highlighted in blue) between the two have changed:

- Original = 000 001 000

- Stegged = 010 000 010

From this, we can see that the first 8 LSBs are now “0100 0001”, which we confirmed earlier corresponds to the ASCII character “A”. The ninth LSB (the blue channel of pixel 3) is not needed and is left unchanged. But as we have changed the colour intensity values the pixels will be different. This difference appears as:

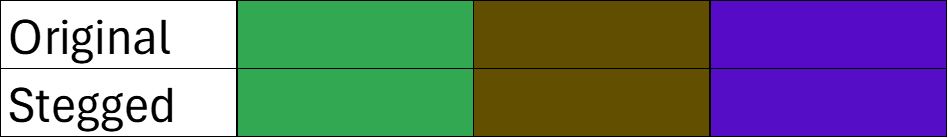

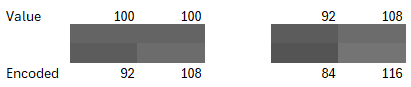

Image 3 – Contrast between original and altered pixel colours.

From the perspective of the HVS the two colours are identical because the minor variations are imperceptible to the human eye. A hidden message, data blob or even payload can be encoded by repeating the same process, with the bits of each hidden character written into the LSB of each pixel’s channels in sequence. LSB steganography does not employ any complex choice of which pixels to embed data into, and instead does so in a linear sequence.
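The walkthrough above can be reproduced with a minimal Python sketch. The helper names are hypothetical and the pixels are simply a list of RGB tuples; a real tool would also need to read and write an image format and record the payload length.

```python
from typing import List, Tuple

Pixel = Tuple[int, ...]


def bytes_to_bits(data: bytes) -> List[int]:
    """Most significant bit first, matching the 0100 0001 layout of "A"."""
    return [(byte >> shift) & 1 for byte in data for shift in range(7, -1, -1)]


def lsb_embed(pixels: List[Pixel], payload: bytes) -> List[Pixel]:
    """Write payload bits into the LSB of each channel, in linear order."""
    bits = bytes_to_bits(payload)
    flat = [value for pixel in pixels for value in pixel]
    if len(bits) > len(flat):
        raise ValueError("payload too large for cover pixels")
    for i, bit in enumerate(bits):
        flat[i] = (flat[i] & ~1) | bit  # clear the LSB, then set it to the bit
    return [tuple(flat[i:i + 3]) for i in range(0, len(flat), 3)]


def lsb_extract(pixels: List[Pixel], n_bytes: int) -> bytes:
    """Read n_bytes back out of the channel LSBs."""
    flat = [value for pixel in pixels for value in pixel]
    out = bytearray()
    for start in range(0, n_bytes * 8, 8):
        byte = 0
        for value in flat[start:start + 8]:
            byte = (byte << 1) | (value & 1)
        out.append(byte)
    return bytes(out)


cover = [(50, 168, 52), (98, 78, 1), (86, 12, 198)]
stego = lsb_embed(cover, b"A")
print(stego)                  # [(50, 169, 52), (98, 78, 0), (86, 13, 198)]
print(lsb_extract(stego, 1))  # b'A'
```

The printed values match Table 4: only three channel values change, each by a single intensity step.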

One way the capacity of this technique could be expanded is to manipulate not only the single LSB (LSB 0) but also LSB 1 and LSB 2. LSB 1 corresponds to the penultimate bit, LSB 2 to the bit preceding it, and so on. However, modification of the colour channel values further away from LSB 0 has a much greater effect on the generated colour and incurs a greater risk of visual detection by the human user. As such, modification of any bit other than LSB 0 is typically used only in more advanced techniques which account for where in the image the pixel being altered exists relative to the pixel values around it.

Alpha Channel Steg – LSB + 1

If an image is using the alpha channel, and as such the colour space RGBA, pixels have an additional channel assigned to them. The alpha channel is used to determine the opacity level of the pixel, effectively meaning how transparent the pixel should be. This value is required for composite images where multiple images are overlaid; the alpha value determines how the colour values of pixels from two different images should interact.

In relation to steganography, the addition of the alpha channel which is also given in most cases a 1 byte depth expands the LSB capacity per pixel from 3 to 4. This means that in the prior example instead of requiring a minimum of 3 pixels to encode a single ASCII character, we now only require 2. This changes the formula to the following:

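$$C_{\text{bytes}} = \left\lfloor \frac{W \times H \times 4}{8} \right\rfloor$$

where W and H are the image width and height in pixels.

Equation 2 – LSB + Alpha hidden data capacity in bytes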

If we use our prior example of an image with a pixel area of 42 x 42, instead of having 661 bytes of hidden data capacity we now get 882 bytes.

Palette based steganography – Colour Chart Covert Comms

Palette based images are normally used for extremely simple images such as logos or swatches. This is because palette based images have a pre-set index of colours, called the palette, which is used to generate the image. In the majority of use cases, images are stored in a palette based format to reduce the storage needed for the image, making it easier to embed and transmit. The colour palette size is typically 256, which allows a single byte to be used as the index ID for each entry. Each pixel in the image then stores a single byte index reference which determines which colour stored in the palette is to be used for that pixel.

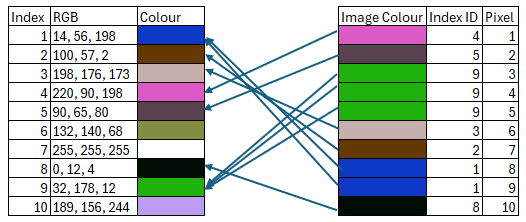

This looks something like this:

Image 4 – Palette based image pixel and index relationship representation.

Some formats can support palettes of reduced sizes such as 2, 4, or 16, however it is rare to see a palette size other than 256. Regardless of the palette size, the relation between pixel and palette remains the same. The only other factor to consider is the colour depth supported in the palette, again this is typically 8 bits however some can store 16, 24 or 32 bit colour values. The use of such high colour depth values in a palette can itself be considered anomalous in most cases, however, its presence does not alter the way in which the palette image is processed only the potential data size which can be used to hide data.

IS for palette based images typically does not rely upon the encoding of the colour channels as with other methods. This is due to the substantially limited range of colours that are present and the likely visual impact such modifications would have on the resulting image. To calculate the maximum capacity of a palette based image with a palette of the maximum size 256 using RGB we use the equation:

$$C_{\text{bytes}} = \frac{256 \times 3}{8} = 96$$

Equation 3 – Palette based hidden data capacity in bytes using LSB.

With the palette size at 256 the maximum capacity is an extremely limited 96 bytes using LSB. As the palette is limited, modification of LSB values other than LSB 0 is very likely to cause visual discrepancies which are noticeable to a human observer and as such is typically not used in such cases. However, hiding data in palette based images is still possible by instead manipulating the index order itself.

Let’s look at two examples of the ways palette based steganography can work.

Palette Index Reordering (PIR)

PIR is a primary form of palette based steganography. There are several ways this IS method can work, all of which require the palette index to be ordered into a specific pattern where the pattern contains the Hidden Data Stream (HDS). One theoretical technique works by converting a message into binary and then converting that into an index sequence relative to the original index of an image. Each modification of the index, such as the colour in index X now being in index Y, represents either a 0 or a 1. The new index sequence is then applied to the original image palette, creating a new permutation of the image palette which, when received, can be converted via the appropriate schema back into the binary sequence which makes up the hidden message.

As a basic example let’s use a palette of 16 colours. For this method of steganography we are not concerned with the colour values themselves, just their index values. When running the message “hello” through our encoder it generates the permutation “7, 4, F, 8, D, 9, 2, A, 1, E, 3, B, 5, 6, C”. When applied to the original palette the following occurs:

Image 5 – PIR encoding representation.

In the encoded image, shown by the colours in the bottom row, palette index 1 stores the colour data originally stored in index 7, index 2 stores the colour from index 4 and so on. The alterations in the palette sequencing represent 1’s and 0’s relative to the encoding schema, so by decoding the image using the same schema you would generate a binary sequence representing the string “hello”.

One of the main strengths of palette modification, as opposed to modification of the colour channels themselves, is that in most cases there is no visual impact on the image. Using the example above, the pixel which originally pointed at palette index 1 will now point at palette index 9; however, in the encoded image the modified index contains the exact same colour channel information. As such, the rendered image pixels display the exact same pattern.
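As a minimal sketch of how a permutation can carry data without changing the rendered image, the Python below maps the message to a palette ordering using the factorial number system (a Lehmer code). This is a swapped-in, textbook scheme chosen for illustration; it is not the encoder used to produce the permutation shown above, and all function names are hypothetical. It assumes every palette colour is unique and that the receiver holds the original palette.

```python
from math import factorial
from typing import List, Sequence, Tuple

RGB = Tuple[int, int, int]


def int_to_permutation(value: int, n: int) -> List[int]:
    """Map an integer in [0, n!) to a unique ordering of range(n)."""
    if not 0 <= value < factorial(n):
        raise ValueError("message too large for this palette size")
    available = list(range(n))
    perm = []
    for remaining in range(n, 0, -1):
        digit, value = divmod(value, factorial(remaining - 1))
        perm.append(available.pop(digit))
    return perm


def permutation_to_int(perm: Sequence[int]) -> int:
    """Inverse of int_to_permutation."""
    available = sorted(perm)
    value = 0
    for i, item in enumerate(perm):
        digit = available.index(item)
        available.pop(digit)
        value += digit * factorial(len(perm) - 1 - i)
    return value


def pir_encode(palette: List[RGB], pixels: List[int], message: bytes):
    """Reorder the palette to carry `message`; remap pixels so the image is unchanged."""
    perm = int_to_permutation(int.from_bytes(message, "big"), len(palette))
    new_palette = [palette[old] for old in perm]           # perm[new] = old
    old_to_new = {old: new for new, old in enumerate(perm)}
    return new_palette, [old_to_new[p] for p in pixels]


def pir_decode(original: List[RGB], stego_palette: List[RGB], length: int) -> bytes:
    """Recover the message by comparing the received palette with the original."""
    perm = [original.index(colour) for colour in stego_palette]
    return permutation_to_int(perm).to_bytes(length, "big")


palette = [(i * 16, i * 8, 255 - i * 16) for i in range(16)]  # 16 distinct colours
pixels = [0, 5, 5, 15, 9]
stego_palette, stego_pixels = pir_encode(palette, pixels, b"hello")
print(pir_decode(palette, stego_palette, 5))  # b'hello'
```

Because each pixel reference is remapped along with the palette, `stego_palette[stego_pixels[k]]` equals `palette[pixels[k]]` for every pixel, which is why no visual change occurs.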

Let’s think of a practical use case. Imagine two people emailing each other, where their emails contain a corporate logo, stored as a palette image, as part of the formatting. The sender and recipient are in possession of the original image used for the logo as well as an encoding schema/tool to generate and decode permutations of a 256 range index. The users would be capable of making covert communications as a sub-channel in those emails without any observed visual deviation.

The capacity of PIR techniques is directly related to the number of unique permutations possible: the capacity in bits is the base 2 logarithm of that number. The equation for a full index PIR is:

$$C_{\text{bits}} = \log_2(256!) \approx 1684$$

Equation 4 – PIR hidden data capacity for 256 palettes using entire available sequences

This results in a theoretical maximum capacity of approximately 1684 bits, or 210 bytes. In practice this would be unlikely due to the complexity of the encoding schema required; it is more likely that segment encoding would be used, as this reduces the complexity of the encoder but also the capacity of the HDS.
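The capacity figure can be checked with a short Python calculation. The function name is an illustrative assumption.

```python
import math


def pir_capacity_bits(palette_size: int) -> float:
    """Bits that one full reordering of the palette can represent: log2(n!)."""
    return math.log2(math.factorial(palette_size))


bits = pir_capacity_bits(256)
print(round(bits), round(bits) // 8)  # 1684 210
```

For the 16 colour example palette the same calculation gives just over 44 bits, which is enough for the 5 byte (40 bit) string “hello”.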

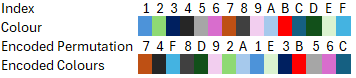

EZ steg

One of the most popular methods of palette based IS, and used as a basis for other techniques, EZ steg (devised by Machado in 1997) works on the principle of first reordering the palette based on colour luminance values. Luminance is the “brightness” of a pixel relative to its colour, based on the way humans perceive certain colours to be brighter than others. Colours with close luminance values are normally also close to each other in the colour space. In digital images, perceived luminance is typically calculated with the following formula:

$$Y = 0.299R + 0.587G + 0.114B$$

Equation 5 – Perceived luminance

The HDS is then broken down into its binary components and encoded into each pixel’s palette index reference. As the colours adjacent to each other in the palette should now be very similar, based on their luminance value, the LSB of the index reference is modified when required by increasing or decreasing its value by 1 to reflect the current bit of the data stream being encoded.

The luminance reordering in a tiny subset of palette entries results in the following changes:

Image 6 – EZ colour luminance re-ordering

From this we can see that many colours that we perceive to be very close matches to each other are now grouped together. However, this is not infallible, as is demonstrated between the index entries with luminance values 68.151 to 76.536 where shades of brown and grey are sequenced next to each other. This is one of the primary limitations of EZ steganography and can lead to significant discrepancies between the original and altered image.

When the palette is re-ordered the pixel references are also updated to match the new locations of the original colours pointed to by the pixels. EZ steg then uses the index reference’s LSB to switch between a 1 or 0 as required, moving the index pointed to by the pixel up or down the palette by 1. In the EZ colour palette, the index ID of 8 has the binary value “0000 1000” with the LSB being 0. If the first pixel is pointed at index 8 but the first bit of the hidden message is a 1, the pixel’s index reference would be increased by 1 to “0000 1001”, resulting in the pixel now using the colour stored in palette index 9. In this case, the two colours are very visually similar and so the alteration is unlikely to be detected.

However, as mentioned the visual difference between example index 4 and 5 with luminance scores 68.151 and 70.494 respectively is significant. Although the luminance order is correct, switching the LSB of index 4 which is “0000 0100” to a 1 “0000 0101” would result in the pixel now pointing to 5 and displaying an easily detected discrepancy.

In this example, we are using a tiny sample set of 16 colours whereas in real cases there would be up to 256 colours ordered. Despite this consideration, it is not uncommon when using EZ steganography for these jumps to significantly different colours to occur accidentally as part of the encoding process.
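The process described in this section can be sketched as follows. This is a simplified illustration rather than the original EZ steg tool: it assumes the common 0.299/0.587/0.114 luminance weights, an even palette size, and one hidden bit per pixel in linear order. Instead of physically rewriting the palette it works with each colour's position in the luminance-sorted order, which has the same effect. All names are hypothetical.

```python
from typing import Dict, List, Sequence, Tuple

RGB = Tuple[int, int, int]


def luminance(rgb: RGB) -> float:
    """Perceived luminance using the widely used 0.299/0.587/0.114 weights."""
    r, g, b = rgb
    return 0.299 * r + 0.587 * g + 0.114 * b


def _sorted_order(palette: Sequence[RGB]) -> Tuple[List[int], Dict[int, int]]:
    """Return (order, rank): order[pos] is a palette index, rank is its inverse."""
    order = sorted(range(len(palette)), key=lambda i: luminance(palette[i]))
    rank = {index: pos for pos, index in enumerate(order)}
    return order, rank


def ez_embed(palette: Sequence[RGB], pixels: Sequence[int],
             bits: Sequence[int]) -> List[int]:
    """Set the LSB of each pixel's sorted position to the next hidden bit."""
    if len(palette) % 2 or len(bits) > len(pixels):
        raise ValueError("need an even palette size and enough pixels")
    order, rank = _sorted_order(palette)
    out = list(pixels)
    for k, bit in enumerate(bits):
        position = (rank[pixels[k]] & ~1) | bit  # move to a luminance neighbour
        out[k] = order[position]
    return out


def ez_extract(palette: Sequence[RGB], pixels: Sequence[int],
               n_bits: int) -> List[int]:
    """Read the hidden bits back from the sorted positions."""
    _, rank = _sorted_order(palette)
    return [rank[p] & 1 for p in pixels[:n_bits]]


palette = [(0, 0, 0), (255, 255, 255), (10, 10, 10), (250, 250, 250)]
pixels = [0, 1, 2, 3, 0]
hidden = [1, 0, 1, 1, 0]
stego_pixels = ez_embed(palette, pixels, hidden)
print(ez_extract(palette, stego_pixels, len(hidden)) == hidden)  # True
```

In this toy palette each pixel can only move between two near-identical colours. With a real palette, neighbours in luminance order may differ sharply in hue, which is the weakness described above.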

Discrete Cosine Transformation Steganography (DCT)

This method of steganography only works with image formats which are stored in the frequency domain, such as JPEG. When a JPEG image is written to disk, it is stored as a JPEG File Interchange Format (JFIF) file, which contains the frequency information for the associated image. When opened/rendered, the frequency information in the JFIF is decompressed using the JPEG decompression method, which translates those frequencies into pixel values, moving the image from the frequency domain to the spatial domain.

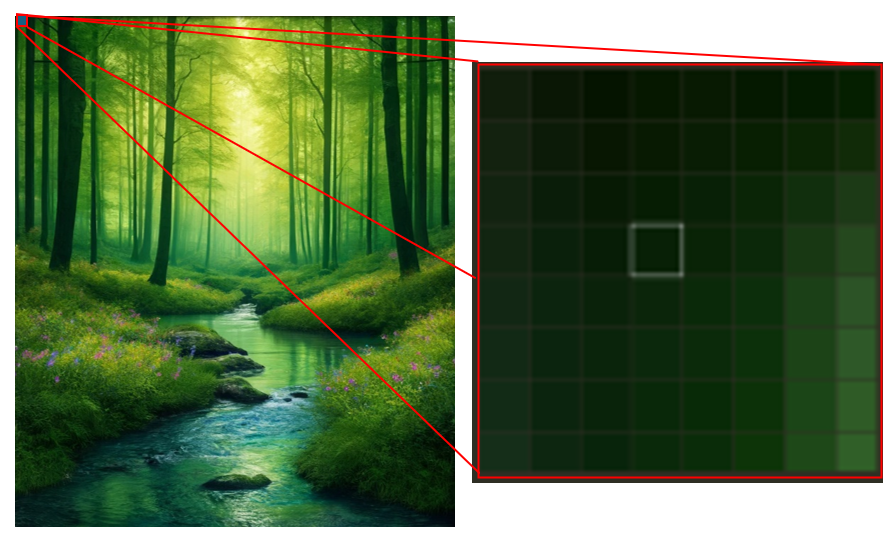

Image 7 – JPEG image of woodland, AI generated. Pixel grid 8x8 from location (0,0).


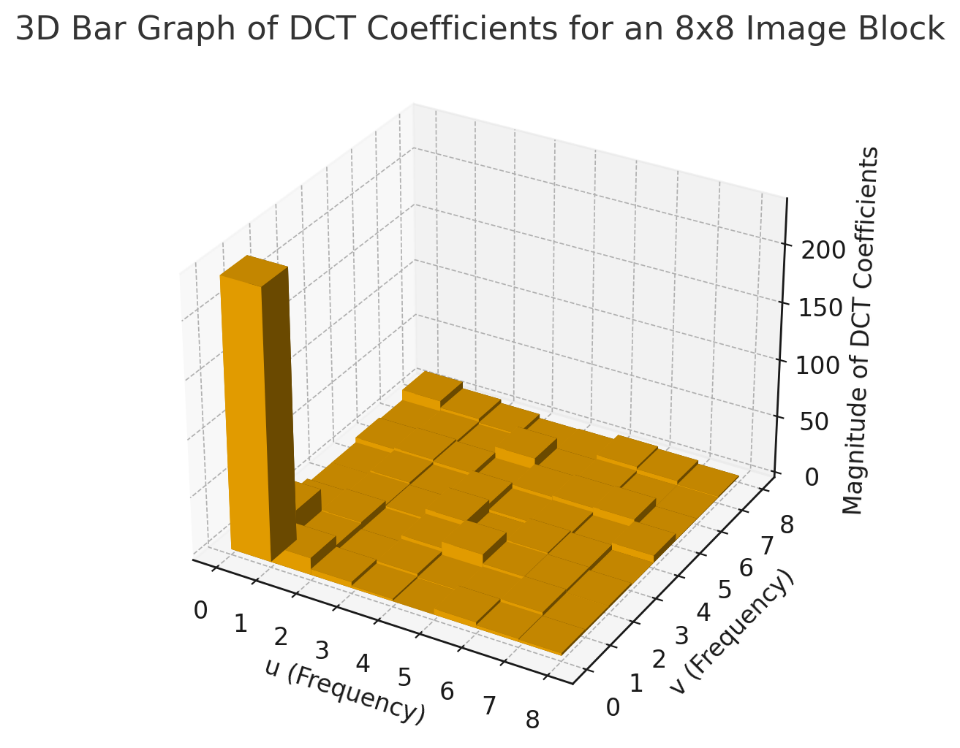

Image 8 – Frequency cosine representation of 8x8 pixel grid at location (0, 0) from Image 7

Instead of altering the pixel data in the spatial domain, DCT steganography encodes the HDS into the coefficients in the frequency domain. Changes to the coefficients result in multiple pixels being affected, but in very subtle ways if the modifications made are minor (typically to the LSB value) and limited to the higher frequencies. The higher frequencies are selected because they affect finer details, where the HVS is less sensitive to alterations than it is in the lower frequencies.

This means that DCT steganography allows for the same capacity as LSB steganography, if not more, depending on the tolerance of alteration permitted. DCT steganography results in a distributed deviation in the resulting image rather than the specific and significant alterations which occur when spatial modifications are made. As specific alterations may be more easily detected by the HVS when adjacent pixels have sudden significant deviations, DCT steganography has a greater chance of going undetected by an end user.

Assuming the sender and recipient use the same DCT steganography system, they may or may not use a key to determine which coefficients are modified or use a standard determination. As the JFIF format is already compressed it is less likely to be affected by transmission compression making it slightly more robust than other methods.
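To illustrate the idea, the Python sketch below transforms a single 8x8 block with an orthonormal DCT, rounds the coefficients to integers, and hides bits in the LSBs of a fixed set of high-frequency coefficients. It is a toy model under stated assumptions: there is no JPEG quantisation table, entropy coding or file parsing, and the `HIGH_FREQ` positions stand in for whatever selection rule or key the sender and recipient agree on.

```python
import math
from typing import List, Sequence

N = 8


def _scale(k: int) -> float:
    return math.sqrt((1 if k == 0 else 2) / N)


def _basis(x: int, u: int) -> float:
    return math.cos((2 * x + 1) * u * math.pi / (2 * N))


def dct2(pixels: Sequence[Sequence[float]]) -> List[List[float]]:
    """Orthonormal 2D DCT-II of an 8x8 block (spatial -> frequency)."""
    return [[_scale(u) * _scale(v) * sum(
                pixels[x][y] * _basis(x, u) * _basis(y, v)
                for x in range(N) for y in range(N))
             for v in range(N)] for u in range(N)]


def idct2(coeffs: Sequence[Sequence[float]]) -> List[List[float]]:
    """Inverse transform (frequency -> spatial)."""
    return [[sum(_scale(u) * _scale(v) * coeffs[u][v] * _basis(x, u) * _basis(y, v)
                 for u in range(N) for v in range(N))
             for y in range(N)] for x in range(N)]


# Assumed embedding positions: the eight highest-frequency coefficients.
HIGH_FREQ = [(7, 7), (7, 6), (6, 7), (6, 6), (7, 5), (5, 7), (6, 5), (5, 6)]


def embed(coeffs: List[List[int]], bits: Sequence[int]) -> List[List[int]]:
    """Write each bit into the LSB of one high-frequency coefficient."""
    out = [row[:] for row in coeffs]
    for (u, v), bit in zip(HIGH_FREQ, bits):
        out[u][v] = (out[u][v] & ~1) | bit
    return out


def extract(coeffs: Sequence[Sequence[int]], n_bits: int) -> List[int]:
    return [coeffs[u][v] & 1 for (u, v) in HIGH_FREQ[:n_bits]]


block = [[(x * 8 + y * 3) % 256 for y in range(N)] for x in range(N)]
stored = [[round(c) for c in row] for row in dct2(block)]
message = [0, 1, 0, 0, 0, 0, 0, 1]  # the bits of "A"
stego = embed(stored, message)

before, after = idct2(stored), idct2(stego)
max_delta = max(abs(a - b) for ra, rb in zip(after, before) for a, b in zip(ra, rb))
print(extract(stego, 8) == message, max_delta <= 2.0)  # True True
```

Each altered coefficient shifts every pixel in the block by at most 0.25 intensity levels, so eight altered coefficients move any one pixel by at most 2 levels. The change is spread thinly across the block rather than concentrated in individual pixels.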

Pixel Value Differencing Steganography (PVDS)

PVDS is a method of IS which does not directly encode the HDS into the binary values of an image but instead applies a mathematical process to hide the data stream. Whereas in the prior examples the hidden data is stored typically in the LSB, PVDS aims to make modifications to the LSB of pixels but not so that they correspond to the hidden data sequence. Instead, PVDS will calculate the difference between the values of colour channels in pixels adjacent to each other and manipulate this difference to encode the data.

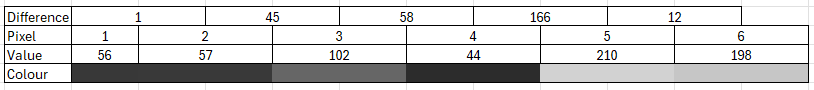

Using a greyscale image with a colour range of 0 to 255 as an example we can easily see the calculated differences between the pixels.

Image 9 – Pixel value differences in greyscale

Whereas with LSB steganography we would be attempting to encode the HDS into the value of the pixel, with PVDS we instead encode it into the difference between two adjacent pixels. This is achieved by adding to or subtracting from the pixel values to achieve the desired difference.

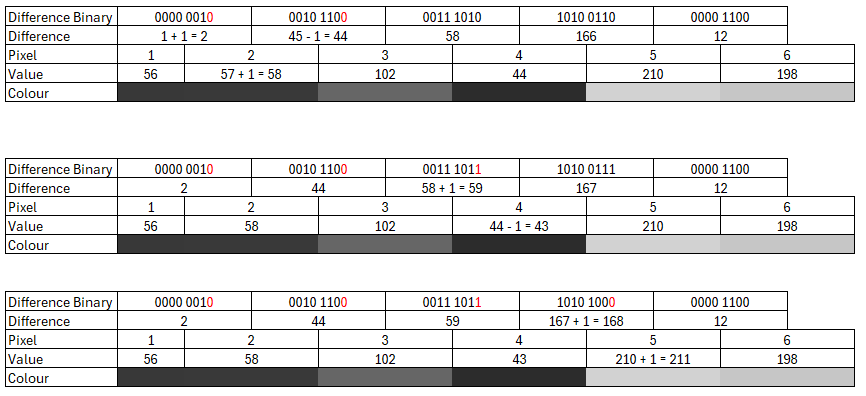

As an example, let’s assume we have the symbol “)” as a binary sequence “0010 1001” and we want to insert this into the sequence above in the LSB 0 position of the difference values between pixels. The difference between pixel 1 and pixel 2 is “1” which has an LSB of “1” whereas the first binary value of “)” is 0. To change this, we adjust the values of pixel 1 and 2 in order to increase or decrease the difference value so that its LSB is 0. In this case we can increase the value of pixel 2 by 1 which results in a difference of 2 which does have an LSB of 0. Subsequently, the difference between pixel 2 and 3 is altered as we have changed the value of pixel 2 which is changed from 45 to 44.

The next binary value of “)” is another 0, which the new difference of 44 already has as its LSB, so by changing the value of pixel 2 we have effectively encoded 2 bits of the HDS “)” binary sequence already. The process is continued, with the alterations typically being made to exacerbate the difference that already exists rather than reduce it. However, calculations are normally made within the encoder to determine the minimum alteration required to encode the desired bit sequence, and to handle pixel values already at their threshold, rather than strictly exacerbating the difference.

Image 10 – PVDS encoding example for symbol “)” first four bits as PVDS LSB 0
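A simplified version of this example can be sketched in Python. The pixel values below are hypothetical stand-ins for those in the figure, and the encoder is deliberately naive: it walks the pixels left to right, only ever adjusts the right-hand pixel of each pair by 1, and prefers to widen the existing difference unless that would leave the 0 to 255 range.

```python
from typing import List, Sequence


def pvd_embed(pixels: Sequence[int], bits: Sequence[int]) -> List[int]:
    """Make the LSB of each adjacent-pixel difference equal the next hidden bit."""
    if len(bits) > len(pixels) - 1:
        raise ValueError("not enough pixel pairs for this payload")
    out = list(pixels)
    for k, bit in enumerate(bits):
        left, right = out[k], out[k + 1]
        if (abs(right - left) & 1) == bit:
            continue  # the difference already encodes this bit
        step = 1 if right >= left else -1  # widen the existing difference
        if not 0 <= right + step <= 255:
            step = -step  # at the range limit, narrow it instead
        out[k + 1] = right + step
    return out


def pvd_extract(pixels: Sequence[int], n_bits: int) -> List[int]:
    """Read the hidden bits from the LSB of each adjacent difference."""
    return [abs(pixels[k + 1] - pixels[k]) & 1 for k in range(n_bits)]


cover = [100, 101, 146, 150]       # differences: 1, 45, 4
stego = pvd_embed(cover, [0, 0, 1])
print(stego)                       # [100, 102, 146, 151]
print(pvd_extract(stego, 3))       # [0, 0, 1]
```

As in the walkthrough, raising the second pixel by 1 changes the first difference from 1 to 2 and the second from 45 to 44, so a single adjustment leaves both differences with an LSB of 0.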

This example of PVDS is extremely simple due to its limited bit choices and linear application, whereas in real use-cases far more complex strategies are likely to be employed. They aim to maximise the HDS whilst maintaining minimal visual disruption by taking advantage of the HVS’s weakness in perceiving modifications to pre-existing colour differences. It is important to note that PVDS typically will not work only on LSB 0 but may work up to LSB 3 if the tolerance is deemed high enough.

To do this, three common strategies are employed: a difference threshold for linear sequencing, a range assignment for data segment embedding, or a scan for edge pixels.

A threshold, let’s call it K, is the definition of the minimum difference that must exist between two pixels before information is permitted to be embedded. The value of K is designed to identify pairs of pixels where the difference between them is significant enough that it can be modified without obvious visual effect and that the difference is large enough that it can contain a minimum data segment without obvious visual disruptions. If you had 2 pixels beside each other that had the same or very closely matching values, and the encoder required an LSB modification of LSB 0 to 3 which has a maximum effect of 15, the visual impact would be identifiable by a human viewer. However, if the difference between the two pixels was K or higher it is assumed that further modifications would be imperceptible to a human viewer.

In the example below, comparing the pixels in vertical order, the original and encoded values of the sample pixels on the left are easy to tell apart. However, the example pixels on the right are harder for the human eye to distinguish.

Image 11 – Difference threshold example

Range assignment works similarly to the difference threshold but instead breaks ranges down into capacity determinations. This is a specification that if a difference between two pixels is between X and Y, then it can only support the encoding of Z bits from the HDS. The ranges can look something like:

| Difference | LSB range available | Binary |
|---|---|---|
| 0-16 | N/A | 0000 |
| 17-62 | 0 | 0001 |
| 63-126 | 0-1 | 0011 |
| 127-184 | 0-2 | 0111 |
| 186-255 | 0-3 | 1111 |

Table 5 – PVDS ranges example

This means that the encoding of the HDS is still conducted in a linear fashion against the pixels, but the embedding depth is dynamically adjusted depending on their original distance. As in the previous example, this ensures that only minor changes can be made to pixels in close proximity in the colour space, whilst those with a great divide can be modified more significantly. As the more significant changes only occur within pixels with greater distance between them already, the effect is not easily detected by the human eye. Tables can also be modified so that specific difference ranges or specific LSB depths are avoided, such as LSB 0. This adds more complexity, as one isolated range may interact with LSB 1 and 3 while the next range interacts only with LSB 2. This can make HDS distribution and, as a result, decoding and detection more complex.
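A range table like Table 5 can be expressed as a simple lookup. In this sketch the function names are hypothetical. Note also that the example table lists no range containing a difference of 185; the code assumes it belongs to the top range, which is a guess made only so that every difference has an answer.

```python
from bisect import bisect_left

# Upper bound of each difference range and the number of LSBs it may carry.
_UPPER_BOUNDS = [16, 62, 126, 184, 255]
_BITS = [0, 1, 2, 3, 4]


def bits_for_difference(diff: int) -> int:
    """Number of low-order bits that may be modified for a pixel difference."""
    if not 0 <= diff <= 255:
        raise ValueError("difference must be between 0 and 255")
    return _BITS[bisect_left(_UPPER_BOUNDS, diff)]


def lsb_mask(diff: int) -> int:
    """Bit mask of the modifiable bits, matching the Binary column."""
    return (1 << bits_for_difference(diff)) - 1


for diff in (10, 17, 100, 150, 200):
    print(diff, bits_for_difference(diff), format(lsb_mask(diff), "04b"))
```

An encoder would call `bits_for_difference` on each pixel pair to decide how many bits of the HDS to consume, and a decoder with the same table would read back the same number.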

The final method used is a scan of an image to determine edge pixels. This is the most complex method of PVDS but also the most effective. Whereas the prior two methods can be used in a linear fashion against all pixels in an image, edge scanning instead identifies pixels in an image which make up the edges between two distinct colour spaces before performing encoding. This is done because another weakness of the HVS is its poor distinction of variations in edges. Significant fluctuations in the edges of objects in images are “ignored” by the HVS, which introduces a high capacity pixel modification space to be exploited. Alterations against edges can also be controlled using a threshold range table like the one above. This would be done in order to dynamically adjust the HDS embedding depth in the edges detected, making it even harder to detect and decode without the original range table.

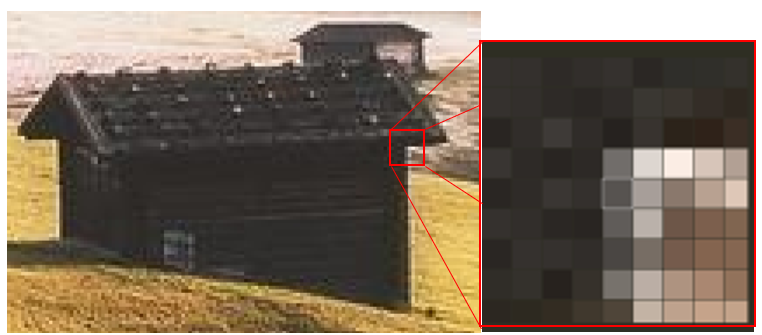

Using a kernel/grid of 1x2 or 2x2, the encoding program will scan the image for pixels that exceed a relatively high threshold in a specific pattern which typically identifies an edge. In the image below you can see an example of what the scan would determine to be an edge, which in this case is the edge of a wall of a hut. The stark colour difference between the adjacent pixels allows for maximum encoding capacity with a very low probability of being observable by a human viewer.

Image 12 - Pixel edge example

Spread Spectrum Image Steganography (SSIS)

Spread Spectrum Image Steganography (SSIS) is a complex method and works by effectively leveraging expected noise in an image to encode the HDS. See US6557103B1.

SSIS works by modulating a narrow band signal, which is the HDS, and spreading it across the spectrum of the cover image. This is done so that the modification in the image replicates the noise expected in photoelectronic images such as background radiation, UV spikes, sun flares etc. In practice this means taking the binary sequence of the HDS and spreading and modulating it into an image via the use of a Pseudo Random Noise Generator (PRNG) sequence. This can be done in the frequency or spatial domain; for this example we will explain it in the spatial domain.

A key which the sender and receiver both know is used with the PRNG to generate a specific encoding and subsequent decoding sequence. This sequence determines the pixels which are to be modified (the spreading) and to what degree each individual pixel should be modified (the modulation). In the spatial domain this means altering the binary values of the colour channels of the pixels to encode the HDS; however, the modulation sequence means that each selected pixel will be altered in a different way. This means that, unlike other methods which move up from LSB 0, SSIS allows modification of specific bits in the channel independently. Despite this, a range limit from the LSB is normally maintained to prevent significant and therefore detectable modifications.

The encoded image should be imperceptibly altered when compared to the original regarding the HVS as well as steganalysis. As the alterations aim to replicate expected noise, steganalysis should determine the changes (without access to the original image) as typical spectral fluctuations rather than patternable or detectable modifications. As both the sender and recipient have the PRNG key, the receiver can generate the required modulation and spreading sequence to identify which pixels have been altered and in what way. As an external observer it would be nearly impossible to identify this form of encoding without access to the original image or the PRNG key.
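The keyed selection described above can be illustrated with a toy Python sketch. It is not an implementation of SSIS and does not reproduce its signal-processing steps; it only shows how a shared key can drive a PRNG that decides which channel values are touched (the spreading) and which low-order bit of each is used (the modulation). The function names, the use of Python's `random.Random` as the PRNG and the `max_depth` limit of 3 bits are all assumptions.

```python
import random
from typing import List, Sequence, Tuple


def _plan(key: str, n_values: int, n_bits: int,
          max_depth: int) -> Tuple[List[int], List[int]]:
    """Derive the same positions and bit depths on both sides from the key."""
    rng = random.Random(key)
    positions = rng.sample(range(n_values), n_bits)           # which values
    depths = [rng.randrange(max_depth) for _ in range(n_bits)]  # which bit of each
    return positions, depths


def keyed_embed(values: Sequence[int], bits: Sequence[int], key: str,
                max_depth: int = 3) -> List[int]:
    """Hide each bit in a key-selected bit of a key-selected channel value."""
    if len(bits) > len(values):
        raise ValueError("payload too large for cover data")
    positions, depths = _plan(key, len(values), len(bits), max_depth)
    out = list(values)
    for pos, depth, bit in zip(positions, depths, bits):
        out[pos] = (out[pos] & ~(1 << depth)) | (bit << depth)
    return out


def keyed_extract(values: Sequence[int], n_bits: int, key: str,
                  max_depth: int = 3) -> List[int]:
    """Regenerate the plan from the key and read the hidden bits back."""
    positions, depths = _plan(key, len(values), n_bits, max_depth)
    return [(values[pos] >> depth) & 1 for pos, depth in zip(positions, depths)]


cover = [(i * 37) % 256 for i in range(64)]  # stand-in for channel values
message = [0, 1, 0, 0, 0, 0, 0, 1]
stego = keyed_embed(cover, message, key="shared secret")
print(keyed_extract(stego, len(message), key="shared secret") == message)  # True
```

Without the key an observer does not know which values or which bits to read, and because at most one bit in the lowest three is changed per value, no channel value moves by more than 4 intensity levels.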

Investigation into steganography mitigation

After identifying commonly utilised steganography methods and determining how they operate, we began our investigation into what mechanisms could be used to mitigate them. The two core objectives of this research were first to find methods which could effectively and consistently corrupt the HDS in stegged images and second to minimize the visual degradation such methods cause. As mentioned previously, the balance between these two objectives can result in a compromise, as it is often difficult to mitigate steganography without altering the image in some way. In some respects, the key is to make the alterations as imperceptible to the HVS as those of the original steganographic encoding method.

We considered adding steganalysis to our mitigation mechanism to only make alterations on images where steganography was likely to occur. However, steganalysis is resource intensive and is not infallible with the primary goal of some steganography techniques being to specifically evade such analysis and detection. Instead, we conducted our investigation under the assumption that the mitigation methods identified would be applied to all images processed without exception. This meant that we would have to assume that image modification and subsequent degradation would always occur if the mitigation method were appropriate to the image format being processed.

To measure the effect the proposed mitigation methods would have, we decided to employ multiple statistical values to accurately track deviations occurring between the original and processed image. These are:

- Mean Absolute Error (MAE) – Used to quantify the difference between two images based on their pixel values. This measurement accounts for all deviations/errors encountered between the two images.

Equation 6 – MAE equation

- Mean Square Error (MSE) – Used to quantify the difference between two images based on their pixel values. The deviation/errors are squared resulting in a greater sensitivity to larger deviations and a squashing of minor deviations. This measurement is tailored for significant alteration detection.

Equation 7 – MSE equation for greyscale image [1]

- Peak Signal to Noise Ratio (PSNR) – Defines the ratio between the maximum possible power of the signal in an image and the power of the introduced noise (errors), typically measured as MSE.

Equation 8 – PSNR equation

- Structural Similarity Index Measurement (SSIM) – A perception-based metric which treats modification as structural change in the image. It considers aspects other than absolute errors, such as luminance.

Equation 9 – SSIM equation
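The two pixel-deviation metrics above can be sketched in a few lines. This is a minimal illustration rather than the tooling used in the research: it assumes 8-bit channel values supplied as flat sequences and divides by 255 so the results fall between 0 and 1, in line with the scale described for these statistics. The function names `mae` and `mse` are illustrative only.

```python
def mae(original, processed, max_value=255):
    """Mean absolute error of two equal-length channel sequences, scaled to 0..1."""
    if len(original) != len(processed):
        raise ValueError("images must contain the same number of samples")
    total = sum(abs(a - b) for a, b in zip(original, processed))
    return total / (len(original) * max_value)


def mse(original, processed, max_value=255):
    """Mean squared error of two equal-length channel sequences, scaled to 0..1."""
    if len(original) != len(processed):
        raise ValueError("images must contain the same number of samples")
    # Normalise each deviation before squaring so small errors are squashed
    # and large errors dominate the result.
    total = sum(((a - b) / max_value) ** 2 for a, b in zip(original, processed))
    return total / len(original)
```

Because each deviation is squared after normalisation, a single-step change in one channel contributes far less to MSE than to MAE, which is why MSE is the better indicator of significant alterations.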

By using these statistics, we were able to measure both strict pixel deviations and automated perception deviations. Using the HVS as a basis, we began by modifying images using various filters and effects at varying intensities. For each process we calculated the statistical deviation and from this established an HVS baseline for each metric, where a breach of the baseline indicates that the change is likely to be perceived by the HVS. These baselines are subject to the researcher’s visual acuity, and individual HVS will vary considerably; however, as with all experimentation, the available resources introduce limitations.

The baselines determined are as follows:

- MAE = Below 0.01
  - Palette based images = Below 0.015
- MSE = Below 0.0025
- PSNR = Above 0.4
  - Palette based images = Above 0.35
- SSIM = Above 0.9

The first two metrics increase between 0 and 1 the more errors/deviations are detected between the two images with 0 meaning identical and 1 meaning complete corruption. The latter two metrics decrease from 1 to 0 as more deviations are detected with 1 meaning identical and 0 meaning complete corruption.
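The baselines and their directions can be captured as a small lookup, sketched below. The threshold values are those listed above; the structure and the names `BASELINES`, `PALETTE_OVERRIDES` and `breached_metrics` are assumptions made for illustration.

```python
# Direction and limit for each metric, as listed in the baselines above.
BASELINES = {
    "MAE": ("below", 0.01),
    "MSE": ("below", 0.0025),
    "PSNR": ("above", 0.4),
    "SSIM": ("above", 0.9),
}

# Relaxed limits that apply only to palette based images.
PALETTE_OVERRIDES = {
    "MAE": ("below", 0.015),
    "PSNR": ("above", 0.35),
}


def breached_metrics(stats, palette=False):
    """Return the names of the metrics in `stats` that breach their baseline."""
    limits = dict(BASELINES)
    if palette:
        limits.update(PALETTE_OVERRIDES)
    breached = []
    for name, (direction, limit) in limits.items():
        value = stats[name]
        within = value < limit if direction == "below" else value > limit
        if not within:
            breached.append(name)
    return breached
```

An empty list means the averaged statistics for a format stay within every baseline, which is the condition a mitigation method has to meet to be considered imperceptible.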

Palette based images introduced deviations in the statistics observed which needed to be accounted for. These deviations can be caused due to the limited data set/colour set which can be affected during processing. As the set was significantly smaller and the images typically simpler it was found that slightly higher statistical deviations were required to achieve HVS detection. This is reflected in the modified baselines for both MAE and PSNR.

Determining mitigation success required encoding and decoding tools for each tested IS technique. During experimentation, each mitigation technique was tested against the compatible image formats from the test set. Each supported image in the test set had a key phrase encoded into it using the corresponding steganography tool. The encoded image was tested before mitigation to ensure that decoding/extraction of the key phrase was successful. After mitigation, the same decode/extraction operation was run against the generated image to determine whether the output matched the key phrase originally encoded. If the phrase matched, the mitigation was considered a failure; if the content was corrupted, the mitigation was considered a success.

The test set is made up of images of differing complexity, such as clear blue skies, scenery, and a human portrait, translated into multiple formats and colour profiles, giving a total test set of 42 images. For each mitigation method only the supported image formats were tested. In the following, format names are appended with either “P” or “TC” to indicate palette or TrueColour image formats respectively. All statistics documented here are the averaged statistical deviation observed across the test set for each specific format. This provides an overall determination of each mitigation’s impact against varying file types with a range of image complexities considered.

Palette shuffling – Mitigating palette based techniques all at once

Palette based IS typically revolves around reordering the palette to encode information. As specified in chapter 1, this is because the colour depth available to such images is restricted, creating an extremely low HDS bandwidth. As palette IS instead requires the palette to be sequenced so that pixel index references can carry the HDS, the weak point of these methods is the expected palette index sequence.

Palette shuffling involves taking the colour stored in one index and randomly allocating it to another index in the palette. Imagine you have red stored in index Y and blue stored in index X. Palette shuffling will move the colour red to be hosted in index X and blue instead will be moved to index Z. All pixels pointing to index Y are altered to now point to index X where the colour has been moved to. This results in the palette being completely reordered but the rendered image being unaffected as the pixels still relate to the same colours.

By randomising the colour locations, the index based encoding is corrupted and the HDS is broken. However, this mitigation can be reverted if the ordering follows a specific schema such as luminance values in EZ steg. As the recipient could reorder the palette themselves to achieve the same sequence the randomisation can be reverted. This is why although palette shuffling does disrupt index encoding in the transmitted image, it can be important that it is paired with another mitigation method which will affect the colour values themselves. Slight alterations in the colour values can severely disrupt the calculations used to achieve these specific sequences and as such is required to prevent the randomisation from being reverted.
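The shuffling step, and the reason it can be reverted, can be sketched as follows. This is an illustrative sketch only: the palette is assumed to be a list of RGB tuples, pixels a list of palette indices, and the names `shuffle_palette`, `render` and `luminance` are hypothetical. The luminance weights are the common 0.299/0.587/0.114 set, used here as an assumption about how a luminance-ordered scheme might sort.

```python
import random


def shuffle_palette(palette, pixels, rng=None):
    """Randomly reorder a palette and remap pixel indices to match."""
    rng = rng or random.Random()
    order = list(range(len(palette)))  # order[new_index] = old_index
    rng.shuffle(order)
    new_palette = [palette[old] for old in order]
    # Every pixel that pointed at an old index must follow its colour.
    remap = {old: new for new, old in enumerate(order)}
    new_pixels = [remap[p] for p in pixels]
    return new_palette, new_pixels


def render(palette, pixels):
    """Resolve palette indices to the colours that would be displayed."""
    return [palette[p] for p in pixels]


def luminance(rgb):
    """Approximate perceived brightness of an RGB colour."""
    r, g, b = rgb
    return 0.299 * r + 0.587 * g + 0.114 * b
```

The rendered output is identical before and after shuffling, but sorting either palette by `luminance` produces the same sequence. A recipient who knows the ordering scheme can therefore rebuild the expected palette order, which is why the colour values themselves also need to be altered.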

LSB stomping

LSB stomping (also referred to here as “modified LSB” or “least significant bit modification”) targets the LSB steganography method outlined earlier in this paper. It is achieved by overwriting the LSBs of the channels in each pixel with either a 0 or a 1. Considering that many steganography methods rely on encoding data in some way into the LSB, LSB stomping can be an effective method of mitigation.
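A minimal sketch of the idea is shown below. Whether the bit is forced to a fixed value or randomised is not stated, so the `mode` parameter and the function name `stomp_lsb` are assumptions made for illustration; the input is assumed to be a flat sequence of 8-bit channel values.

```python
import random


def stomp_lsb(channels, mode="zero", rng=None):
    """Overwrite LSB 0 of every 8-bit channel value.

    mode is "zero", "one" or "random" (hypothetical options for this sketch).
    """
    rng = rng or random.Random()
    stomped = []
    for value in channels:
        if mode == "zero":
            bit = 0
        elif mode == "one":
            bit = 1
        elif mode == "random":
            bit = rng.getrandbits(1)
        else:
            raise ValueError("mode must be 'zero', 'one' or 'random'")
        # Clear the lowest bit, then set it to the chosen value.
        stomped.append((value & 0xFE) | bit)
    return stomped
```

Each channel changes by at most one step out of 255, which is why the statistical impact is small. Any payload stored in higher bits is left untouched.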

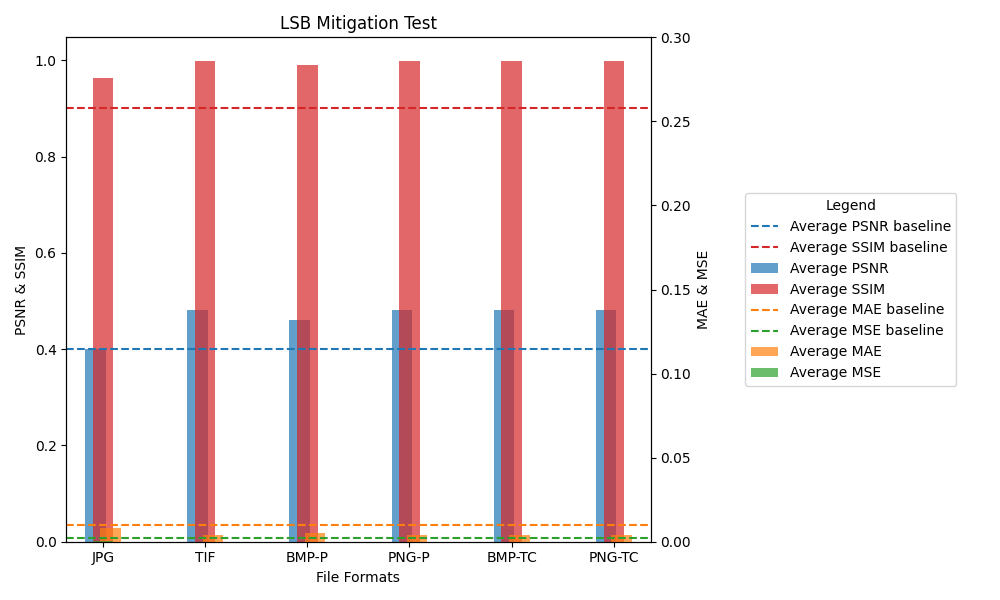

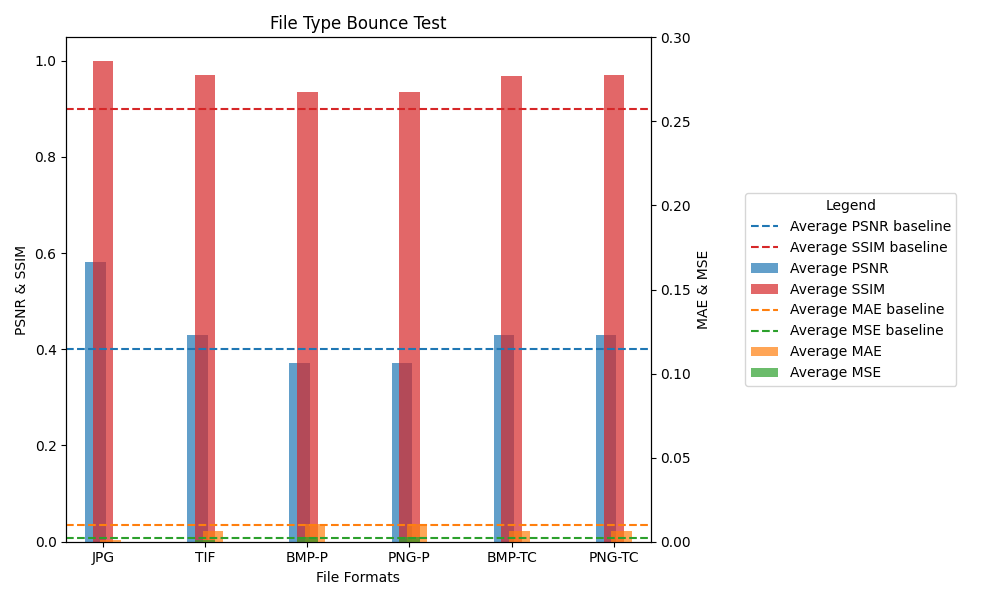

This mitigation method was tested against all 7 images in each of the 6 formats, a total of 42 images:

| LSB | Average PSNR | Average MAE | Average MSE | Average SSIM |
|---|---|---|---|---|
| JPG | 0.400437143 | 0.008164286 | 0.000292857 | 0.964072857 |
| TIF | 0.481410714 | 0.003907143 | 0.00001 | 0.997832857 |
| BMP-P | 0.461514286 | 0.00527 | 6.28571E-05 | 0.990738714 |
| BMP-TC | 0.481408571 | 0.003907143 | 0.00001 | 0.997832857 |
| PNG-P | 0.481489143 | 0.003901429 | 0.00001 | 0.997822857 |
| PNG-TC | 0.4814 | 0.003907143 | 0.00001 | 0.997822857 |

Table 6 – LSB stomping statistics table

Image 13 – LSB stomping statistics graph

Across the LSB, PVDS, DCT and SSIS IS methods this achieved full mitigation in all test cases where LSB 0 was included as part of the encoding range. The results demonstrate that this mitigation method yields statistical deviations that do not breach the baselines established for the HVS. The JPG images come close to the PSNR baseline but do not breach it.

However, these results are limited because LSB stomping only affects LSB 0. As LSB 0 steganography is well known to both the security industry and malicious actors, it is typically avoided wherever possible, both to evade steganalysis and to ensure robustness. As LSB 0 values are likely to change during routine interchanges or maintenance operations, attackers can either avoid the LSBs altogether or opt to encode into LSB 1 or 2 instead, accepting the higher chance of detection. Other more advanced IS methods outlined may use higher LSB values but are very specific about where they encode, which could sidestep this mitigation. When the experiment was repeated with encoding methods that specifically avoided LSB 0, the mitigation failed in all cases.

Stomping any bit higher than LSB 0 would result in considerable alteration of the colour space between the original and resulting image, so this was not something we considered viable. Therefore, despite these results being promising, the mitigation method was considered too limited to be effective.

DCT coefficient manipulation

Considering DCT steganography, we decided to try a targeted approach against JPEG images, which support this IS method. Manipulation of the image’s coefficients is possible, and the initial consideration was to perform coefficient stomping like the LSB stomping mechanism used in the first experiment. However, multiplying the DCT coefficients by a factor instead seemed more effective at minimising disruption to the image while replicating the distributed modification the original IS mechanism would have achieved.
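The idea of scaling coefficients by a factor can be sketched on a single 8x8 block as below. This is an assumption-heavy illustration, not the experiment's implementation: it uses a pure Python orthonormal DCT-II rather than the FFT or DCT libraries used in testing, it works on raw pixel blocks rather than a JPEG file's stored coefficients, and the `keep_dc` option (leaving the block average untouched) is a hypothetical design choice. All function names are illustrative.

```python
import math

N = 8  # standard JPEG block size


def _dct_matrix(n=N):
    """Build the orthonormal DCT-II basis matrix C, so that F = C * B * C^T."""
    rows = []
    for u in range(n):
        alpha = math.sqrt(1 / n) if u == 0 else math.sqrt(2 / n)
        rows.append([alpha * math.cos((2 * x + 1) * u * math.pi / (2 * n))
                     for x in range(n)])
    return rows


def _matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def _transpose(m):
    return [list(row) for row in zip(*m)]


C = _dct_matrix()
CT = _transpose(C)


def dct2(block):
    """Forward 2D DCT of an 8x8 block."""
    return _matmul(_matmul(C, block), CT)


def idct2(coeffs):
    """Inverse 2D DCT of an 8x8 coefficient block."""
    return _matmul(_matmul(CT, coeffs), C)


def scale_coefficients(block, factor, keep_dc=True):
    """Multiply the block's DCT coefficients by `factor` and return new pixels."""
    coeffs = dct2(block)
    scaled = [[c * factor for c in row] for row in coeffs]
    if keep_dc:
        scaled[0][0] = coeffs[0][0]  # preserve the block's average value
    pixels = idct2(scaled)
    # Clamp back into the 8-bit range after rounding.
    return [[min(255, max(0, round(p))) for p in row] for row in pixels]
```

A factor of 1.0 returns the block unchanged, while larger factors spread the change across every pixel that contributes to a non-zero frequency, which is the distributed alteration described above.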

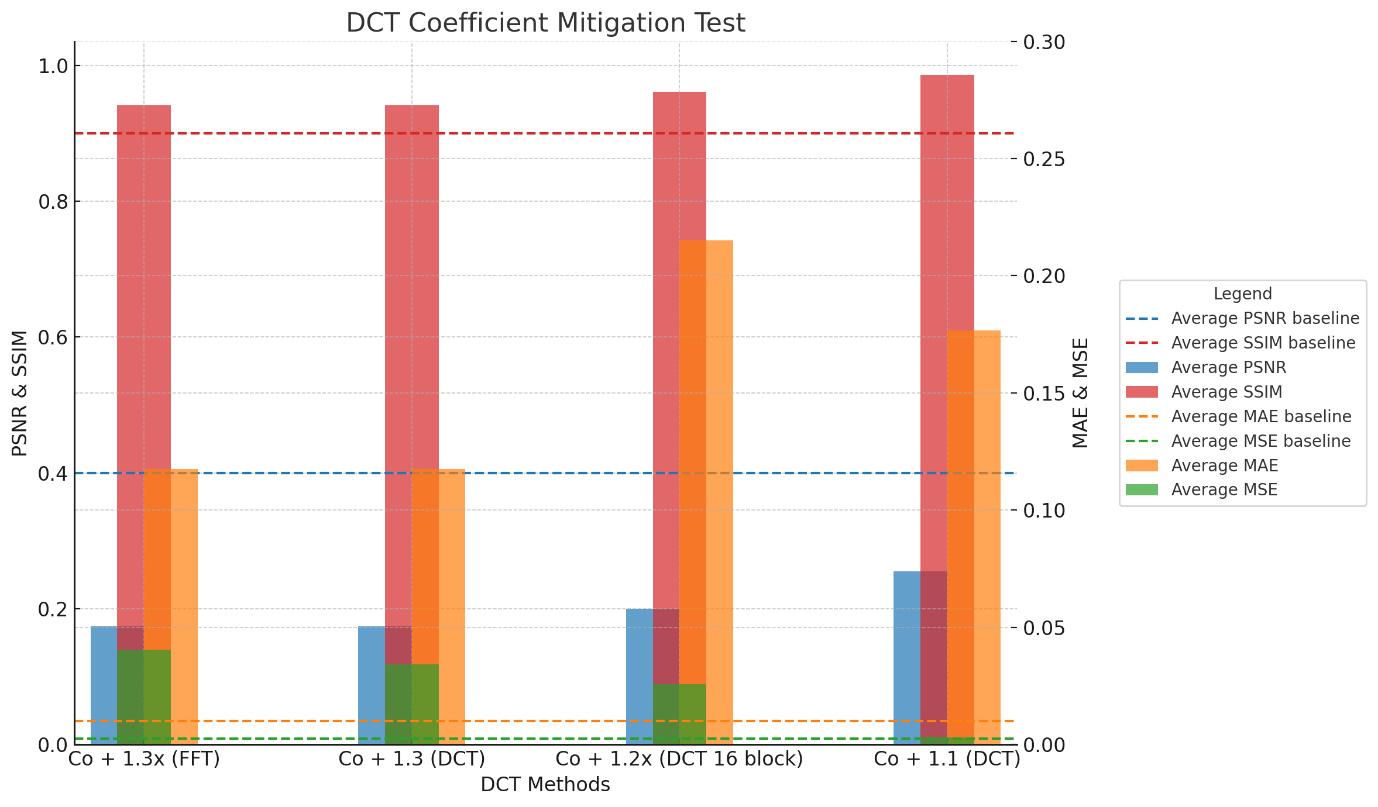

The mitigation method was tested with varying magnitudes of modification against a set of JPEG images. A magnitude of 1.3x was tested twice using two different libraries, FFT and DCT respectively. The other magnitudes were tested using the DCT library only. The results:

| DCT | Average PSNR | Average MAE | Average MSE | Average SSIM |
|---|---|---|---|---|
| 1.3x (FFT) | 0.174518571 | 0.117555714 | 0.040518429 | 0.941655714 |
| 1.3x (DCT) | 0.174499714 | 0.11759 | 0.034148143 | 0.941644714 |
| 1.2x (DCT 16 block) | 0.20029 | 0.215055714 | 0.025751429 | 0.961205 |
| 1.1x (DCT) | 0.254948571 | 0.176668429 | 0.00294 | 0.986185 |

Table 7 – DCT alteration statistics table

Image 14 – DCT alteration statistics graph

The first thing to note is the difference between the first two rows, where the same magnitude was applied using two different libraries. This is due to the slightly different methods each uses to effect the coefficient changes. Of the two, the DCT library was found to have less impact and was therefore preferred.

In all tests, messages embedded using all IS methods were corrupted, achieving full mitigation, but the images also showed significant deviations observable by the HVS. This is most accurately represented by the high MSE and low PSNR values. As the magnitude decreases, these statistics begin to move back towards the HVS baselines. The third test changed the DCT block size used for modification from 8x8 to 16x16, doubling the standard DCT block size. Here the MAE increased drastically despite the lower magnitude used, so this modification was not adopted for other magnitudes. A consistently positive statistic is SSIM, which indicates that the frequency alterations affect pixels in a general fashion rather than a targeted one. Targeted alterations can alter the perceived structure of what is in the image, whereas general modifications made in the frequency domain do not have the same impact, as the colour space alters more uniformly.

However, even at the lowest coefficient modification the PSNR value did not rise above 0.255. At a magnitude of 1.1x the visual impact was not dramatic, but it was significant enough that any sensitive images would be considered corrupt. Therefore, this method was not preferred over the other methods.

Noise reduction filter

The noise reduction filter considered here is a median filter. Median filters take a user defined value which determines the kernel size to be used. The kernel then scans across the pixels of an image in an overlapping fashion; at each position the median of the pixel values under the kernel is calculated and assigned to the central pixel. Median filters retain sharp edges in images whilst removing outlier/anomalous pixel values. Effectively, this filter has a high degree of image quality retention at the cost of a more limited effect on the range of pixels and noise it can correct/alter.
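The median filter described above can be sketched for a single greyscale channel as follows. The border handling (using only the part of the kernel that lies inside the image) and the use of the lower median for even-sized windows are assumptions of this sketch, as is the function name `median_filter`; production libraries may pad or mirror the edges instead.

```python
from statistics import median_low


def median_filter(image, kernel_size=3):
    """Apply a square median filter to a greyscale image (list of rows)."""
    if kernel_size % 2 == 0:
        raise ValueError("kernel_size must be odd")
    radius = kernel_size // 2
    height, width = len(image), len(image[0])
    out = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            # Collect the neighbourhood, clipped to the image bounds.
            window = [image[j][i]
                      for j in range(max(0, y - radius), min(height, y + radius + 1))
                      for i in range(max(0, x - radius), min(width, x + radius + 1))]
            # median_low always returns a value that exists in the window.
            out[y][x] = median_low(window)
    return out
```

A lone outlier surrounded by similar values is replaced by its neighbours' value, while a clean vertical edge passes through unchanged, which illustrates both the noise removal and the edge retention properties.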

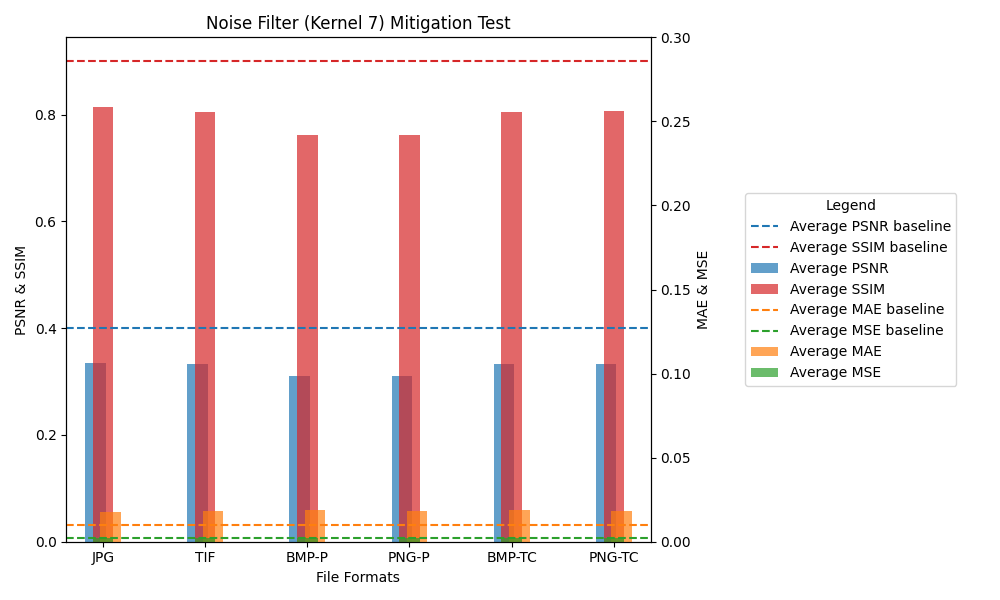

As PVDS and SSIS specifically target edge and noise values, a noise reduction filter was a targeted attempt to address these two IS methods. Our original experiment attempted altering the images using a kernel with a size of 7x7, the idea being that a larger kernel would create a more even modification at the cost of higher calculation resources. The results:

| Noise Filter 7 | Average PSNR | Average MAE | Average MSE | Average SSIM |
|---|---|---|---|---|
| JPG | 0.335361429 | 0.017911429 | 0.001983429 | 0.814094286 |
| TIF | 0.33301 | 0.018072857 | 0.001984286 | 0.805405286 |
| BMP-P | 0.310104286 | 0.01861 | 0.002164286 | 0.762373 |
| PNG-P | 0.310161429 | 0.018565714 | 0.002162857 | 0.762915857 |
| BMP-TC | 0.333041429 | 0.019057143 | 0.001982857 | 0.80549 |
| PNG-TC | 0.333137143 | 0.018004286 | 0.001978571 | 0.806305714 |

Table 8 – Noise reduction filter with kernel size 7 statistics table

Image 15 – Noise reduction filter with kernel size 7 statistics graph

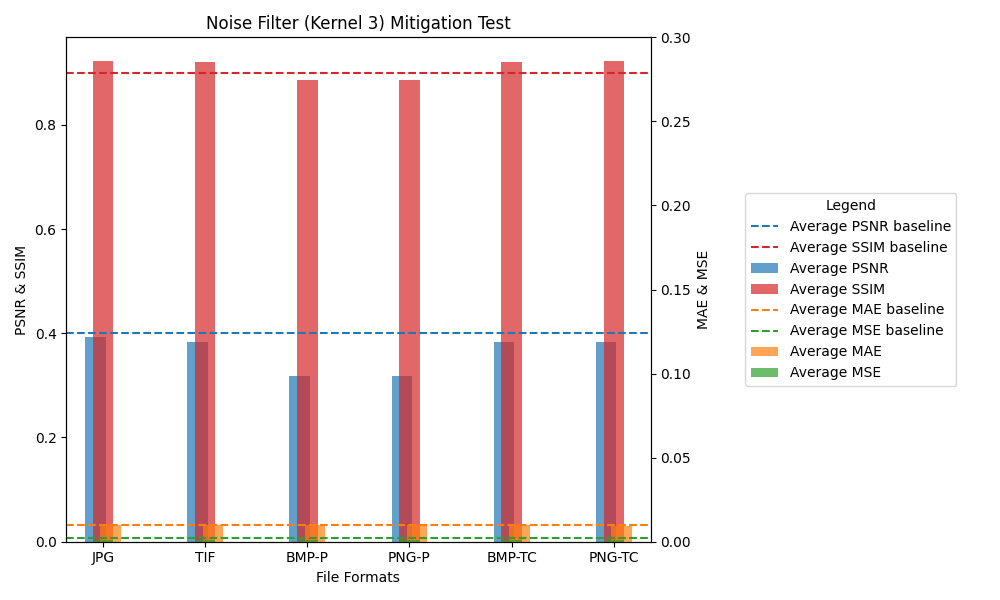

This filter achieved full mitigation against PVDS and SSIS as well as the other IS methods employed, with the exception of LSB, where mitigation did not always succeed depending on where the linear sequence was injected. As the statistics show, the resulting images were also degraded to a detectable degree. All statistics other than MSE, which is sensitive only to major pixel deviations, were in breach of their baselines. This indicated that this was a potential method but that adjustments to the alteration threshold were required. To this end we repeated the experiment with a smaller 3x3 kernel, making the median calculation more specific to a particular region of pixels.

The results:

| Noise Filter 3 | Average PSNR | Average MAE | Average MSE | Average SSIM |
|---|---|---|---|---|
| JPG | 0.393608571 | 0.010145714 | 0.000780029 | 0.921681429 |
| TIF | 0.383281429 | 0.009665714 | 0.000754286 | 0.920728571 |
| BMP-P | 0.317141571 | 0.010155714 | 0.000915714 | 0.885255714 |
| PNG-P | 0.317313 | 0.010107143 | 0.000912857 | 0.885791429 |
| BMP-TC | 0.383314286 | 0.009661429 | 0.000752857 | 0.92076 |
| PNG-TC | 0.383737143 | 0.009587143 | 0.00075 | 0.921502857 |

Table 9 – Noise reduction filter with kernel size 3 statistics table

Image 16 – Noise reduction filter with kernel size 3 statistics graph

The reduced kernel size and resulting more targeted pixel alteration again achieved full mitigation against PVDS, SSIS and all other IS methods, but with a much more promising statistical output. When palette tolerances are accounted for, the resulting statistics are much closer to the required baselines, but the PSNR and SSIM baselines are still breached. As the SSIM statistic directly corresponds to noise/corruption being introduced into the image in a perceptible way when compared to the original, this is a significant deviation. However, the results were much closer to the required limits than those of the larger kernel. When compared by the HVS it was difficult to perceive differences; however, as the experiments were conducted against a small sample set of images, the baselines are enforced so that they hold for all images. Due to these factors, this mitigation method was rejected as a potential general mechanism.

Smoothing filter

A smoothing filter is similar to a noise reduction filter but averages the colours over a specific kernel rather than taking a median. Smoothing is typically applied either to a specific region during photo editing or generally across an image to reduce noise and produce a “blur” effect. The filter is effective at removing artifacts/noise and can also be used as pre-processing for other functions such as edge detection, where removing minor variations in edge pixels makes the edges easier to detect. Whereas a noise reduction filter preserves and refines sharp edges, a smoothing filter has the inverse effect, slightly “blurring” the edges as well as other noise pixels in an image. This gives a more general noise reduction capability with the trade-off of a more significant alteration of the image.

A smoothing filter has a more general mitigation capability against a range of IS techniques because its alterations are less targeted. This means that, as opposed to a median filter, the average filter used in smoothing can be effective against all forms of IS rather than only those that focus on specific regions.

Another factor to consider is that smoothing filters can use either uniform or Gaussian weighted kernels, the latter making the alterations more relative to the central pixel and maintaining structural relativity. This is done by setting the weights of the kernel relative to the central pixel, with the central weight being significantly higher than those of the surrounding pixels. This means the influence of a pixel value on the resulting alteration decreases with its distance from the targeted pixel.
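Gaussian weighted smoothing can be sketched for a greyscale channel as below. The kernel weights shown are the widely used 1-2-1 approximation and are an assumption of this sketch, not necessarily the weighting used in the experiment; the border handling (renormalising over the in-bounds part of the kernel) and the name `smooth` are likewise illustrative.

```python
# Assumed 3x3 Gaussian approximation: the centre weight dominates and the
# influence falls off towards the corners.
GAUSSIAN_3X3 = [
    [1, 2, 1],
    [2, 4, 2],
    [1, 2, 1],
]


def smooth(image, kernel=GAUSSIAN_3X3):
    """Apply a weighted averaging kernel to a greyscale image (list of rows)."""
    radius = len(kernel) // 2
    height, width = len(image), len(image[0])
    out = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            total = 0
            weight_sum = 0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    j, i = y + dy, x + dx
                    if 0 <= j < height and 0 <= i < width:
                        weight = kernel[dy + radius][dx + radius]
                        total += weight * image[j][i]
                        weight_sum += weight
            # Normalise by the weights actually used so borders are not darkened.
            out[y][x] = round(total / weight_sum)
    return out
```

An isolated outlier is pulled strongly towards its neighbours while regions of uniform colour are left exactly as they were, so the filter alters an image less where neighbouring pixel values are already similar.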

In our experiment, we used a standard 3x3 kernel with Gaussian weighting. The weighting and results:

Matrix 1 – Gaussian weighting used in smoothing filter 3x3 kernel

| Smoothing | Average PSNR | Average MAE | Average MSE | Average SSIM |
|---|---|---|---|---|
| JPG | 0.415606714 | 0.009175714 | 0.000558581 | 0.944788571 |
| TIF | 0.408596714 | 0.008201429 | 0.000404948 | 0.960213571 |
| BMP-P | 0.378051571 | 0.009721429 | 0.000458043 | 0.945604286 |
| PNG-P | 0.378190143 | 0.00969 | 0.000458043 | 0.94584 |
| BMP-TC | 0.408627 | 0.008198571 | 0.000403661 | 0.960227143 |
| PNG-TC | 0.408958429 | 0.008147143 | 0.000402233 | 0.96059 |

Table 10 – Smooth filter application statistics table

Image 17 – Smooth filter application statistics graph

The results from this experiment were extremely promising, with all statistics within the baselines established for the HVS. When palette allowances are considered, all the statistical metrics are within the thresholds, and the effect observed on the files was minimal or unobservable when considered as a whole image. However, this was not directly reflected in the mitigation capabilities of the filter. It was found that LSB and PVDS IS using a linear sequence encoding could avoid mitigation in some cases where the image was simple. This was interpreted as a limitation of the filter’s ability to apply weighted alterations across an already similar colour range in a linear space.

Due to the use of Gaussian weighting, mitigation against techniques such as PVDS was weaker when they were used in a linear rather than edge targeted fashion. This indicated that minor variations in linear sequences are less likely to be mitigated than outlier pixel values, such as those in the noise and edges generated by more advanced techniques. As such, this method had a limited success profile but, so far, the most promising results statistically.

File bouncing

File formats can typically be broken down into two primary categories, lossy and lossless. In a lossless format the pixel information can be compressed and altered by the specification for storage, but upon rendering will always return the original pixel values regardless of the format transformation. Lossy formats instead allow for data loss to maximise compression and minimise the required space. One such format, mentioned in Chapter 2, is JPEG.

Due to this expected quality degradation in JPEG format transformation, we considered whether it could be a viable mitigation strategy and analysed the JPEG transformation process at a low level. The two primary steps of the transformation which cause image degradation are the colour translation and quantization phases.

When an image is converted to JPEG, two things happen: first, in the spatial domain, the colour space is converted to the JPEG standard, which is typically the YCbCr colour space; second, once the image has been converted to the frequency domain, quantization takes place. In YCbCr, Y is the luminance value and Cb and Cr are the blue-difference and red-difference chrominance components. This translation introduces artefacts into the colour space of the new image, as the RGB values are not represented completely accurately in the YCbCr colour space; however, the differences can be considered imperceptible to the human eye. Another consideration is that different systems can use different translation equations, meaning that an image created in the JPEG format by one system may have slight variations when created by another. These variations are typically in what range of values is permitted/expected and in how potential negative values are accounted for (by removing them).

Formula 10 – RGB to YCbCr conversion formula accounting for negative values

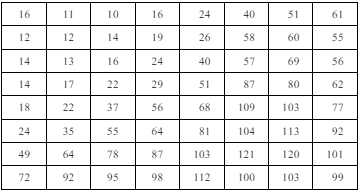
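The artefacts introduced by colour translation can be illustrated with a round trip through YCbCr. The sketch below uses the full-range coefficients from the JFIF convention, which is an assumption: as noted above, individual systems may use slightly different equations and ranges. The function names are illustrative.

```python
def _clamp(value):
    """Round to the nearest integer and keep the result inside 0..255."""
    return min(255, max(0, round(value)))


def rgb_to_ycbcr(r, g, b):
    """Full-range RGB to YCbCr conversion (JFIF-style coefficients, assumed)."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return _clamp(y), _clamp(cb), _clamp(cr)


def ycbcr_to_rgb(y, cb, cr):
    """Inverse conversion back to RGB, again rounded and clamped."""
    r = y + 1.402 * (cr - 128)
    g = y - 0.344136 * (cb - 128) - 0.714136 * (cr - 128)
    b = y + 1.772 * (cb - 128)
    return _clamp(r), _clamp(g), _clamp(b)


def round_trip(rgb):
    """Convert an RGB triple to YCbCr and back again."""
    return ycbcr_to_rgb(*rgb_to_ycbcr(*rgb))
```

Neutral greys survive the round trip, but saturated colours such as pure red come back slightly altered because of rounding and clamping. These small shifts are invisible to the eye yet are enough to disturb values that a steganographic payload depends on.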

Quantization can be a mechanism applied to the frequency domain of the image after it has undergone DCT transformation. Once the image has been broken down into (typically) 8x8 grids of coefficients representing 8x8 grids of pixels, quantisation can be applied to squash higher frequencies in that grid. The coefficients represent a range of oscillating frequencies relative to the values of the pixels in the original image. Lower frequency coefficients represent base information like large area colour bases for the image whilst higher frequency coefficients represent fine detail such as edges or sharp changes.

Higher frequencies are targeted in this step as the HVS is less adept at detecting reductions in fine detail than in base detail, so changes there are less likely to cause perceptible degradation. The quantization itself is conducted using an 8x8 “quantization matrix” made up of pre-set values. The JPEG specification “itu-81 – Section K.1” [2] outlines an example base matrix for luminance quantization with 50% quality retention:

Image 18 – ITU-81 JPEG specification basic quantization matrix [2]

The matrix is then applied to the coefficients of a block by dividing each coefficient by the corresponding quantization weight and rounding to the nearest integer. These values are what are then used in compression. When the image is rendered, the quantized values are multiplied by the values of the quantization matrix to reconstruct the DCT coefficients. However, the rounding operation during quantization leads to deviations between the original and reconstructed frequency values. In the frequency domain matrices, the lowest frequency is represented at entry 0,0 (top left) and the highest at entry 7,7 (bottom right). This is reflected in the quantization table above, with the weights trending upwards towards the higher frequency coefficients, meaning they are impacted more.
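The divide, round and multiply cycle can be sketched as follows. The table is the example luminance matrix from the JPEG specification's Annex K; the coefficient values used to exercise it are invented for illustration, and the function names `quantize` and `dequantize` are hypothetical.

```python
# Example luminance quantization table from the JPEG specification (Annex K).
LUMA_Q50 = [
    [16, 11, 10, 16, 24, 40, 51, 61],
    [12, 12, 14, 19, 26, 58, 60, 55],
    [14, 13, 16, 24, 40, 57, 69, 56],
    [14, 17, 22, 29, 51, 87, 80, 62],
    [18, 22, 37, 56, 68, 109, 103, 77],
    [24, 35, 55, 64, 81, 104, 113, 92],
    [49, 64, 78, 87, 103, 121, 120, 101],
    [72, 92, 95, 98, 112, 100, 103, 99],
]


def quantize(coeffs, table=LUMA_Q50):
    """Divide each DCT coefficient by its weight and round to an integer."""
    return [[round(c / q) for c, q in zip(c_row, q_row)]
            for c_row, q_row in zip(coeffs, table)]


def dequantize(levels, table=LUMA_Q50):
    """Multiply quantized levels by their weights to rebuild coefficients."""
    return [[level * q for level, q in zip(l_row, q_row)]
            for l_row, q_row in zip(levels, table)]
```

A coefficient only survives unchanged when it is an exact multiple of its weight, and any coefficient smaller than half its weight collapses to zero. With weights near 100 in the bottom right of the table, most high frequency detail is removed while low frequency values shift by only a few units.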

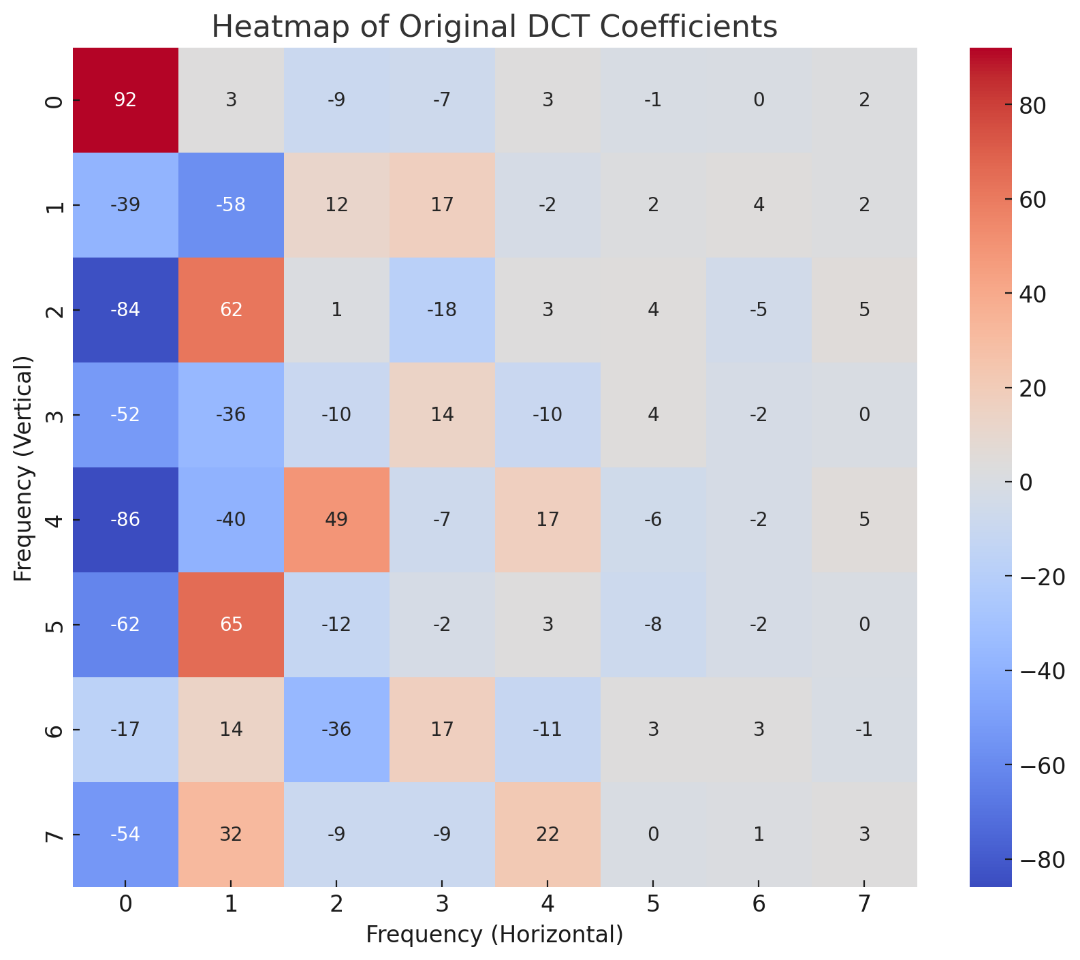

A practical example is provided below:

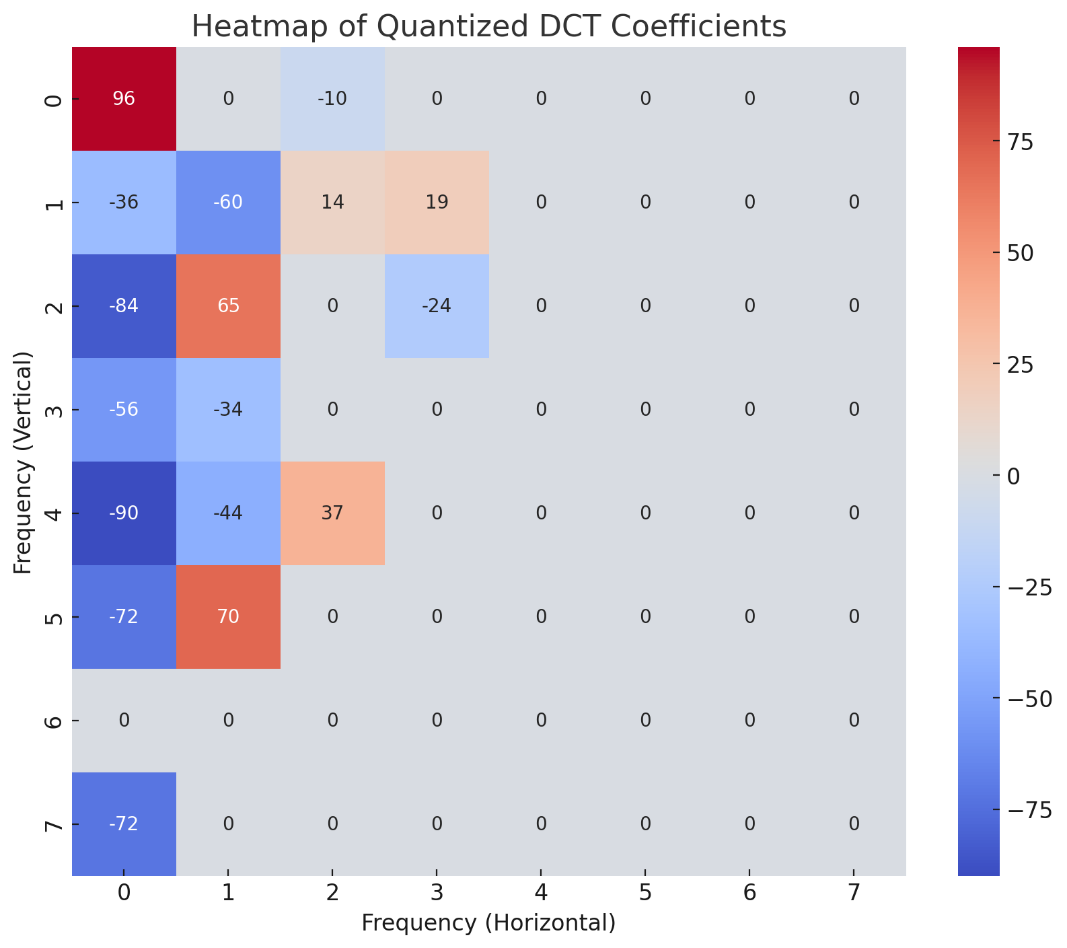

Image 19 – Original DCT coefficients of image

Image 20 – Quantized DCT coefficients of image after reconstruction

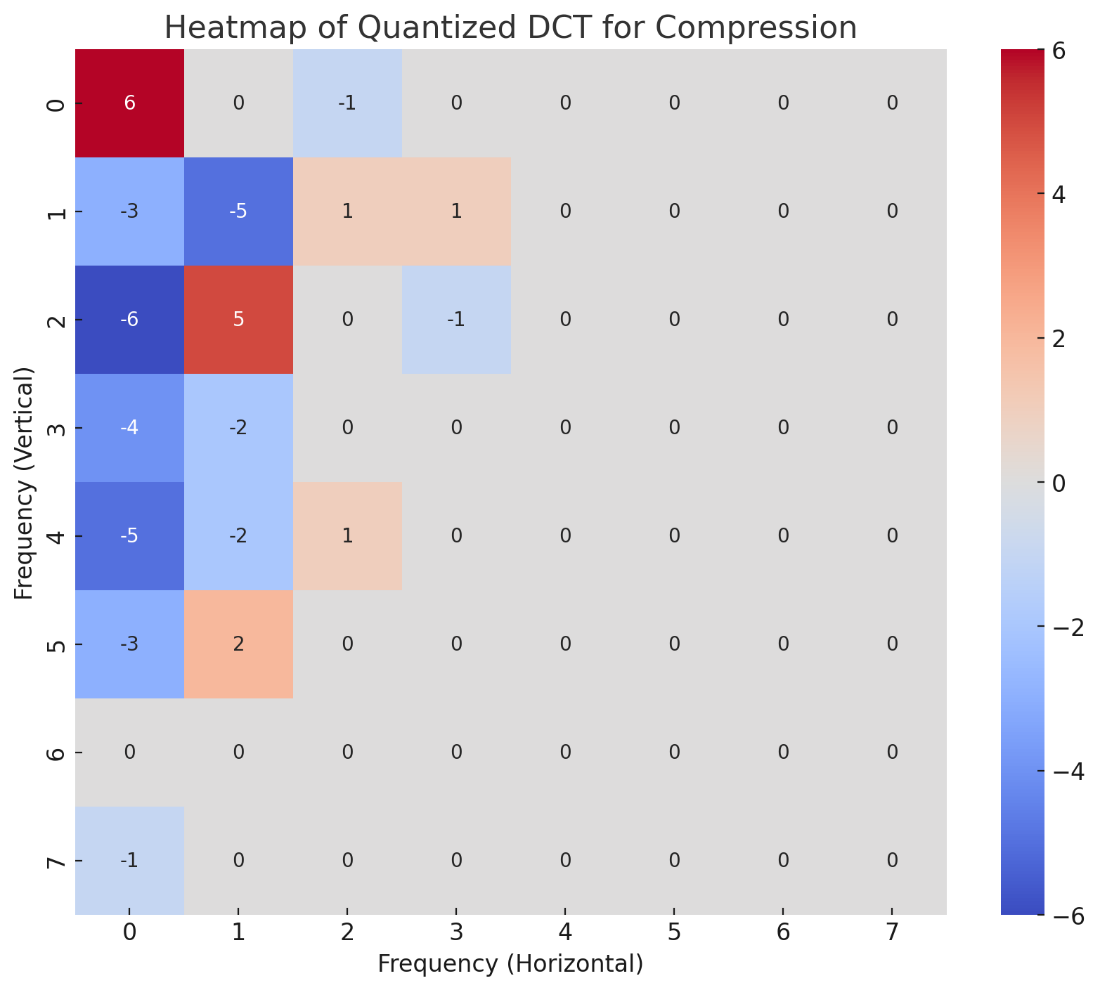

The two heatmaps above show the coefficient values generated during the DCT translation of a standard image. From top left to bottom right, these values indicate the low to high frequencies which occur in an 8x8 pixel grid. The second image shows the same coefficients after quantization has taken place and the coefficients have been reconstructed. In the second heatmap, we can see that most high frequency coefficients have been resolved to 0 because of the weighted values in the quantization matrix. Additionally, even the lower frequency values have been adjusted slightly, with only the value at “0,2” retaining its original value of -84. This results in a distributed alteration of the pixel values that are generated when converting from the frequency domain back to the spatial domain.

This loss of quality is accepted as part of the JPEG format to allow for maximum compression. As most of the matrix values resolve to 0, techniques such as Huffman coding can achieve a high level of compression. Additionally, the remaining coefficients in their compressible form normally fall between 0 and +/-10, further adding to compression capability. What is actually compressed is the quantized DCT coefficients before they are reconstructed by multiplying by the quantization matrix. The compressed heatmap in this case looks like:

Image 21 – Quantized DCT coefficients for compression

The quantisation values of the matrix can be adjusted via a “quality” variable, which can be specified by a user. This variable adjusts the weighting of the quantization matrix to perform higher or lower weighted quantization against the coefficients depending on the tolerance for deviation.
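How a quality variable reshapes the quantization weights depends on the library. The sketch below follows the scaling convention used by the IJG libjpeg reference implementation, which is an assumption here, as the library used for the experiments is not stated. The sample weights are the first row of the example luminance matrix, and the function name `scale_weights` is illustrative.

```python
# First row of the example luminance quantization matrix, used as sample input.
SAMPLE_WEIGHTS = [16, 11, 10, 16, 24, 40, 51, 61]


def scale_weights(weights, quality):
    """Scale quantization weights for a 1..100 quality setting (IJG-style)."""
    quality = max(1, min(100, quality))
    # Below 50 the weights grow quickly; above 50 they shrink towards 1.
    scale = 5000 // quality if quality < 50 else 200 - 2 * quality
    scaled = []
    for weight in weights:
        value = (weight * scale + 50) // 100
        scaled.append(max(1, min(255, value)))  # keep weights in baseline range
    return scaled
```

Under this convention a quality of 50 leaves the base matrix unchanged, while higher quality values reduce the low frequency weights to 1 or 2, so those coefficients are barely rounded. Rounding still occurs wherever a weight is above 1, which is what leaves the small distributed alterations to pixel values.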

So, if we bounce a lossless file to a lossy format and back again, we can expect the values of the pixels to be affected. If we bounce a lossy file to a lossless format and back to lossy, we can also expect pixel values to be changed as a result. This is due to the colour translation phase introducing artefacts and potentially using a slightly different equation as well as the quantisation phase potentially using slightly different matrix values, either due to base values being different or the quality variable being different.

In this experiment, we conducted file type bouncing with a quality variable of 95, with the following results:

| File Bounce | Average PSNR | Average MAE | Average MSE | Average SSIM |
|---|---|---|---|---|
| JPG | 0.581620857 | 0.000790899 | 8.60089E-06 | 0.998330143 |
| TIF | 0.430017143 | 0.006327143 | 0.000131429 | 0.968938286 |
| BMP-P | 0.371294286 | 0.010245714 | 0.000257143 | 0.9341 |
| PNG-P | 0.37125 | 0.010255429 | 0.000257143 | 0.9336 |
| BMP-TC | 0.429988571 | 0.006307143 | 0.000131429 | 0.968898571 |
| PNG-TC | 0.430584286 | 0.006262857 | 0.00013 | 0.969382857 |

Table 11 – File Bouncing statistics results

Image 22 – File Bounce statistics graph

This resulted in universal mitigation across all IS methods, low impact on the resulting image with palette accommodation, and a low resource overhead. Statistically this mitigation method did not breach any set baseline and did not result in any perceivable difference between the original and resulting image as judged by the HVS. The method has the additional benefit of leveraging a technology which has been refined over a long period to make it efficient and swift, with the conversion operations consuming very little local resources compared with more mathematically intense filters such as a median filter.

As these results were achieved with the quality retention variable set at 95, further degradation of the image is not required. As such, 95 can be used as an effective base value for this filter; additional degradation may be employed in environments with a lower risk tolerance.

Additional Mitigation Details

Assessment of the statistical output related to degradation and the binary value of whether steganography mitigation was achieved identified some potential mitigation methods. In some cases, targeted methods of mitigation for specific IS methods can be too costly in terms of image degradation or too specific to be effective in all cases of the IS method being targeted. As such, general methods of mitigation can be more effective in both HDS corruption and image quality retention.

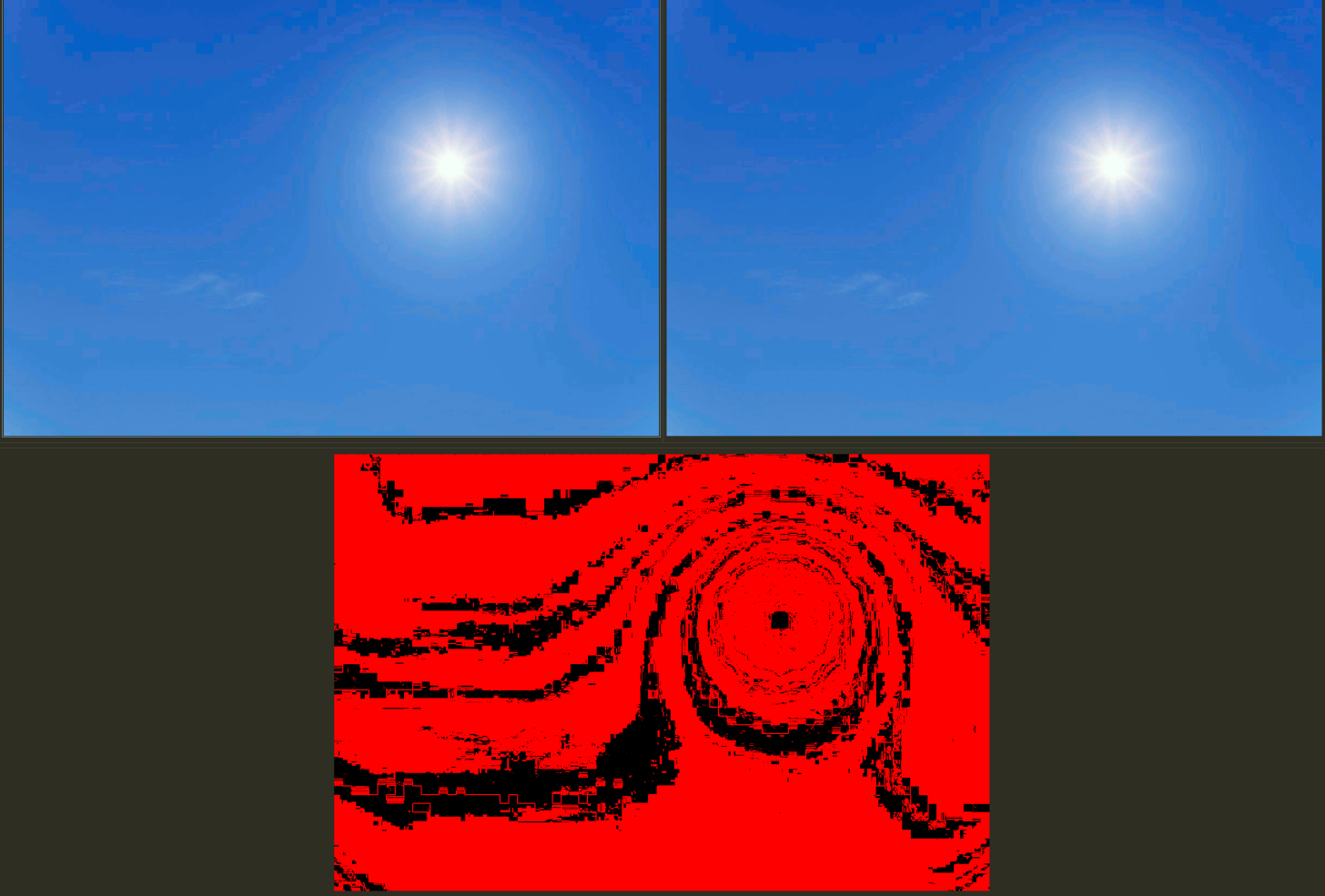

File bouncing can be considered a successful mitigation method due to its distributed alteration of pixel values, its removal of noise (high frequencies) and its exceptional retention of quality. As this method has a low overhead, it is practical for large-scale implementation or as a routine operation. The pixels affected by this process, as observed using a data comparison utility, are shown in the following image.

Image 23 – File bouncing pixel alteration map

The smoothing filter is an effective method due to its focus on noise reduction and its universal applicability with relatively low impact. As the resulting image is more degraded relative to file bouncing, this method may be more practical in some use cases than others. Its mitigation effectiveness is also extremely high, with only some edge case implementations of IS being partially mitigated or not mitigated. The filter’s application is also extremely fast with a low overhead, so it can be applied swiftly to many images.

Image 24 – Smooth filter pixel alteration map

Finally, palette shuffling can be considered a standard practice for all palette based images. As the rendered image is identical to the original, because the colour values are not altered between the two versions, it has no negative effects on the processed image. As it mitigates IS using basic palette based methods, there should be no issues with its deployment in most cases. However, as it does not account for potential resequencing, it should be paired with a method which directly alters the colour values within the palette to ensure improved mitigation.

Image 25 – Palette based image file bouncing pixel alteration map

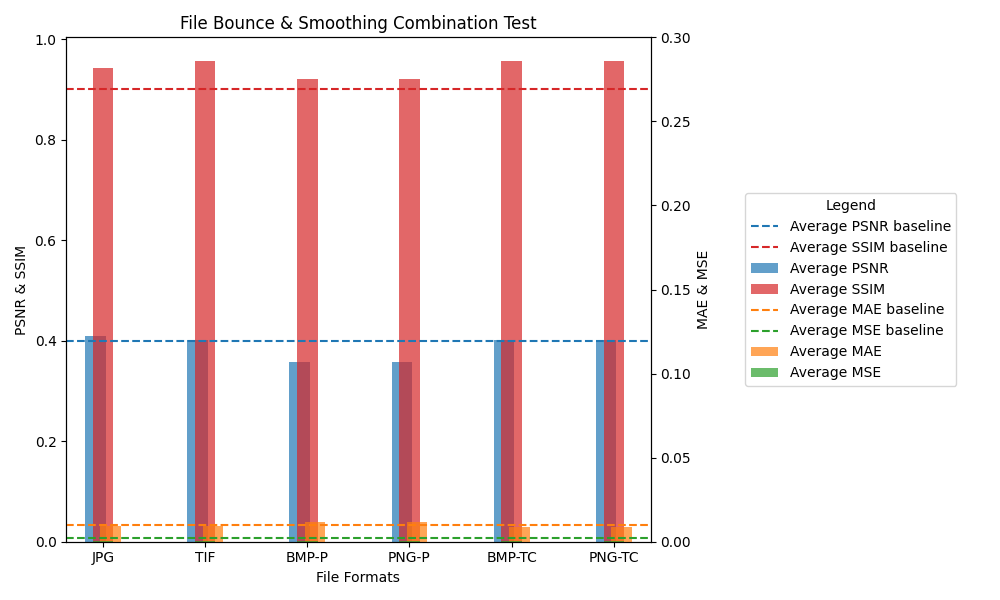

When testing the combination of file bouncing and smoothing, it was found that the resulting image when averaged over the test set did not breach the benchmarks. This shows that they can be used safely in combination with increased, but not significant, visual degradation.

| Smooth & Bounce | Average PSNR | Average MAE | Average MSE | Average SSIM |
|---|---|---|---|---|
| JPG | 0.410240571 | 0.009467143 | 0.000571441 | 0.942757143 |
| TIF | 0.401245714 | 0.009065714 | 0.000418686 | 0.95563 |
| BMP-P | 0.357571429 | 0.011932857 | 0.000535714 | 0.920425714 |
| PNG-P | 0.357585714 | 0.011922857 | 0.000534286 | 0.920384286 |
| BMP-TC | 0.401408571 | 0.008805714 | 0.000417257 | 0.955687143 |
| PNG-TC | 0.401357143 | 0.008831429 | 0.000417257 | 0.955425714 |

Table 12 – File Bouncing & Smoothing combination statistics

Image 26 – File bounce & smoothing combination statistics graph

Weaponizing statistics against IS

How this can be used in general

These mitigation methods can be applied to almost any image file encountered. This can be done on a file by file basis or for entire directories or file streams. For these batched image files, this would achieve a high level of confidence that any IS present is corrupted, while the resulting image remains just as legible and structurally similar to the original.

When integrating mitigation there are several considerations. Certain image types have structural capabilities that differ from other formats, which need to be accounted for. GIF, which can hold multiple panels/images in the same file, is one such format that may require special handling. In addition, the tools and libraries which implement these image processing methods may not all work to the same standard calculations or base values when calculating alterations. As such, it is important when deploying such mitigation that the mechanisms employed are fully understood and that the internal metrics and equations are in line with the expected results.

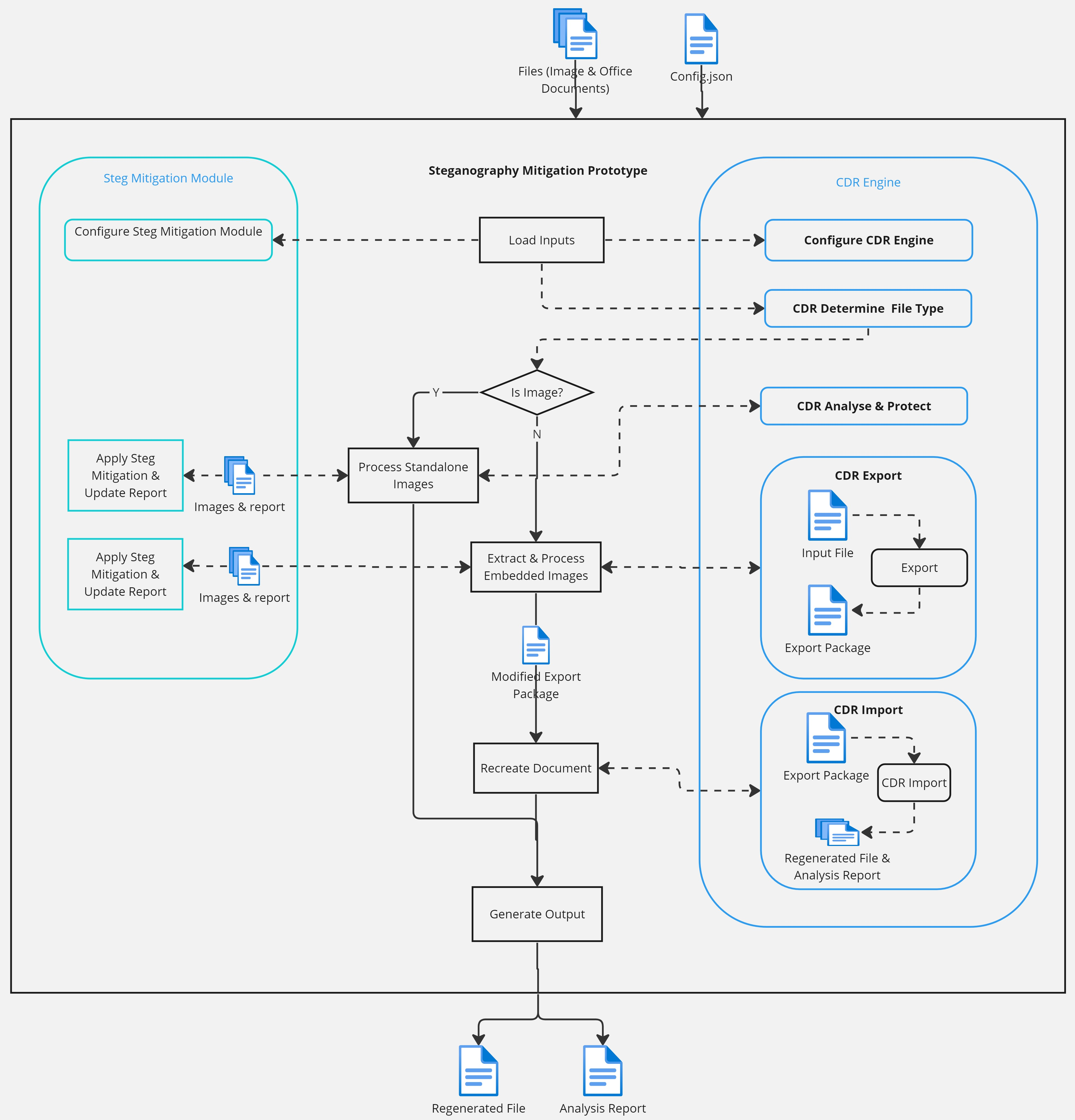

Pairing with Content Disarmament and Reconstruction technology – IS has nowhere to hide

The IS solutions described here can be used with CDR technology. In particular, the file handling and specification adherence used in a CDR system can be incorporated into the solution. As CDR can be tailored to handle a wide variety of file formats, including image types, we can ensure that the internal structure is as we expect and analyse each component individually. This ensures that the image is within specification and is not corrupt or masquerading as something else. It also allows the handling of file type specific use cases such as multiple panels in the case of GIF, dynamic palette sizes and images using transparency palettes.

A benefit to incorporating CDR technology to the mitigation process is the handling of embedded images. Image files may be transmitted as standalone files; however, it is increasingly common that the image may be embedded within another file such as an Office document, PDF, or even an email file. CDR has the capacity to extract/export the embedded images, process them as standalone files and then reintegrate/import them to the original document without any deviation being detected. This is a complex process which is extremely difficult to achieve especially when binary format files are considered such as those in old Microsoft Office documents.

Although extraction and mitigation of embedded images may be possible without CDR, it is highly likely that the resulting document will either have the images removed or be heavily modified. This is normally caused by misalignments in the graphical representation but can also be caused by errors in the memory offset of the embedded files within the binary structure of such files. CDR accounts for all these cases and allows for mass processing of both standalone and document files.
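For the simpler, ZIP-based Office formats, the extract, process and reintegrate cycle can be sketched with the Python standard library alone. This is a minimal sketch and not the CDR implementation: the function name, the `transform` callback and the list of media folders are assumptions made for illustration. It relies on the conventional media folder names rather than following the document's relationship parts, it assumes the transform returns an image of the same format and dimensions, and it does not address the binary formats discussed above.

```python
import copy
import io
import zipfile
from typing import Callable, Tuple


def rewrite_embedded_images(
    doc_bytes: bytes,
    transform: Callable[[str, bytes], bytes],
    media_prefixes: Tuple[str, ...] = ("word/media/", "ppt/media/", "xl/media/"),
) -> bytes:
    """Rebuild a ZIP-based document, passing each embedded media part through
    `transform` (hypothetical callback: part name and bytes in, bytes out)."""
    out = io.BytesIO()
    with zipfile.ZipFile(io.BytesIO(doc_bytes)) as src, zipfile.ZipFile(out, "w") as dst:
        for info in src.infolist():
            payload = src.read(info.filename)
            if info.filename.startswith(media_prefixes):
                # Treat the embedded image as a standalone file for mitigation.
                payload = transform(info.filename, payload)
            # Reusing a copy of the entry metadata keeps the part name, order
            # and compression method the same as in the source archive.
            dst.writestr(copy.copy(info), payload)
    return out.getvalue()
```

All non-media parts are copied through unchanged, which is what keeps the rest of the document intact in this simple case.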

Image 27 – CDR processing flow chart for both standalone and document files

An additional benefit of CDR integration is the use of security profiles based on risk tolerance. Whereas automated deployment may be effective on batches, migration of files between domains with potentially different risk tolerances can be complex. CDR is designed to sit on domain boundaries, with each boundary having its own configuration. As such, we can employ palette shuffling on files entering high tolerance domains, and palette shuffling, file bouncing and image smoothing on migration to low tolerance domains. Filter intensities can also be adjusted per profile; in the case of file bouncing, for example, the quality variable can be decreased in line with risk tolerance to achieve a higher level of distortion on the images. This means that where security rather than usability is the primary concern, alterations can be made appropriately.
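The per-boundary configuration described above could be expressed along the following lines. This is a sketch under stated assumptions: the profile names, step identifiers and quality values are all hypothetical and chosen purely for illustration, not taken from any product configuration.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class BoundaryProfile:
    """Mitigation steps applied at one domain boundary (all names hypothetical)."""
    steps: Tuple[str, ...]
    bounce_quality: int = 90  # illustrative value, only used by "file_bounce"


# Illustrative profiles: lighter processing for a high tolerance domain,
# the full set of mitigations for a low tolerance domain.
PROFILES: Dict[str, BoundaryProfile] = {
    "high_tolerance": BoundaryProfile(steps=("palette_shuffle",)),
    "low_tolerance": BoundaryProfile(
        steps=("palette_shuffle", "file_bounce", "image_smoothing"),
        bounce_quality=60,  # lower quality gives a higher level of distortion
    ),
}


def plan_mitigations(domain: str) -> List[Tuple[str, dict]]:
    """Return the ordered (step, parameters) plan for files entering `domain`."""
    profile = PROFILES[domain]
    plan = []
    for step in profile.steps:
        params = {"quality": profile.bounce_quality} if step == "file_bounce" else {}
        plan.append((step, params))
    return plan
```

Keeping the profile as data rather than code means the same processing pipeline can be deployed on every boundary, with only the configuration differing by risk tolerance.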

Conclusion

From our analysis, we have assessed and understood some of the most prevalent IS methods that are widely available today. Our assessment shows that the ways IS encodes data into images are varied, with differing levels of complexity depending on their aversion to detection or anticipated scrutiny. As such, a general mitigation solution can be a valuable tool in any security arsenal to proactively defend against such secret communications channels.

As described herein, mitigations can be applied in novel ways to address different IS methods. Malicious actors may not expect encoded images to be put through image filters and/or type conversions as a default action. Although they may encounter compression in some systems, most file transfer operations do not conduct any image-specific operations. As such, they have been able to use IS at will, with consideration for their target’s security infrastructure.

Employing these mitigation methods as a standard against all images that enter an estate will eliminate this covert channel in most cases, if not all of them. As described herein, CDR with IS mitigation can address the use case of embedded files.

As attackers typically try to blend in with anticipated traffic, sending an Office document to a recipient on a regular basis, as opposed to an image, is less likely to draw attention. Additionally, embedded images are much harder to deal with due to the inherent issue of extraction and reapplication. Reapplication is the primary issue, as any slight modification to images in documents can cause multiple formatting issues which can make the documents incomprehensible or, even worse, corrupt.

CDR technology holds a potential solution to this by being built specifically to achieve this extraction and reapplication process at scale. By incorporating the image processing technologies described herein into the control flow of a CDR verification operation, IS mitigation can be achieved in many if not all formats whether they be standalone or embedded. This leaves malicious actors trying to use IS with nowhere to hide.