Author: Connor Morley (Principal Malware Security Researcher)

Purpose outline

This document provides a summary of Glasswall’s research into the threats posed by polyglot files including how they are created and how they can be effectively mitigated. It is intended to offer readers:

- The threat posed by polyglots, their malicious application, and reasoning for use in operations.

- Limitations in mitigation via existing/traditional security systems which depend on file type determination.

- Information on how polyglots are used in real-world scenarios as well as hypothetical deployments.

- An explanation of the various types of polyglot creation methods as well as their strengths and weaknesses.

- A technical description of how polyglots can be created using various file formats as the container.

- An understanding of the Glasswall CDR approach to neutralise polyglot threats in processed documents.

- An overview of the progressive research conducted at Glasswall to address both polyglots and other future threats.

Summary

This research explores polyglot file structures and evaluates how Glasswall's CDR technology can mitigate their risks during file processing. Our initial focus is on image formats due to their simpler structures, allowing for rapid advancements in this research area.

The primary goal of this project is to develop a universal approach to mitigating polyglots based on their core file type, detecting file type variations, and ultimately extracting polyglot elements - prioritized in that order.

Here we examine different polyglot variants, their deployment methods, and additional obfuscation techniques, and also provide insights into how polyglots are actively created, offering valuable knowledge for threat mitigation.

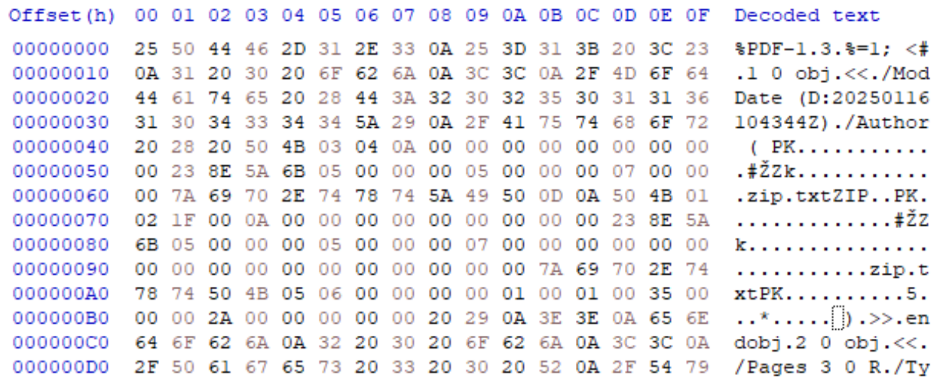

What are polyglots?

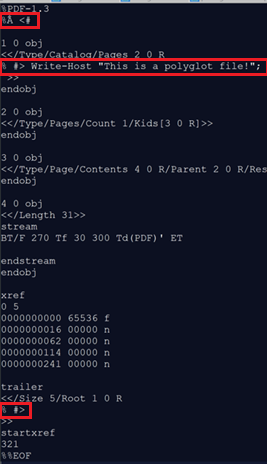

The term polyglot in common terminology refers to someone who speaks/reads multiple languages. Therefore, someone can be a polyglot if they can integrate themselves into various cultures or social circles. In a technological sense, polyglots are files which can be interpreted as multiple file types whilst all residing in the same file system entry/memory space. This results in a file which, when opened in an image viewer, displays a picture, when run through Adobe Acrobat, displays a PDF document and, when run through a decompression tool, unpacks as a compressed archive.

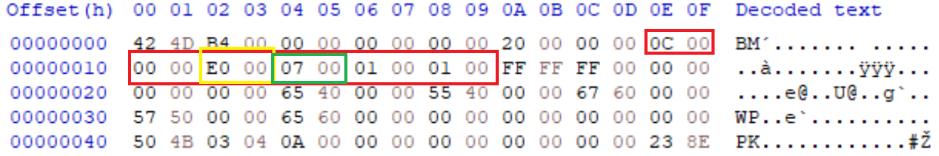

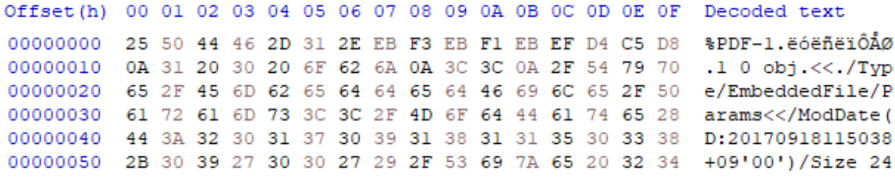

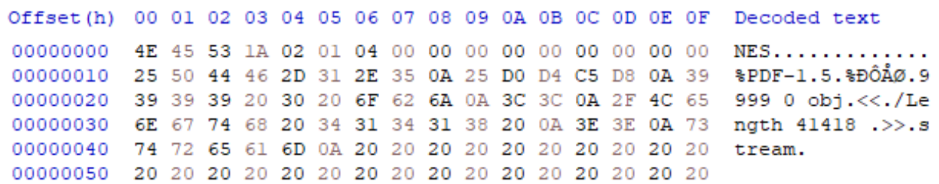

These files are possible due to quirks in the specification of various file types, as well as the exception tolerance of many script interpreters. A prime example of this is the PDF specification, which permits the header identifier (or magic bytes) of “%PDF-” (hex “25 50 44 46 2D”) to be located anywhere within the first 1KB of the file. This tolerance in the header location opens the door to other file headers being introduced within the first 1KB, or even to entire files being stored within the 1KB limit. Depending on the specification of the inserted file type, the PDF file can either be encapsulated within legitimate components of the new file type or simply appended to the end of the inserted file type. The result is a file that is legitimately both a PDF and the inserted file type.
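The header tolerance described above can be illustrated with a minimal sketch. The function name `find_pdf_header` and the choice to require the whole magic to sit inside the first 1024 bytes are assumptions made for illustration, not part of any Glasswall tooling.

```python
PDF_MAGIC = b"%PDF-"      # hex 25 50 44 46 2D
HEADER_WINDOW = 1024      # the 1KB tolerance discussed in the text


def find_pdf_header(data: bytes) -> int:
    """Return the offset of the PDF magic inside the first 1KB, or -1.

    Assumption: the whole magic must lie inside the window.
    """
    return data[:HEADER_WINDOW].find(PDF_MAGIC)


# A PNG signature followed by padding and then a PDF header: the file
# starts like a PNG but still satisfies the PDF header tolerance.
sample = b"\x89PNG\r\n\x1a\n" + bytes(20) + b"%PDF-1.7"
offset = find_pdf_header(sample)
```

A non-zero offset is a simple hint that something precedes the PDF header, which is exactly the space a second file type can occupy.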

Why are polyglots an issue for security?

Most security systems, including Glasswall CDR, rely on interpreting the file type being processed correctly to know how to parse and inspect the file for specific or anomalous content. As polyglots rely on their structures being legitimate and therefore within specification of the host document, they are not considered corrupt or malformed, and so file identification systems will typically identify the file as the most “obvious” file type present. This is done by examining the file extension, MIME type or magic byte values. However, as a polyglot can contain multiple legitimate magic byte entries/headers and a profiling system only looks for the most “obvious” file identifier, it can ignore potentially suspicious content.
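The gap between the “obvious” type and the full set of types present can be sketched as follows. The signature table and both function names are illustrative assumptions; a raw substring search like this will also produce false positives on ordinary binary data, so it is a demonstration of the idea rather than a detector.

```python
# A small, illustrative signature table (not exhaustive).
SIGNATURES = {
    "png": b"\x89PNG\r\n\x1a\n",
    "gif": b"GIF89a",
    "jpeg": b"\xff\xd8\xff",
    "pdf": b"%PDF-",
    "zip": b"PK\x05\x06",  # ZIP end of central directory record
}


def obvious_type(data: bytes):
    """Mimic a profiler that only checks the magic bytes at offset 0."""
    for name, magic in SIGNATURES.items():
        if data.startswith(magic):
            return name
    return None


def all_types(data: bytes):
    """Report every known signature found anywhere in the data."""
    return sorted(name for name, magic in SIGNATURES.items() if magic in data)


sample = SIGNATURES["png"] + b"junk" + b"%PDF-1.4" + b"PK\x05\x06" + bytes(18)
first = obvious_type(sample)   # only the leading type is reported
every = all_types(sample)      # all signatures present in the bytes
```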

Let’s think of an example where a file looks like a PNG image file but is also a legitimate PDF file. The PNG is harmless; however, the PDF file contains auto-open commands and a highly malicious JavaScript payload. The security system determines the most "obvious" file type to be a PNG and so analyses the file as a PNG, finding no suspicious content, malicious functionality, or malformations. As the PDF code is contained within a comment tEXt or iTXt block of the PNG, it is processed as a metadata text block which cannot be acted upon directly by an interpreter. As such, the comment content poses no active risk, and the content is deemed to not be a threat. However, upon delivery, the file has no extension and is opened in a web browser by default, which interprets the file as a PDF, triggering the internal payload.

The above is a basic example of the risks associated with polyglots used for malware deployment, but the risks also extend to both infiltration and exfiltration of data. From a CDR perspective, the same applies: if we are not interpreting every file type integrated into a file, we are not validating and applying content management schemas to all the files present. In the example above, the embedded and legitimate PDF file will not be verified by CDR, and its existence in the PNG will not necessarily be sanitized depending on the current content management profile.

Our objectives

Our primary internal objectives for this project, in descending order of priority, are to:

- Ensure Glasswall CDR mitigates/removes polyglots

- Images

- Documents

- Archives

- Executables

- Detect present polyglot file types

- Extract polyglot file sections into separate files for CDR processing

As we are focusing on zero-trust architecture and there are limited legitimate situations where polyglots should be used, a default control to mitigate polyglots is the priority. This mitigation should aim to remove all elements in a file which can be, or (when scanned) potentially are being, used to support a secondary polyglot file type, whilst ensuring the “obvious” or primary file type is not corrupted as a result. This methodology is to be applied to image, office, archive, and potentially executable file types. As image file specifications are much simpler, they provide less scope for polyglot file injection methods than more complex structures. Image types were therefore addressed first so that security enhancements could be deployed into product systems quickly. As the project progresses, more complex file types will be addressed, which will naturally increase the resources required and slow the rate of feature enhancements.

Detection of the installed polyglot sub-types is a secondary objective relative to the core zero-trust functionality our CDR prioritizes. Detection is a commonly addressed issue, with several projects focused on this area producing mixed results that highlight the complexity of the problem. However, when detection is implemented, this information can be used as threat intelligence to help analysts determine what potential risks were being introduced and from where, which can be invaluable.

Extraction of polyglot sub-files is an advanced and purely optional third objective, which would aim to leverage the results of the secondary detection objective to isolate the sub-type files and their locations. From this, using the identified headers’ locations, we can determine the memory location within the polyglot hosting the relevant file sections and extract them to a new memory location, resulting in the full export of the sub-file for CDR processing or secondary security analysis.

Types of polyglots

Polyglots come in a variety of types, reflecting the way the sub-type files are inserted into the primary/host file. These range from simplistic to extremely complex, with some variants only being supported by a very limited subset of file types. As such, not all polyglot variants are supported by all file types and are typically limited by factors such as header/magic byte offset location limitation, internal component capabilities and non-parsed segment availability.

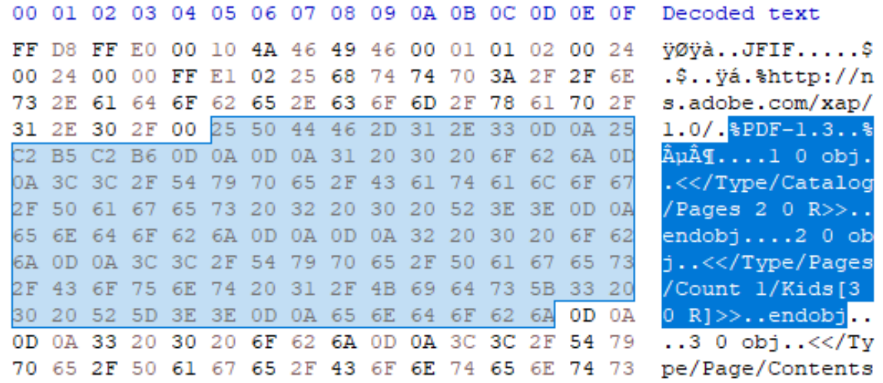

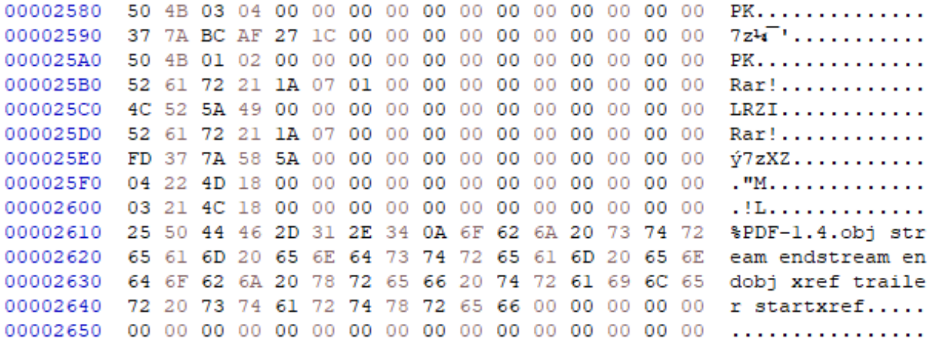

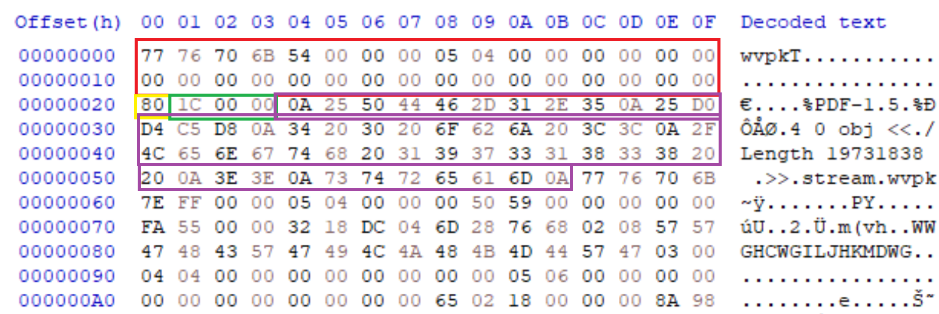

Stack

Stacks are the simplest of polyglot structures and only require the file types to be “stacked” on top of one another. In practice, this is achieved by appending the data which makes up the sub-type file onto the primary file, with the two files occupying the same file entry. This type of polyglot is limited to sub-types which do not have a header offset limitation or whose interpretation is inverted (read from the bottom up), such as ZIP files. In ZIP files, the “header” or primary segment that is used to identify the file is located at the end of the file rather than the beginning. As such, appending a ZIP file to most other file types will produce a legitimate polyglot which is both a standard file and an archive.

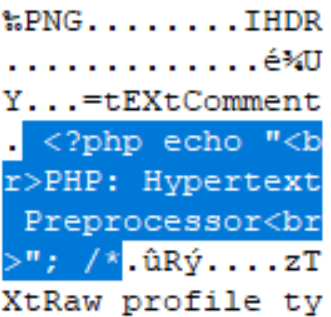

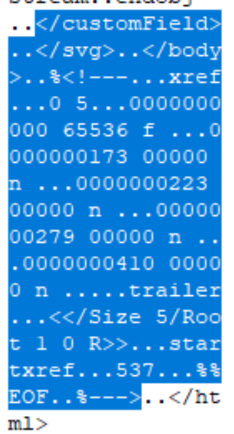

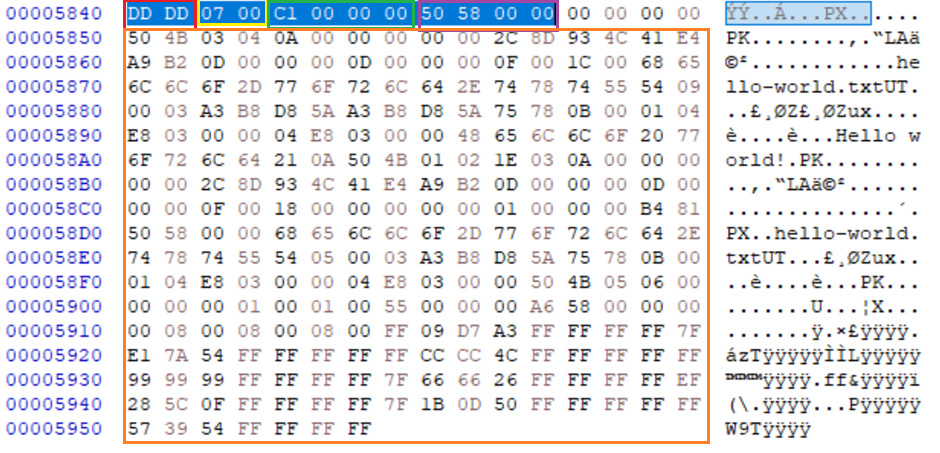

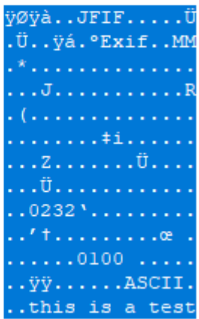

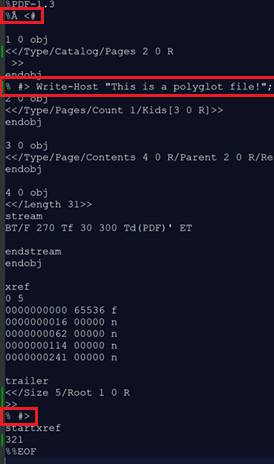

IMAGE 1 – Polyglot stack configuration
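As a minimal sketch of the stack configuration, the snippet below appends an in-memory ZIP archive to some placeholder primary bytes and shows that a standard ZIP reader still opens the result. The helper name `make_zip` and the placeholder primary content are assumptions for illustration; the primary bytes here are not a renderable image.

```python
import io
import zipfile


def make_zip(name: str, content: str) -> bytes:
    """Build a one-member ZIP archive in memory."""
    buf = io.BytesIO()
    with zipfile.ZipFile(buf, "w") as zf:
        zf.writestr(name, content)
    return buf.getvalue()


# Placeholder primary file: a PNG signature plus padding.
primary = b"\x89PNG\r\n\x1a\n" + bytes(32)
stacked = primary + make_zip("note.txt", "hello")

# The ZIP reader locates the end-of-central-directory record from the
# end of the data, so the prepended bytes do not prevent it being read.
with zipfile.ZipFile(io.BytesIO(stacked)) as zf:
    names = zf.namelist()
    content = zf.read("note.txt")
```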

The primary limitation of these types of polyglots is that they tend to only have one sub-type file injected, unless paired with one of the other polyglot insertion techniques. Additionally, the appended sub-type file is typically added post the End Of File (EOF) identifier or final entry of the primary file. Although interpreters will allow the two file types to be handled legitimately, analysis of the file for the location of EOF specifiers can allow for quick resolution of this polyglot variant by truncating all data post the EOF. As this is the simplest polyglot variant, it also has the simplest solution.
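The truncation approach can be sketched for PNG, where the IEND chunk acts as the end-of-file marker. This is a simplified illustration rather than the Glasswall implementation; the function names are hypothetical, CRCs are not verified, and the demo PNG is structurally valid but not a renderable image.

```python
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def chunk(ctype: bytes, payload: bytes = b"") -> bytes:
    """Serialise one PNG chunk: length, type, payload, CRC of type+payload."""
    crc = zlib.crc32(ctype + payload) & 0xFFFFFFFF
    return struct.pack(">I", len(payload)) + ctype + payload + struct.pack(">I", crc)


def truncate_after_iend(data: bytes) -> bytes:
    """Return the PNG with any bytes following the IEND chunk removed."""
    if not data.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG stream")
    pos = len(PNG_SIGNATURE)
    while pos + 12 <= len(data):
        (length,) = struct.unpack(">I", data[pos:pos + 4])
        ctype = data[pos + 4:pos + 8]
        end = pos + 12 + length  # 4 length + 4 type + payload + 4 CRC
        if ctype == b"IEND":
            return data[:end]
        pos = end
    raise ValueError("no IEND chunk found")


png = PNG_SIGNATURE + chunk(b"IHDR", bytes(13)) + chunk(b"IEND")
stacked = png + b"PK\x05\x06" + bytes(18)   # data stacked after the EOF marker
cleaned = truncate_after_iend(stacked)
```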

Parasite

Parasites are more complex and involve the embedding of the sub-type file into legitimate components of the primary file type. This can be achieved in several ways such as taking advantage of embedded file capabilities or even injecting in metadata components which are not parsed/processed by most systems for active rendering. Regarding the latter, PNG or GIF are great examples where comment or text segments are outlined in the specification for the addition of metadata but are not actively used by almost any system.

Using the example previously of a PDF embedded in a PNG, this is achieved via the parasite technique. The PNG has a tEXt segment specified near the start of the file, which is populated with the PDF file data. If the tEXt segment size is correct and the contents within the specified format of ASCII, the image file is not corrupted, and both the PDF and PNG can be interpreted from the same memory location.

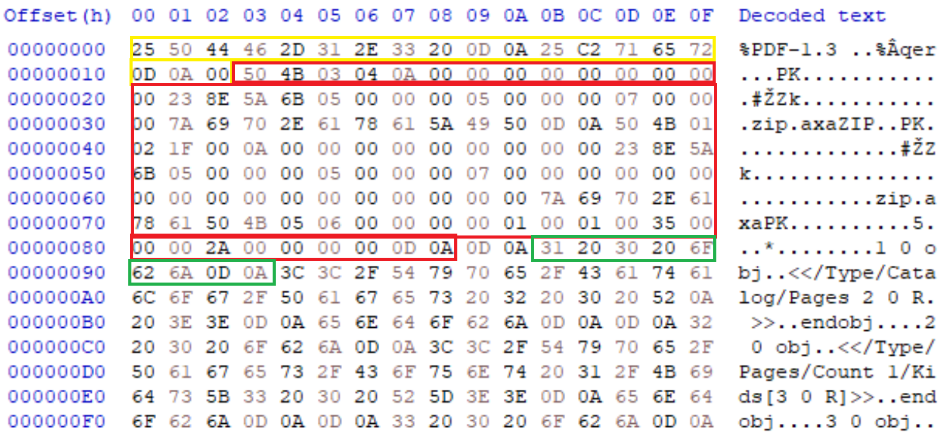

IMAGE 2 – Graphic, parasite hiding as part of an organism, avoiding detection

A more advanced example of this would be using legitimate components of a file to create an active and custom unused space in a file, such as creating an XObject in PDF. In a PDF, you can specify/create an XObject, which is not referenced anywhere in the document and, as such, is not rendered or processed on opening. The XObject can be populated with a stream, which can be made up of the contents of a sub-type file. One concerning implementation of this is to embed encrypted file containers into PDF[9]; by adapting the container to use the PDF header block as the SALT and ensuring the verification block is at offset 0x40, the container can be embedded in the XObject stream at the start of the document without corrupting the PDF. As the XObject is not referenced it is not rendered/processed but is a legitimate component of the PDF specification and structure. As such, the file can be opened as both a PDF and decrypted to reveal a hidden encrypted directory/archive.

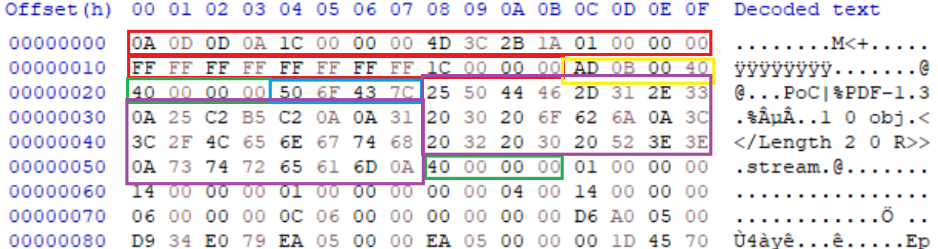

Zipper

Zippers are a much more complex version of parasites where the data of both the primary and sub-type files are embedded in the comment sections of each other. Rather than there being a primary file type and a sub-type, zippers work by using differing comment specifier values to nest the data blocks of each file within the comment blocks of the other (hence zipper). This is typically reserved for script files which can be interpreted by multiple engines and where the multi-line comment blocks can encapsulate the other script versions without needing to handle header locations or required structural elements.

IMAGE 3 – Representation of two items being enfolded into one another

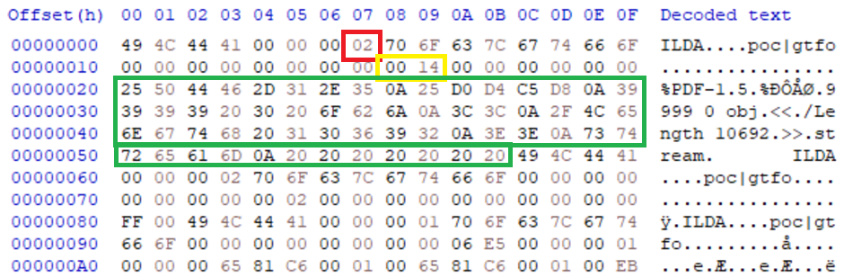

Cavities

Cavity-based polyglots work by embedding sub-type files into unprocessed memory space within a file structure. As an example, in executable files, null-padded space can be referred to as a cavity or code cave which can house arbitrary data. However, the same principle can apply to other file types. Any space within a file’s memory space which can be populated with arbitrary data and is overlooked by an interpreter of the primary file type can be considered a cavity for polyglot injection purposes.

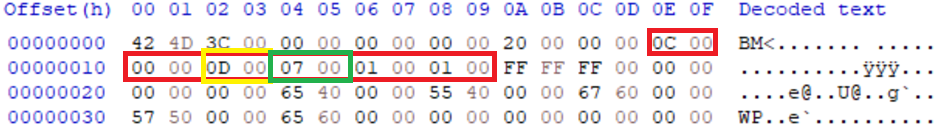

IMAGE 4 – Representation of cavity, commonly called “code caves” which can be used

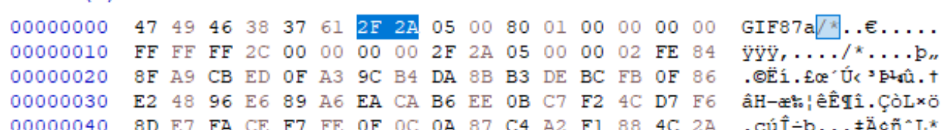

Sample collection

Several databases of polyglot samples are available for free online and can be a good basis for initial sample collection. However, the samples will need to be analysed to determine their polyglot type variation as well as assessing whether they are legitimate (accessible in all formats without extraction methods required for sub-type access) polyglots.

The sources used for initial sample collection are:

- Source 1 - Tweetable polyglot png

- Source 2 - Polyglots database

- Source 3 - Polydet

- Source 4 - Polyshell [Zipper sample script]

- Source 5 - WEBP with MBR bootable segments

Sample generation - automated

The samples which were within the sample sets and databases freely available were not exhaustive or terribly reliable in being true polyglots. Therefore, we are required to turn to sample generation via available polyglot creation tools or manual adaptation/creation of samples.

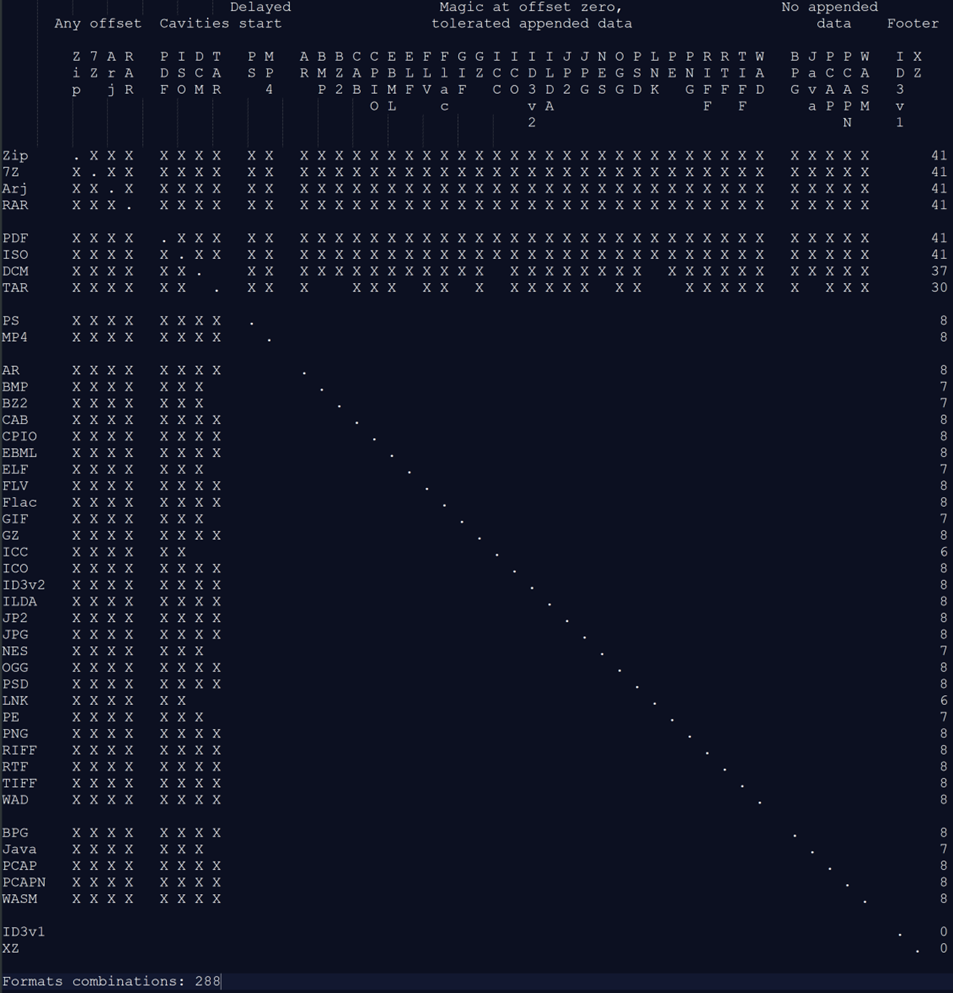

Mitra

This is one of the primary tools used to generate polyglots which are both true polyglots as well as mock or “near” polyglots. “Near” polyglots allow for overwriting of components of the primary file with the original content being encoded in other sections when the primary and sub-type begin at the same offset and, as such, are not considered true polyglots for what we are after. Mock polyglots are designed to insert the identifying markers of various file types (as many as can be supported by the primary) to help create detectable polyglots. However, mock files do not consider the practicality of the sub-type file data embedding and only account for the header identifier, so are also not useful for our purposes.

This tool is good for handling some of the binary formats and will attempt to embed them into parasites and zips where possible but will fall back to stacks by default. If a file type cannot be determined by the tool’s parser, you can force polyglot the files which essentially treats the sub-type as a data blob and appends/stacks it to the file.

IMAGE 5 – Mitra tools combination table for polyglot creation

PowerGlot

PowerGlot is a tool which can be used to a limited degree to embed script payloads into PDF and JPEG images. This has a limited use case but highlights how this can be achieved automatically.

Metasploit bmp-polyglot generator

Metasploit has an encoder module to embed shellcode payloads into BMP files. This can be used to generate, transfer and then deploy malicious shellcode to targeted endpoints. The generator script is written in Ruby; however, it appears to require a Metasploit agent on the endpoint to interpret and extract the shellcode for local memory injection. This may not technically constitute a functional polyglot such as those we are looking for; however, the embedding technique and location may be used for other file types (depending on interpreter readability).

Manual sample creation and vulnerable segment identification

This section outlines how to generate polyglots manually for various formats, focusing on parasite and, where possible, zipper and cavity methods. Stack polyglots only require the appending of the sub-type file to the primary file type and so will not be covered in detail. For each primary file type I will, over the course of this research, identify where sub-types can be supported and provide sample structures (images) to demonstrate their utility.

IMAGES

Universal technique – Pixel data injection

The simplest method an attacker has when dealing with image types is to inject the sub-type file data directly into the image data itself. This can be achieved extremely easily but results in corruption of the image data with clear evidence of suspicious content to a human user or even potentially statistical analysis. As the sub-type file is not injected into ancillary or non-functional elements, it is arguable if this is a polyglot method. However, if both file types can be opened, it may be moot from an offensive perspective depending on the attack vector.

If the image is intended to be viewed by end users, pixel corruption may be a constraining factor. However, if the purpose of using an image as the polyglot primary type is to bypass type-limited restrictions and deploy a sub-type file to a restricted area, the image data corruption is irrelevant. For example, a web portal which allows public uploading of a specific image file type, with the uploads then hosted on the server, is a prime target for this. By creating a polyglot whose primary type matches the required image format, an attacker could upload sub-type script files such as PHP, or a PDF file, to be opened on the server via web-based attacks which alter the type determination of the hosted file (or bypass the extension of the file) to achieve malicious payload execution. In this case, detecting such sub-types would require checking the data block for indicators of secondary file structures such as PDF, script blocks and even formats like ISO.

IMAGE 6 – BMP with PDF injected in image data, resulting in pixel corruption along bottom of image

Mitigation of this type of sub-type injection is problematic to resolve due to the key CDR requirements to retain semantic/visual integrity of the file post-processing. Traditional parasites are confined to specification-defined elements with data types and sizes, which can be leveraged for injection, but injection into the pixel data itself means we cannot simply remove the stored data as we would with other segments. Instead, we must either scan the contents of the pixel data for identifying markers or employ image-wide pixel alterations to achieve destruction of the sub-type document. In regards to the latter method, Glasswall has developed a steganography mitigation system which is tailored to achieve this result[13]. The images below demonstrate the results of this system on the BMP image above in achieving sub-type PDF file destruction.

IMAGE 7 – BMP image data with PDF injected into pixel data stream

IMAGE 8 – BMP image with PDF pixel data embedding, cleaned after steganography mitigation

Universal Technique – Palette data injection

In simple images, such as logos, the image may be stored as a palette image instead of a TrueColour image. In TrueColour images such as Image 6, each pixel typically has 3 bytes assigned to it, representing the RGB channels which determine its ultimate colour. In palette images, each pixel instead contains only 1 byte, which is an index into the colour palette. Standard palettes have a maximum size of 256 colours, which aligns with the 1-byte index value (0 to 255), meaning the image only has a colour range of 256 colours. There are images which support larger palette sizes, but the concept remains the same: by having a pre-set range of colours rather than a dynamic per-pixel value, the required size of the image file is reduced, but so is the colour depth of the image.

When creating polyglots, akin to direct pixel data injection, malicious authors can inject content directly into the palettes of images to store sub-type content. Depending on the size of the palette, this can support script content injection or even potentially complex sub-types such as PDF. By altering the palette data, the pixels which are indexed to the altered entries will be affected, making visual detection of corruption by a human user extremely likely. However, as with direct pixel injection, if the attacker does not intend for the file to be viewed by a human, this visual corruption may not be an issue.

As an example, let’s use a PNG file with a 255-byte palette. From section 11.2.3 of the PNG specification, this requires the addition of a PLTE chunk made up of 3-byte RGB entries. By creating a cover PNG image, we can find the PLTE entry in the file’s structure.

IMAGE 9 – PNG file using colour palette PLTE(Red) entry values(Green)

If we change the palette data contents to contain a malicious PHP script, the rendered image is affected but not corrupted. As the palette contents are accepted as long as the palette entries are the correct size (number of entries x 3), manipulation of the file in this way does not invalidate the file or the visual components.

IMAGE 10 – PNG with PHP(Purple) injected into PLTE(Red) palette(Green)

This example has injected a PHP script which will attempt to read a file and dump its contents. At the beginning of the script we use a multi-line comment end specifier to pair with a multi-line comment start injected earlier in the file, ensuring the script runs as intended. Mitigating this type of injection has the same problems highlighted for pixel data injection, but it also has the same solution. Glasswall’s steganography mitigation tool can destroy these types of polyglots in palettes by employing palette shuffling, which randomises the palette colour entries. By relocating each palette-defined colour to a new index, this corrupts the required UTF-8 character sequence for the PHP script in this example, but would also corrupt the file structure of any sub-type file in the image’s palette. Below, Image 11 demonstrates this effect when the malicious file has been run through the Glasswall CDR process including the steganography mitigation via palette shuffling.

IMAGE 11 – Palette PLTE(Red) chunk in PNG image after CDR and palette shuffling(green)
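The idea behind palette shuffling can be sketched as below. This is not Glasswall’s implementation: the function name is hypothetical, and the sketch assumes the palette is available as raw 3-byte RGB entries and the pixel data has already been decoded into one 8-bit palette index per pixel. Entries are permuted and every pixel index is remapped, so the rendered colours are unchanged while the byte order inside the palette is disturbed.

```python
import random


def shuffle_palette(palette: bytes, indices: bytes, seed=None):
    """Permute RGB palette entries and remap pixel indices to match."""
    if len(palette) % 3:
        raise ValueError("palette length must be a multiple of 3")
    entries = [palette[i:i + 3] for i in range(0, len(palette), 3)]
    order = list(range(len(entries)))
    random.Random(seed).shuffle(order)          # order[new_index] = old_index
    new_palette = b"".join(entries[old] for old in order)
    remap = {old: new for new, old in enumerate(order)}
    new_indices = bytes(remap[i] for i in indices)
    return new_palette, new_indices


# A 15-byte palette (5 entries) carrying script-like text.
palette = b"<?php echo 1;?>"
indices = bytes([0, 1, 2, 3, 4, 4, 0])
new_palette, new_indices = shuffle_palette(palette, indices, seed=7)
```

Because any contiguous payload longer than one entry depends on entry order, a random permutation is likely to break it, although a single shuffle can by chance leave short sequences intact.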

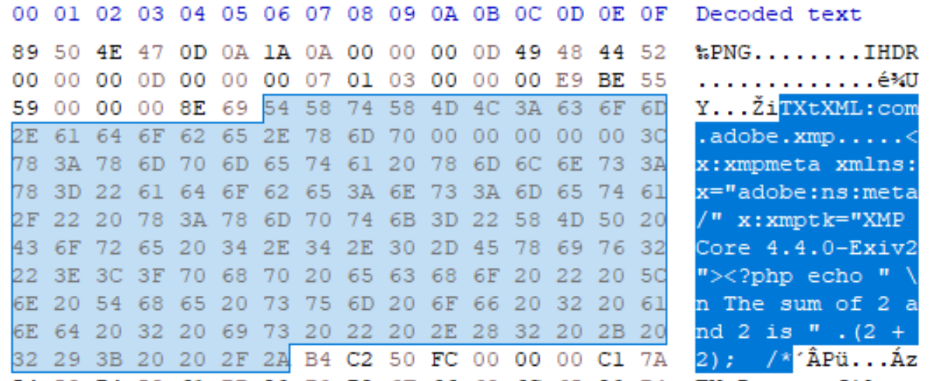

PNG

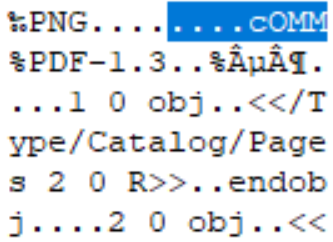

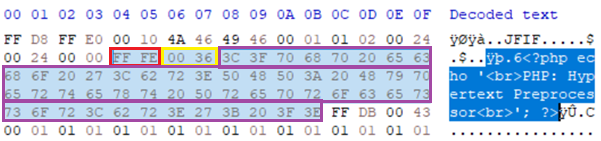

tEXt or iTXt

Sections 11.3.4.3 and 11.3.4.5 of the PNG specification outline the uncompressed ancillary text chunks which can be used to store arbitrary textual information. Depending on the keyword, the text can be used to achieve ancillary processing based on specific rendering software. For the keyword “comment”, for example, the textual information is limited to the maximum chunk size of the file and must be compatible with the ISO-8859-1 character set for tEXt and ISO 10646-1 for iTXt, but otherwise permits any content. The tEXt or iTXt chunk is not actively interpreted by most image rendering systems and is ignored, with the focus instead being on IDAT chunks. As these chunks can be placed anywhere within the file, although placement outside the IDAT block is recommended, they can be used to embed a sub-type file at the start or end of a document based on the sub-type’s requirements (header offset etc.).

IMAGE 12 – tEXt chunk definition at start and end of document, PHP payload with image data encapsulated in multi-line comment sequence

From the example above, we have embedded a PHP script in the PNG file under two different tEXt chunks. The first chunk is located just after the IHDR section and ends with the multi-line comment start symbol “/*”. The second comment is located just before the IDAT block and consists of the multi-line comment end symbol “*/” and the closing of the PHP block. Essentially, this achieves the commenting out of all the other binary and metadata of the primary image file, which can potentially cause PHP interpreter issues. Running this file results in the correct echo printout using php.exe with only minor errors after the execution of the block we specified. It is worth noting that in most cases with script injection the interpreter will throw exceptions, however if the primary action/purpose is achieved, the script deployment can be deemed a success.
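The layout of the two tEXt chunks described above can be sketched as follows, using a harmless echo as the payload. The helper name `text_chunk` and the exact payload strings are assumptions for illustration; the chunk layout (length, type, keyword, null separator, text, CRC) follows the PNG chunk structure.

```python
import struct
import zlib


def text_chunk(keyword: str, text: str) -> bytes:
    """Build a tEXt chunk: keyword, null separator, Latin-1 text, CRC."""
    body = keyword.encode("latin-1") + b"\x00" + text.encode("latin-1")
    crc = zlib.crc32(b"tEXt" + body) & 0xFFFFFFFF
    return struct.pack(">I", len(body)) + b"tEXt" + body + struct.pack(">I", crc)


# First chunk: opens the PHP block and ends with a multi-line comment start,
# so the binary image data that follows is commented out for the interpreter.
opener = text_chunk("Comment", "<?php echo 'polyglot'; /*")

# Second chunk: closes the comment and then the PHP block.
closer = text_chunk("Comment", "*/ ?>")
```

Scanning tEXt and iTXt payloads for sequences such as `<?php`, `/*` or `%PDF-` is one way the same layout knowledge can be turned towards detection.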

custom chunk (cOMM)

From section 5.4 we are provided a comprehensive outline of the primary and ancillary chunk specifications. From this, we can see that ancillary chunks are essentially ignored as standard by PNG rendering systems and are instead processed only by specific rendering systems, essentially making them unrestricted metadata chunks. Due to this, we can add any ancillary chunk we like with any name if it adheres to the requirements outlined in section 5.4 for chunk naming. As such, we now have the capability to add any ancillary chunk, under any name, to any section of the PNG file we like with custom content. Section 14 of the specification outlines the limitations of custom ancillary chunk locations which are, in summary, not to be placed in a location corrupting IHDR data or between IDAT chunks.

IMAGE 13 – Custom chunk with keyword “cOMM”

In the example above, we can see the addition of the “cOMM” object at the start of the PNG document before the IHDR, which is populated with 508 bytes which make up the sub-type PDF document. As the ancillary block is ignored by the general interpreter, the PNG still renders correctly and, when opened in Adobe, the sub-type PDF document is displayed correctly, achieving a true parasite polyglot file.
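A simple way to surface custom chunks such as “cOMM” is to walk the chunk list and report ancillary chunks that are not in the standard set. The sketch below is illustrative only: the function names are hypothetical, the known-chunk list covers the commonly registered chunk types and may need extending, and CRCs are not checked. The ancillary property is read from bit 5 of the first type byte (a lowercase first letter).

```python
import struct

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"

KNOWN_CHUNKS = {
    b"IHDR", b"PLTE", b"IDAT", b"IEND", b"tRNS", b"cHRM", b"gAMA", b"iCCP",
    b"sBIT", b"sRGB", b"tEXt", b"zTXt", b"iTXt", b"bKGD", b"hIST", b"pHYs",
    b"sPLT", b"tIME",
}


def iter_chunks(data: bytes):
    """Yield (type, payload) for each chunk after the PNG signature."""
    if not data.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG stream")
    pos = len(PNG_SIGNATURE)
    while pos + 8 <= len(data):
        (length,) = struct.unpack(">I", data[pos:pos + 4])
        ctype = data[pos + 4:pos + 8]
        yield ctype, data[pos + 8:pos + 8 + length]
        pos += 12 + length


def unknown_ancillary(data: bytes):
    """Return (type, size) for ancillary chunks outside the known set."""
    return [(t, len(p)) for t, p in iter_chunks(data)
            if t not in KNOWN_CHUNKS and t[0] & 0x20]


def raw_chunk(ctype: bytes, payload: bytes) -> bytes:
    """Demo helper: chunk with a zeroed CRC (CRCs are not checked here)."""
    return struct.pack(">I", len(payload)) + ctype + payload + bytes(4)


sample = (PNG_SIGNATURE + raw_chunk(b"cOMM", bytes(508))
          + raw_chunk(b"IHDR", bytes(13)) + raw_chunk(b"IEND", b""))
flagged = unknown_ancillary(sample)
```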

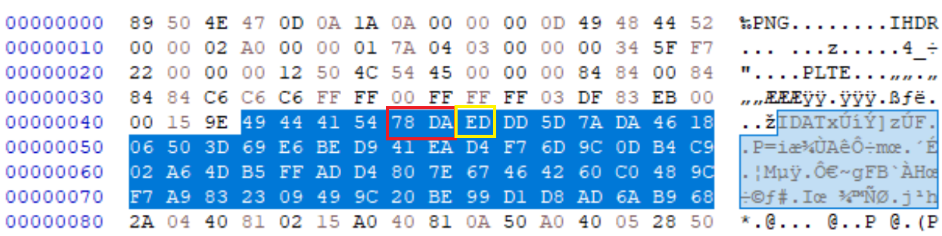

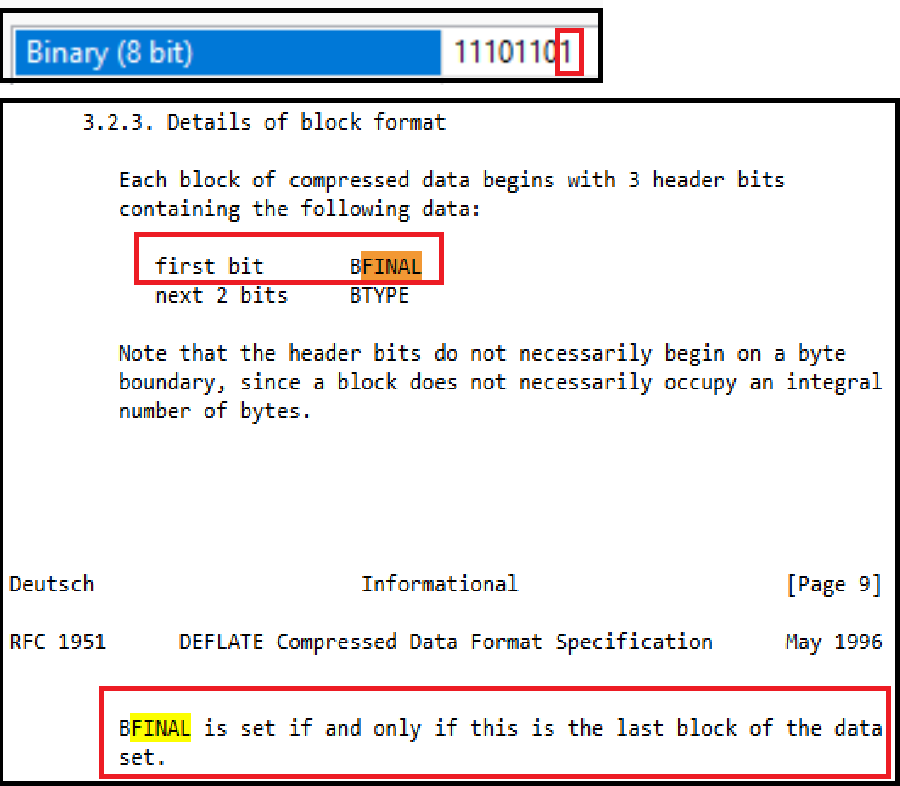

IDAT compress data padding

Research by David Buchanan[1] revealed a quirk in the way that PNG interpreters handle the compressed stream (deflate compression) within the IDAT chunks of PNG images. Due to the use of Huffman coding in deflate, the size of the decoded blocks is not determinable from the external chunk specifiers and can only be determined during decoding. The decoder processes the IDAT chunk contents until it reaches a block with the “last block” flag (BFINAL – RFC-1951) set and that block’s subsequent End Of Block (EOB) marker (256 – RFC-1951); without decoding, it is not possible to find these flags. As such, data can be appended to IDAT chunks containing deflate-encoded blocks without corrupting the image, allowing injection of sub-type file content into its legitimate structures, with the only change needed being an update to the chunk size specifier to incorporate the new data. The image will still render correctly, and the injected data can only be identified as such during an analytical decompression process, which would check whether the whole chunk is decoded or not.

IMAGE 14 – IDAT chunk with zlib header 0x78DA (Red), followed by decode block header 0xED (Yellow)

IMAGE 15 – zlib decode block header 0xED binary value, highlighting set of BFINAL in first block
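The analytical decompression check can be sketched with Python’s `zlib` module, whose decompression object exposes any bytes left over after the compressed stream ends. The function names are hypothetical, and the sketch assumes the payloads of all IDAT chunks have already been concatenated into one byte string.

```python
import zlib


def idat_trailing_bytes(idat_payload: bytes) -> bytes:
    """Return bytes left over once the zlib stream in the IDAT data ends."""
    decoder = zlib.decompressobj()
    decoder.decompress(idat_payload)
    if not decoder.eof:
        raise ValueError("zlib stream is truncated")
    return decoder.unused_data


def deflate_block_header(first_byte: int):
    """Split the first deflate byte into (BFINAL, BTYPE) per RFC 1951."""
    bfinal = first_byte & 0x01          # bit 0: last-block flag
    btype = (first_byte >> 1) & 0x03    # bits 1-2: block type
    return bfinal, btype


stream = zlib.compress(bytes(64))
clean = idat_trailing_bytes(stream)                   # nothing after the stream
padded = idat_trailing_bytes(stream + b"%PDF-1.4")    # injected tail is exposed
header_bits = deflate_block_header(0xED)              # value shown in Image 15
```

For the 0xED byte highlighted in the images, BFINAL is set and the block type is 2 (dynamic Huffman codes).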

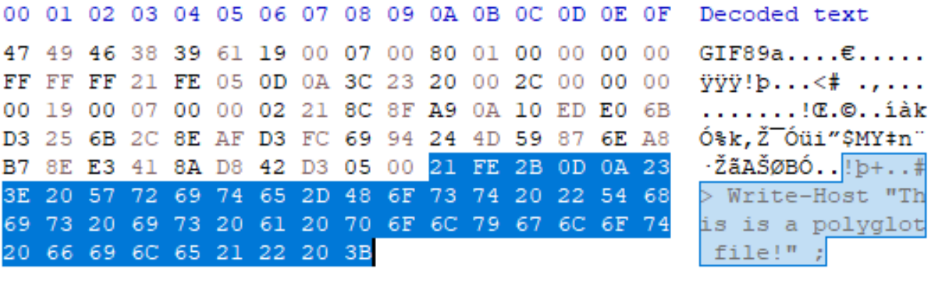

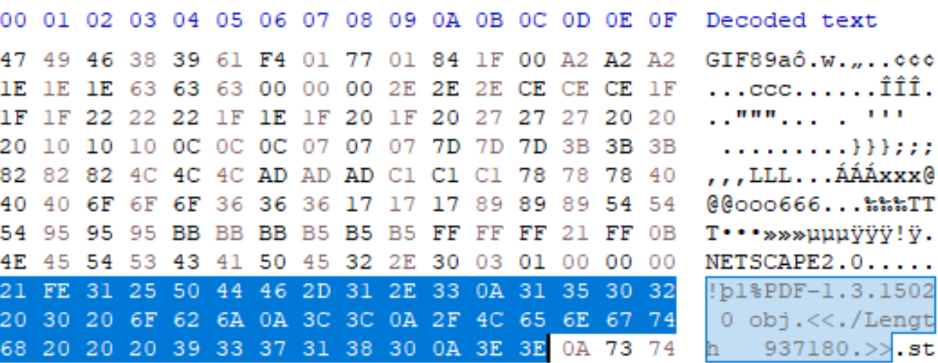

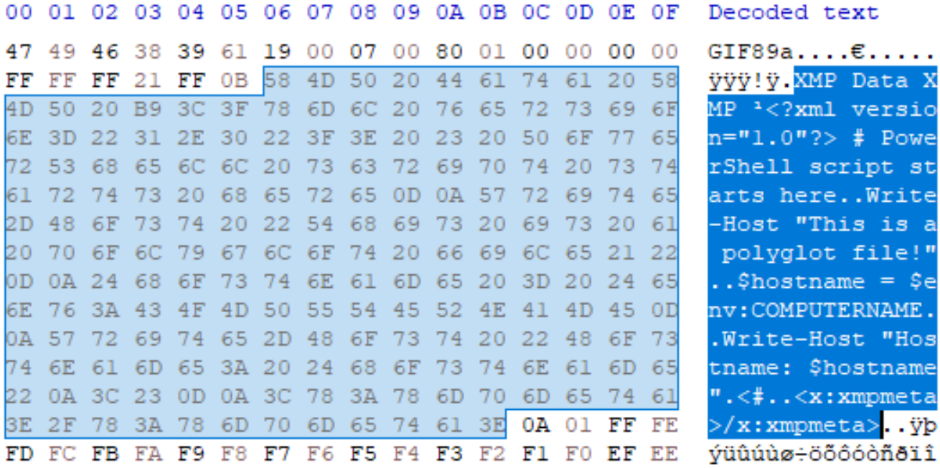

GIF

Comment section

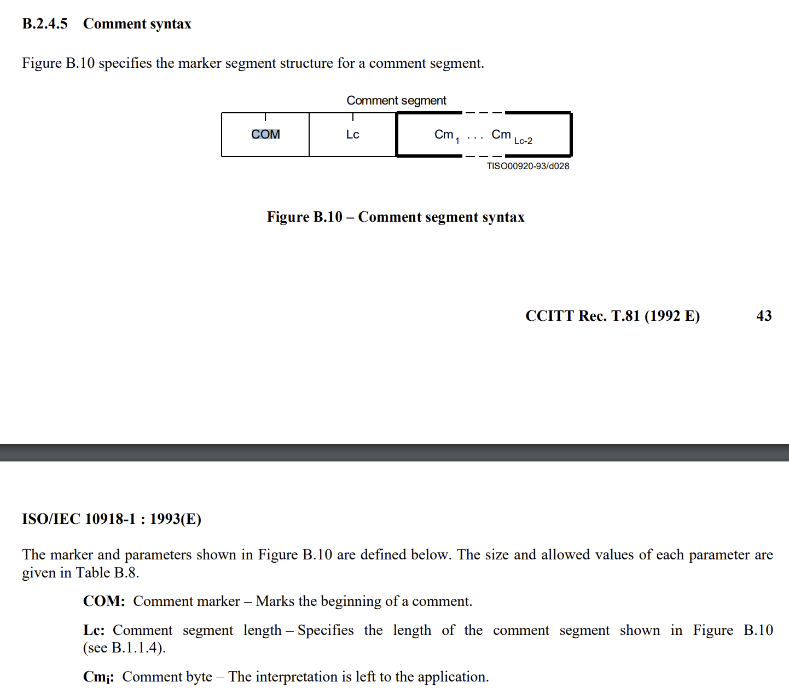

GIF supports a comment segment as outlined in GIF specification section 24. Such a segment requires the extension introducer field 0x21, the comment label (tag) value 0xFE, one or more data sub-blocks (each a size byte followed by the comment data) and finally a block terminator. This looks like:

“21 FE <sub-block size, 1 byte> <up to 255 bytes of comment data encoded as 7-bit ASCII> [further sub-blocks] 00”

IMAGE 16 – GIF comment block “21 FE” with PS1 script file sub-type
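The comment block layout can be sketched as a small builder. The function name is hypothetical; as we read the GIF89a specification, each data sub-block is prefixed with a single size byte (maximum 255) and the extension ends with a zero-length block terminator.

```python
def gif_comment_extension(text: bytes) -> bytes:
    """Build a GIF Comment Extension: 21 FE, data sub-blocks, terminator."""
    out = bytearray(b"\x21\xFE")          # extension introducer + comment label
    for start in range(0, len(text), 255):
        block = text[start:start + 255]
        out.append(len(block))            # one-byte sub-block size
        out += block
    out.append(0x00)                      # block terminator
    return bytes(out)


short = gif_comment_extension(b"hi")
long_block = gif_comment_extension(b"A" * 300)   # spills into a second sub-block
```

Payloads longer than 255 bytes are interrupted by size bytes, which is one reason longer sub-type files need care when placed in GIF comments.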

The data must be in 7-bit ASCII and is not to be used for control information for custom processing. Two points in the specification are of primary interest for introducing polyglots. The first is:

“This block is OPTIONAL; any number of them may appear in the Data Stream.”

This indicates that there can be multiple comment sections in one GIF file. This can be important for handling script file injections where binary data may need omitting (see Image 9). The second important part is:

“Position - This block may appear at any point in the Data Stream at which a block can begin; however, it is recommended that Comment Extensions do not interfere with Control or Data blocks; they should be located at the beginning or at the end of the Data Stream to the extent possible.”

This outlines that these blocks, which are ignored by decoders/interpreters, can appear at any point in the document. It is recommended that they appear outside the blocks of the data stream, but this is not a requirement. This means that they can be injected at any point in the file’s structure, making them harder to find and capable of being used for more advanced parasite polyglot injection (e.g. PDF XObject scattering).

IMAGE 17 – GIF with comment segment “21 FE” with PDF header content sub-type

Logical screen descriptor manipulation

The Logical Screen Descriptor (LSD) of a GIF file specifies information about the image pixel size, colour tables and other such information. As with other formats, malicious actors can manipulate this to inject character sequences such as script block comment segments. In the example below, the logical screen descriptor width is altered to the bytes “0x2F2A”, which in UTF-8 is “/*”, the multi-line comment opener for JavaScript.

IMAGE 18 – GIF LSD width size alteration to achieve command block char injection for JS

In this case, the image file’s data/pixel information was modified so that the LSD aligned with the stored image information. As such, the LSD is legitimate relative to the image data and is not considered corrupt or malformed. Using this method, an actor would be able to run this image as a JS script file and comment out the data content of the image file, leaving only the JS content to be executed whilst retaining a legitimate GIF image file structure.
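A short sketch shows how the width bytes are read and what image width the “/*” sequence implies. The function names are hypothetical; the width and height are read as the two little-endian 16-bit values that follow the 6-byte GIF signature.

```python
import struct


def lsd_dimensions(data: bytes):
    """Return (width, height) from the Logical Screen Descriptor."""
    if data[:6] not in (b"GIF87a", b"GIF89a"):
        raise ValueError("not a GIF stream")
    return struct.unpack("<HH", data[6:10])   # little-endian 16-bit values


def width_is_comment_opener(data: bytes) -> bool:
    """Flag a width field whose raw bytes spell the '/*' comment opener."""
    return data[6:8] == b"/*"


header = b"GIF89a" + b"/*" + struct.pack("<H", 1)
dimensions = lsd_dimensions(header)
suspicious = width_is_comment_opener(header)
```

The bytes 2F 2A correspond to a logical screen width of 10799 pixels, an unusual value that could itself serve as a weak indicator.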

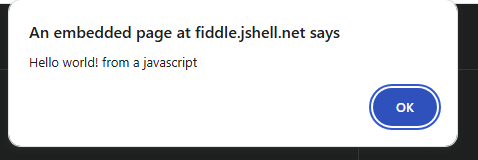

IMAGE 19 – GIF executing as a JavaScript in fiddle

JPG

JFIF segment (metadata)

JFIF is the JPEG File Interchange Format, which can be used to store metadata about JPEG files as part of the APP segment structure. This data can include thumbnail data as well as, potentially, a JFIF Extension (JFXX) segment or extension data which can be used to hide arbitrary data. As these elements are used to store secondary information, which is not a core requirement for the image itself but is instead normally used for vendor or platform specific handling, they allow for manipulation/injection whilst retaining the image’s integrity.

As most rendering systems treat this embedded metadata element as arbitrary data, and it can reach the maximum segment size of 0xFFFF, its removal should be considered a standard operation to mitigate the risk of polyglot injection here.

IMAGE 20 – JFIF content in APP0 segment

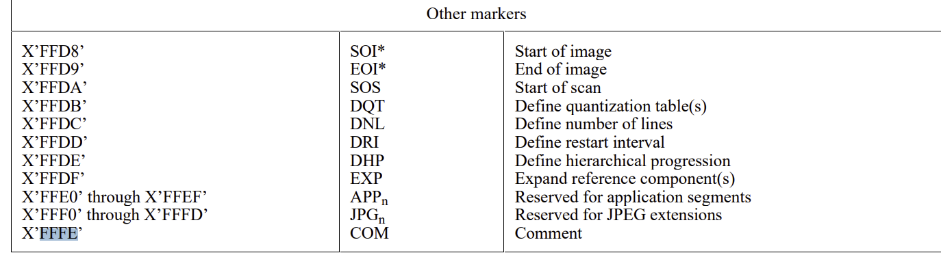

COM segment (comment)

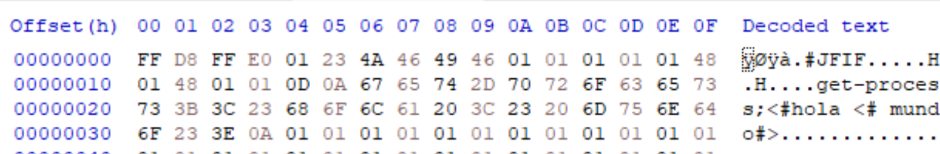

Within the JPEG specification ISO-IEC-10918-1 under section B.2.4.5, the comment syntax outlines the configuration of the comment segment, which can exist within a JPEG file structure.

IMAGE 21 – JPEG specification B.2.4.5 for comment segment configuration

Specification adherent comment sections start with segment identifier “0xFFFE”, which is specified in the marker code assignment table B.1.

IMAGE 22 – JPEG specification table B.1 outlining segment marker values

The specification does not outline how many comments the document type can support/allow or where they may be located within the document. This means that, in practice, these comment segments can be injected anywhere between data/essential segments of the file, permitting their use for polyglot parasite creation. As these can be injected at the bottom, in the middle or even very close to the beginning of the document, this supports most file types which have non-zero offset header requirements such as PDF, script payload blocks, compressed file types and even ISO/executables. The example below demonstrates a PHP script injection within JPEG which allows the file to be opened as both a JPEG image and executed through a PHP interpreter (such as php.exe):

IMAGE 23 – JPEG COM segment with sub-type PHP script file polyglot injected (Red = COM identifier, Yellow = COM segment size, Purple = COM data)

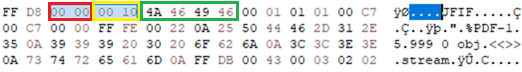
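As a minimal sketch of how such comment segments could be located for inspection or removal, the snippet below walks the marker segments of a JPEG up to the start of scan data and returns every COM (0xFFFE) payload. The function names `iter_jpeg_segments` and `comment_segments` are hypothetical, and the walker assumes a well-formed header area (no fill bytes and no length-less markers before the scan), which real files do not always honour.

```python
def iter_jpeg_segments(data: bytes):
    """Yield (marker, payload_offset, payload) for each segment before scan data."""
    if data[:2] != b"\xff\xd8":
        raise ValueError("missing SOI marker 0xFFD8")
    pos = 2
    while pos + 4 <= len(data):
        if data[pos] != 0xFF:
            raise ValueError(f"expected a marker at offset {pos}")
        marker = data[pos + 1]
        if marker in (0xDA, 0xD9):  # SOS or EOI: stop walking (assumption of this sketch)
            return
        # The 2-byte big-endian length includes the length field itself.
        length = int.from_bytes(data[pos + 2:pos + 4], "big")
        yield marker, pos + 4, data[pos + 4:pos + 2 + length]
        pos += 2 + length


def comment_segments(data: bytes):
    """Return (offset, payload) for every COM segment (marker 0xFE)."""
    return [(off, payload) for marker, off, payload in iter_jpeg_segments(data)
            if marker == 0xFE]
```

A CDR-style workflow could drop every payload returned by `comment_segments` and rebuild the file from the remaining segments; this sketch only covers the discovery step.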

No APP0 JFIF comment

In some examples, JPEG files can be created without APP segments, which are typically reserved for hosting the JFIF or EXIF structures. This is highlighted by the research done by Ange Albertini[2]. This is useful as some interpreters for other file types, such as PDF, specifically check the byte sequence of the headers to determine if the file is a PDF or a JPG. In these use cases, the systems check for the JPEG SOI “0xFFD8” followed by a segment identifier “0xFF”. If this pattern is encountered, the interpreter will open it as a JPEG and not a PDF, regardless of whether a PDF header is present in the file.

Due to robustness built into several JPEG interpreters, however, it’s possible to include the JFIF segment without including an APP0 segment specifier. In the example below, the APP0 segment specifier is removed from a file whilst retaining the JFIF content, and a comment segment containing PDF data is added immediately after that segment. The PDF data specifies a stream start, which effectively means that, if the file is rendered as a PDF, the contents of the JPEG will be ignored by the PDF interpreter as stream content until it hits the “endstream” keyword. As the JPEG pattern has been avoided and the PDF data is included in a comment segment, the creator avoids the PDF interpreter forcibly interpreting the file as a JPEG.

IMAGE 24 – JPEG file with APP0 segment identifier removed and PDF data contained in COM segment. (Red = Missing APP segment identifier, Yellow = Segment size, Green = JFIF identifier)

It would be expected in this use case, given how the specification defines the APP segment, that this JPEG would fail to open as an image. However, as interpreters are built to be robust, in most cases they simply assume an APP segment will be present, check for the segment identifier and continue without issue. This allows a JPEG file to host a PDF document without running afoul of the PDF interpreter safeguards designed to prevent this.

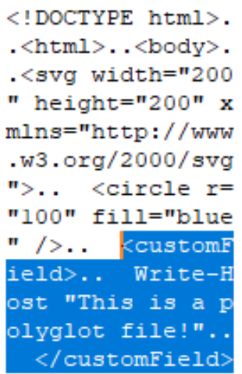

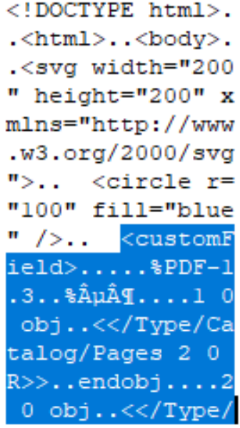

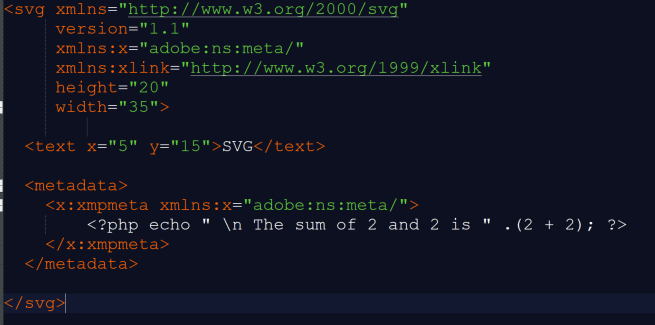

SVG

Ancillary elements (custom, metadata, description)

SVG files are essentially just XML files which are interpreted as images. To achieve a functional polyglot via the parasite method, we can employ arbitrary content element injection. Elements which can achieve this include expected file type specific elements such as “<metadata>” or “<desc>”. Additionally, data can be injected using custom field elements (in alignment with SVG specification section 5.10 “Foreign namespaces and private data”) or via appropriate commenting in line with standard XML structure requirements (section 2.5 of XML specification 5th edition). All these methods are legitimate structure components which are permitted by interpreters.

This means that parasite polyglots for SVG are extremely easy to implement, as the header for SVG is relatively small and simple when compared to other file formats. This is demonstrated below with the creation of a custom element “<customField>”, which hosts a PS1 command line. When run as a PowerShell script file it executes successfully:

IMAGE 25 – Custom element injection into SVG image block of HTML file structure

Research by Mauro Gentile at Minded Security conducted in 2015[3] investigated specific use cases where SVG is used to polyglot malicious PDF files to achieve misinterpretation and execute malicious payloads. This was achieved via SVG uploads without CDR or structural verification allowing hosting of PDF files which could subsequently achieve JS execution or file system access via PDF forms. This was duplicated in our test set creation, both using a standalone custom element and mixed and split across the file using custom elements and comment blocks.

IMAGE 26 – SVG with PDF sub-type injection across both custom field and comment block
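The injection points described above (ancillary elements, custom elements and comments) can be enumerated with a standard XML parser. The following is a rough sketch only: `injection_candidates` is a hypothetical helper, the `KNOWN` allow-list is a deliberately tiny illustrative subset of SVG element names rather than the full specification, and comments outside the root element are not reported.

```python
import xml.etree.ElementTree as ET

SVG_NS = "http://www.w3.org/2000/svg"
ANCILLARY = {"metadata", "desc"}
# Illustrative subset only; a real allow-list would be derived from the SVG specification.
KNOWN = {"svg", "g", "defs", "path", "rect", "circle", "ellipse", "line",
         "polyline", "polygon", "text", "tspan", "image", "use", "title", "style"}


def injection_candidates(svg_text: str):
    """List comments, ancillary elements and unrecognised elements in document order."""
    # insert_comments=True keeps XML comments in the tree (Python 3.8+).
    parser = ET.XMLParser(target=ET.TreeBuilder(insert_comments=True))
    root = ET.fromstring(svg_text, parser=parser)
    findings = []
    for node in root.iter():
        if node.tag is ET.Comment:
            findings.append(("comment", node.text or ""))
            continue
        if node.tag.startswith("{"):
            ns, _, local = node.tag[1:].partition("}")
        else:
            ns, local = "", node.tag
        if local in ANCILLARY:
            findings.append(("ancillary", local))
        elif ns != SVG_NS or local not in KNOWN:
            findings.append(("custom", local))
    return findings
```

Each finding marks a location whose content an image renderer would normally ignore, which is exactly where sub-type data such as a script line or PDF fragment could be placed.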

BMP

From a polyglot construction perspective, BMP is a limiting choice of base file type due to its extremely curated structure and disregard and exclusion of all comment and vendor-specific fields. BMP was conceived and developed by Microsoft, who kept the original design limited to only structural definitions of image data without scope for custom renderer handling using ancillary metadata. Later versions added some metadata support, but large or textual support is not included in their specification. Custom versions of BMP can exist, but they are not covered here. The specification for BMP is bundled under the WMF specification in the Windows documents. [14][15]

From this, we can ascertain that polyglots using a BMP base typically are only viable via the stack creation method due to the strict nature of the specification. However, some complex injection methods can be included with limited viability.

Segment Padding

One of the few ways to achieve parasite polyglots with BMP is to abuse some of the file definitions to create spaces (cavities) in the file, which can potentially be leveraged to store arbitrary content. The interpreter will ultimately ignore the created space as it exists outside the required data size & location and so can contain any data. This is a much more involved process and can rarely be automated.

To do this, the header information is adapted so that gaps are created between the required sections, which most interpreters will ignore. These gaps can be any size relative to other required elements (such as colour tables) and the unused space between the original end of the document and the maximum 4-byte value of 4,294,967,295 bytes. This can allow the injection of complex file types such as a PDF.

IMAGE 27 – PDF injected into BMP segment padding (Red = BMP type specifier, Yellow = File size, Green = Offset to image data location, Purple = Image Header, Blue = Padding data between Image Header and data, PDF injected)
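One way to spot this kind of cavity is to compare the declared offset to the pixel data against the space actually needed by the headers and colour table. The sketch below is an approximation under stated assumptions: `bmp_header_gap` is a hypothetical name, only the 12-byte BITMAPCOREHEADER and the 40-byte-or-larger Windows headers are handled, and the optional colour masks that can follow a 40-byte header when bit fields are used are not accounted for.

```python
import struct


def bmp_header_gap(data: bytes) -> int:
    """Return the number of unexplained bytes between the headers and the pixel data."""
    if data[:2] != b"BM":
        raise ValueError("not a BMP file")
    (pixel_offset,) = struct.unpack_from("<I", data, 10)  # offset to image data
    (dib_size,) = struct.unpack_from("<I", data, 14)      # size of the image header
    if dib_size == 12:  # BITMAPCOREHEADER: 16-bit fields, 3-byte palette entries
        (bpp,) = struct.unpack_from("<H", data, 24)
        colours = (1 << bpp) if bpp <= 8 else 0
        table = colours * 3
    else:               # BITMAPINFOHEADER and later: 4-byte palette entries
        (bpp,) = struct.unpack_from("<H", data, 28)
        (clr_used,) = struct.unpack_from("<I", data, 46)
        colours = clr_used or ((1 << bpp) if bpp <= 8 else 0)
        table = colours * 4
    return pixel_offset - (14 + dib_size + table)
```

A result greater than zero indicates padding between the header structures and the image data that a renderer would skip over, which is the region highlighted in blue in the image above.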

Header Injection

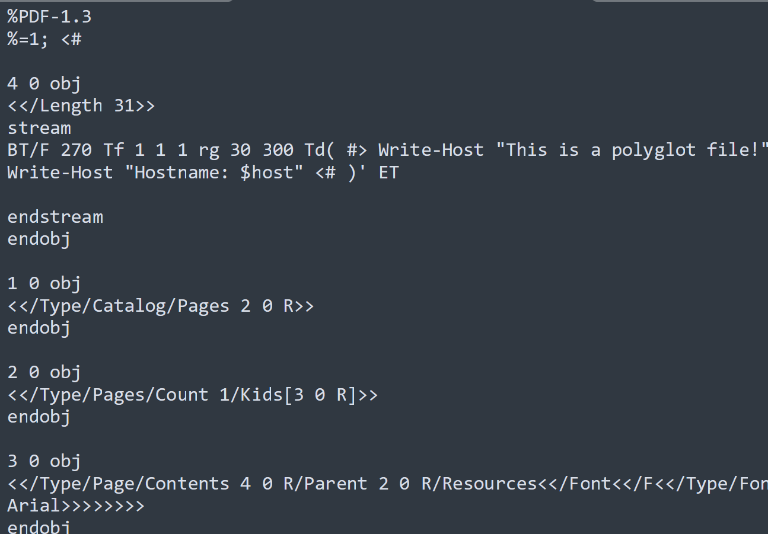

Another curious methodology, outlined by researcher “NuclearFarmboy”[4], leverages the fact that header fields can hold any value we desire, so they can be manipulated to contain ASCII values of our choosing. This is valuable as it allows us to inject comment character sequences so that script blocks can be embedded without encountering errors during execution.

In their work, they adapt the 4-byte BMP file size specifier at offset 0x02, immediately after the “BM” signature, to a value which corresponds in ASCII to a variable assignment followed by the comment character for Python scripts: “=4;#”. The Python script itself is then appended to the bottom of the file, achieving a parasitic stack. The injected sequence allows a script interpreter to ignore the entire image data block: it first assigns the value 4 to the variable BM, terminates the statement with “;” and then opens a comment with “#”. This results in full execution of the script appended to the file whilst retaining the file as a legitimate image file.

IMAGE 28 – Source “NuclearFarmboy” [4] research demonstrating injection of python comment block code “=4;#”
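The dual reading of those bytes can be illustrated with a short sketch. It assumes the four bytes “=4;#” directly follow the “BM” signature, and it substitutes printable placeholder text for the image data, since real pixel data containing newline or null bytes would need the additional handling described in the referenced research.

```python
# The same six bytes read two ways: a BMP header prefix and a line of Python.
header = b"BM" + b"=4;#"

# Read as BMP: the four bytes after the signature form a little-endian size value.
declared_size = int.from_bytes(header[2:6], "little")

# Read as Python: "BM=4;#..." assigns 4 to BM and comments out the rest of the line.
# The placeholder stands in for the image bytes (an assumption of this sketch).
source = header.decode("ascii") + " <image bytes would sit here>\nresult = BM + 1\n"
scope = {}
exec(source, scope)
```

After execution, `scope["BM"]` holds 4 and the line appended after the comment has run, while `declared_size` shows that the same bytes are simply a very large, but syntactically valid, file size.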

Size overload

This section focuses on injection into BMP version 2 or lower via data segment size overloading and injection. To do this, we must manipulate the image header data at the beginning of the file to alter the data segment sizes, which inevitably affects the generated image. In a simple monochrome image, this can be achieved by altering the width and height specifiers in the BITMAPCOREHEADER, which is specified in section 2.2.2.2 of the MS-WMF specification. A BMP can be identified as version 2 when it uses the BITMAPCOREHEADER without a BITMAPINFOHEADER object being present.

IMAGE 29 – BMP header with core header pixel width (Yellow) and height (green) highlighted

By understanding the equation used to calculate the image data size, outlined under section 2.2.2.9, we can manipulate the image data size to the one we desire. With monochrome images this is simple as bits per pixel is 1.

Equation 1 = Size in bytes when compression value is BI_RGB, BI_BITFIELDS or BI_CMYK

By doing this, we can extend the data segment to hide our sub-type data. This does amount to image data tampering and will affect the original image. In the example below, we alter the width value from 0x0D to 0xE0 (stored little-endian as the bytes 0D 00 and E0 00), which according to the equation changes the image data size from 28 bytes to 196 bytes. This creates additional space in the image pixel data which we can then populate with our sub-type file, in this case a ZIP file. This corrupts the original image content but creates a specification-adherent BMP file with embedded ZIP, which works as a parasitic polyglot.

IMAGE 30 – Original BMP image

IMAGE 31 – Image data expanded with ZIP data BMP

IMAGE 32 – BMP image data width (Yellow) value altered from 0x0D to 0xE0 to store zip file in image data padding
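The size calculation behind this example can be reproduced in a few lines. The sketch below uses the uncompressed size formula as given in the MS-WMF documentation (each row rounded up to a multiple of 32 bits); the function name `dib_data_size` is hypothetical, and the height of 7 rows is an assumption inferred from the 28-byte original size rather than a value stated in the text.

```python
def dib_data_size(width: int, height: int, bit_count: int, planes: int = 1) -> int:
    """Size in bytes of uncompressed image data, with rows padded to 32 bits."""
    row_bits = width * planes * bit_count
    row_bytes = ((row_bits + 31) & ~31) // 8  # round each row up to a 4-byte boundary
    return row_bytes * abs(height)


# Monochrome image (1 bit per pixel); height of 7 is an assumed value.
original = dib_data_size(width=0x0D, height=7, bit_count=1)
expanded = dib_data_size(width=0xE0, height=7, bit_count=1)
cavity = expanded - original  # extra bytes available for sub-type data
```

With these inputs the original width of 13 pixels needs 4 bytes per row and the altered width of 224 pixels needs 28 bytes per row, giving the 28-byte and 196-byte totals quoted above and 168 bytes of additional space.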

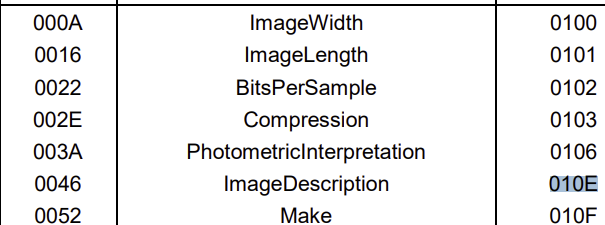

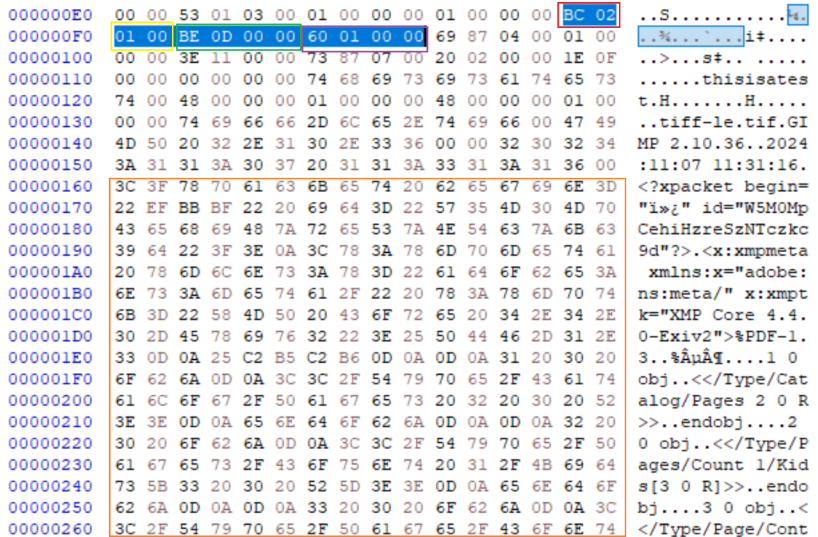

TIFF

UNDEFINED content

TIFF poses a particular challenge when dealing with parasitic polyglots due to its support post TIFF 6.0 for arbitrary field contents with type “UNDEFINED” in the Image File Directory (IFD) (page 16 of TIFF specification). In practice, this allows for an IFD to specify segments of the file which can have arbitrary content which fully supports all sub-type files. Additionally, due to the way TIFF works, these segments can be located anywhere in the document and their associated size and offsets specified only in the IFD.

Due to these factors, it is relatively easy to add sub-type file data to an existing TIFF file, either at the end of the document or between existing segments, by modifying the IFD. The latter method requires manipulation of the existing IFD entries that have offsets after the injected entry, as their offsets will need to be recalculated based on the injected data size, but this can be achieved to result in a fully integrated polyglot.

In the examples below, we have inserted a PDF to the start of the document and a ZIP file to the end of the document. In the case of the PDF, the IFD entry specifies the undefined data segment to start at byte offset 0x8 and therefore well within the strictures of the PDF header allowance of 1KB.

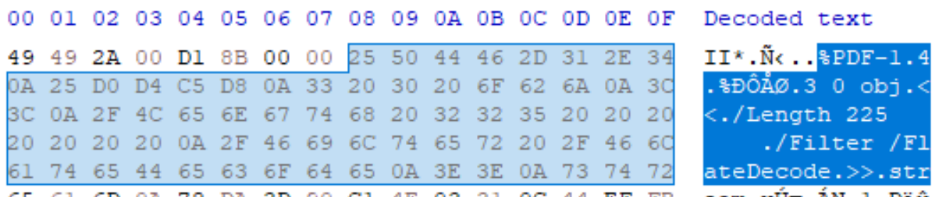

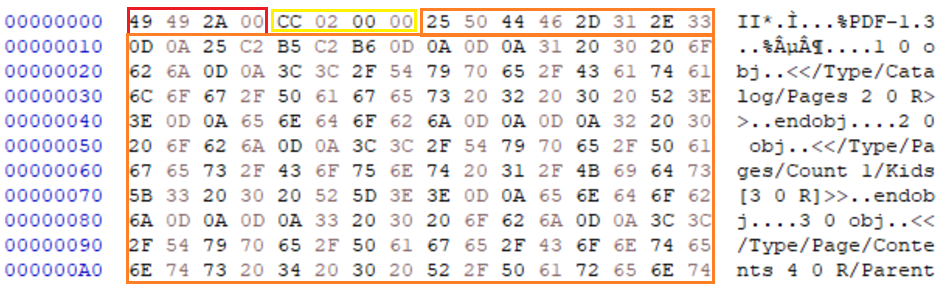

IMAGE 33 – PDF data stored within TIFF structure at offset “0x08”

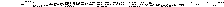

IMAGE 34 – IFD entry showing tag 0xDDDD (Red) which is custom, 0x07 type (Yellow) “undefined”, size 0x8BC9 (Green) segment at offset “0x08” (Purple)

In the case of the ZIP, whereas this would typically result in a stack polyglot, we have added an IFD entry to the end of the document to encapsulate the ZIP file segment, effectively changing a stack polyglot into a parasite by adding an entry to the IFD.

As the EOCD of ZIP files can start anywhere within the last 65,557 bytes of the file (the 22-byte fixed record plus a comment of up to 65,535 bytes), there is considerable scope for injection. As such, by adding an undefined segment to the end of the file or anywhere within this byte range, we can insert the EOCD within the defined segment, with TIFF EOF padding, and still achieve integration of the archive structure.

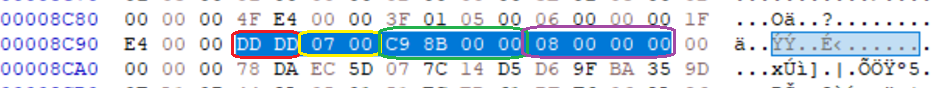

IMAGE 35 – IFD entry showing 07 type “undefined” segment at offset “0x5850” in Little Endian, PK header indicating ZIP sub directory data beginning at offset “0x5850” immediately after IFD in orange
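The tolerance described above can be checked by searching backwards for the End of Central Directory signature within the trailing window. This is a minimal sketch: `find_eocd` is a hypothetical helper, the window is the 22-byte fixed record plus the 65,535-byte maximum comment, and the acceptance rule (comment length must fit inside the file, trailing bytes allowed) is an assumption that mirrors lenient readers rather than any particular tool.

```python
EOCD_SIG = b"PK\x05\x06"
EOCD_MIN = 22          # fixed-size part of the End of Central Directory record
MAX_COMMENT = 0xFFFF   # the comment length field is 16 bits wide


def find_eocd(data: bytes) -> int:
    """Return the offset of a plausible EOCD record near the end of data, or -1."""
    window_start = max(0, len(data) - (EOCD_MIN + MAX_COMMENT))
    pos = data.rfind(EOCD_SIG, window_start)
    while pos != -1:
        if pos + EOCD_MIN <= len(data):
            comment_len = int.from_bytes(data[pos + 20:pos + 22], "little")
            if pos + EOCD_MIN + comment_len <= len(data):
                return pos
        # Keep searching for an earlier candidate inside the window.
        pos = data.rfind(EOCD_SIG, window_start, pos)
    return -1
```

A non-negative result on a file whose declared type is an image is a strong hint that an archive has been stacked onto or injected into it.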

In both cases, as the sub-type file data has been injected within a component of the TIFF IFD structure, they are considered legitimate components. Undefined segments are within specification and are typically used for vendor specific image handling software.
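To make the IFD layout concrete, the sketch below reads the first IFD of a classic TIFF and returns every entry whose field type is 7 (UNDEFINED). The name `undefined_entries` is hypothetical, only the first IFD is read, BigTIFF is not handled, and the value field is reported as-is (it is an offset only when the data is larger than four bytes).

```python
import struct


def undefined_entries(data: bytes):
    """Return (tag, count, value_or_offset) for type-7 entries in the first IFD."""
    order = {b"II": "<", b"MM": ">"}.get(data[:2])
    if order is None or struct.unpack_from(order + "H", data, 2)[0] != 42:
        raise ValueError("not a classic TIFF header")
    (ifd_offset,) = struct.unpack_from(order + "I", data, 4)
    (entry_count,) = struct.unpack_from(order + "H", data, ifd_offset)
    found = []
    for i in range(entry_count):
        # Each IFD entry is 12 bytes: tag, field type, value count, value/offset.
        tag, field_type, count, value = struct.unpack_from(
            order + "HHII", data, ifd_offset + 2 + 12 * i)
        if field_type == 7:  # UNDEFINED: arbitrary bytes
            found.append((tag, count, value))
    return found
```

Entries returned here are legitimate under the specification, so a mitigation would need to decide by policy (for example, an allow-list of expected tags) rather than by validity alone.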

Padding data

As TIFF relies on the IFD to specify image file segments including information on their location (offset) and size, it creates an easy mechanism to create gaps/cavities in the file. As an example, if we change the offset of the last entry in the IFD to increase by 100 bytes and move the contents to this new offset, the 100 bytes introduced between the final and penultimate entry is now a cavity. As a TIFF interpreter only processes elements within defined IFD segments, the gap is ignored and is also not in breach of the specification. This means that we can create any cavity size we want which can be populated with arbitrary data within a TIFF file by manipulating the IFD to facilitate sub-type file injection.

IMAGE 36 – IFD of TIFF file, offset to image data value with tag 0x111 (Cream) is 2 entries from the end. Indicates image data begins at offset 0x20E, creates cavity between header IFD offset and data start of 0x206 bytes.

IMAGE 37 – PDF data inserted immediately after the TIFF header specifying the initial IFD at offset “0x02CC”, in padding space between header and strip data at “0x020E” in orange

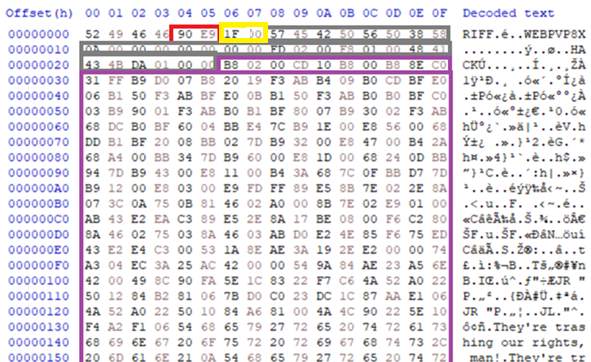

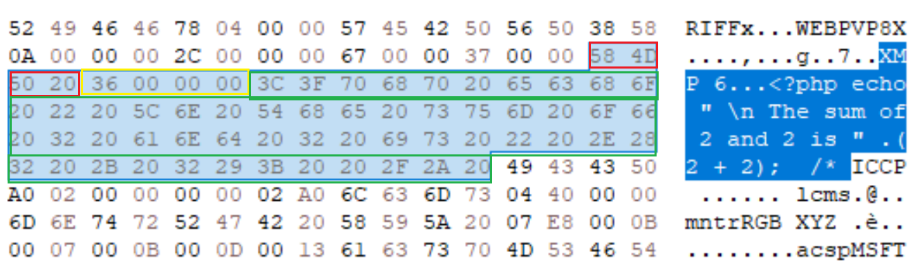

WEBP

Chunk addition

WEBP image files are created in a Resource Interchange File Format (RIFF), which is like a TIFF file construct with chunks/segments of data which refer to each other. Instead of using IFDs, RIFF chunks specify their individual sizes only, and the interpreter reads them in sequence using the size specification and chunk identifiers to determine what to do with each chunk and when they end. In the case of WEBP, if we are using an Extended File Format (VP8X), which accounts for the inclusion of fields such as EXIF or vendor specific metadata, we can add “unknown chunk” types. This means that FourCC values, or chunk identifiers, outside the core requirements of the WEBP format are permitted when using VP8X. Effectively, this allows RIFF chunks of vendor-specific ancillary data to be added which are ignored by interpreters and can contain arbitrary data.

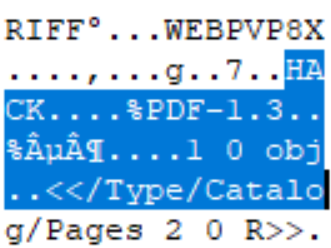

As with all other formats, ancillary data segments allow the injection of any sub-type file we want, provided that the sub-type file's header location requirements are met and the encoding is within the constraints of the ancillary segment. The use of RIFF chunks in this case allows us to add the ancillary data at any location in the file, provided the defining RIFF chunk (header) is located at offset 0. This means we can use these ancillary chunks to inject both top offset and bottom offset sub-type files such as PDF and archive formats (ZIP). In the example below, a PDF is injected into a WEBP image by adding a “HACK” chunk to the WEBP RIFF structure immediately after the header, creating a functional WEBP-PDF polyglot.

IMAGE 38 – WEBP RIFF chunk “HACK” containing PDF sub-type file
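A sequential chunk walk of the kind described above can be sketched as follows. The helper names `webp_chunks` and `unknown_chunks` are hypothetical, and the `KNOWN_FOURCC` set reflects the chunk identifiers defined by the WebP container documentation as understood at the time of writing; treat it as an assumption to verify rather than an authoritative list.

```python
import struct

KNOWN_FOURCC = {b"VP8 ", b"VP8L", b"VP8X", b"ALPH", b"ANIM", b"ANMF",
                b"ICCP", b"EXIF", b"XMP "}


def webp_chunks(data: bytes):
    """Yield (fourcc, payload_offset, payload_size) for each chunk after the header."""
    if data[:4] != b"RIFF" or data[8:12] != b"WEBP":
        raise ValueError("not a WEBP RIFF container")
    pos = 12
    while pos + 8 <= len(data):
        fourcc = data[pos:pos + 4]
        (size,) = struct.unpack_from("<I", data, pos + 4)
        yield fourcc, pos + 8, size
        pos += 8 + size + (size & 1)  # odd-sized payloads carry one padding byte


def unknown_chunks(data: bytes):
    """Return chunks whose FourCC is outside the known WEBP set."""
    return [c for c in webp_chunks(data) if c[0] not in KNOWN_FOURCC]
```

Applied to the file shown in the image above, a walk like this would be expected to surface the “HACK” chunk together with the offset and size of its payload.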

OP code and script code injection

WEBP header identifier “RIFF” has the benign opcode translation of:

- R = 52 = push dx

- I = 49 = dec cx

- F = 46 = inc si

- F = 46 = inc si

Researcher Fanda Uchytil[5], going by the handle “H4X”, leveraged this benign capability and the customisable nature of the RIFF container size (the following 4 bytes) to create a bootable WEBP file, or WEBP-MBR polyglot. This is done by adding a “call”, “jump” or “loop” opcode in the RIFF size (opcode = “E8”, “E9” or “E2” respectively), immediately followed by a location specifier pointing to a RIFF chunk containing the Master Boot Record (MBR) of the hidden payload.

In their documented use case, they decided to leverage the “dec cx” opcode in the header, which decreases the cx register (a loop counter) by 1. The opcode “E2” specifies “LOOP”[16], which checks the cx register and, if the value is not 0, jumps to the location given by the following specifier. As the cx register is likely to be reset to 0 on load in most systems, or to hold an arbitrary value on systems which do not reset it, the odds of the cx register starting at a count of 1 are very low.

In the example created for external evaluation, they used a “NOP” (0x90) code and the “JMP” (0xE9)[16] operator to simplify things. If the MBR signature “0x55AA” is located at byte offset 510, the system firmware will load the 512-byte sector ending with that signature into memory at 0x7C00. The loaded code is then executed and, with the JMP code in place, execution skips over the WEBP and VP8X chunks and goes straight to the MBR data stored in the HACK chunk.

IMAGE 39 – MBR inserted into chunk “HACK” within WEBP file. 0x90 “NOP” and 0xE9 “JMP” code (red) inserted as WEBP size to make MBR code block(purple) compatible with RIFF header placement and ignore header (grey)

The exploit is heavily involved but does provide an example of how offensive shellcode can be injected into such a file with actionable consequences, such as boot capability.

Another article[6] by the same researcher highlights how the RIFF size parameter can be abused to insert comment specifiers for various script block types, akin to Image 28. In one example, they adapt the 4-byte size specifier to “ =’ “ to capture the WEBP and VP8X chunks as string values of variable “RIFF”. This allows the WEBP file to be run as several different script types, including Ruby or even Python, if the script block has the appropriate termination command within it.

IMAGE 40 – WEBP RIFF size adapted to “ =; “ with Ruby script in chunk “HACK” containing string terminator and appropriate script terminator “exit(0)”

Metadata structures

There are defined structures for supported metadata types, which can be installed within certain file types. These metadata structures allow for more complex metadata inclusion, which is typically important for files which may rely on this data for rendering or post processing image or video files. However, these metadata structures are also commonly supported by office document formats as well.

As these structures are designed for metadata storage, they are tailored towards vendor specific or detail/text data storage, which commonly permits arbitrary data storage with the expectation that the rendering software will handle/validate or ignore the content as appropriate. This loose restriction on content can make these metadata structures a prime target for sub-type polyglot file injection.

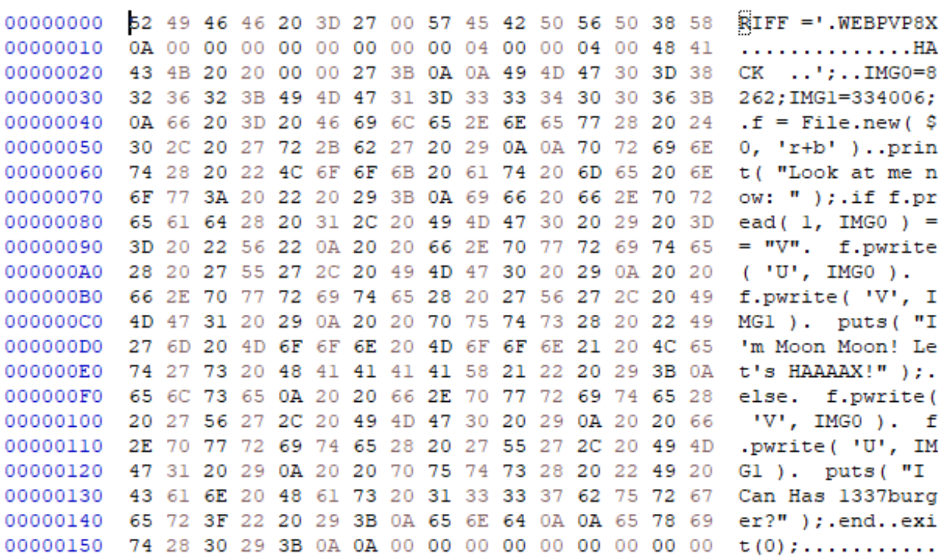

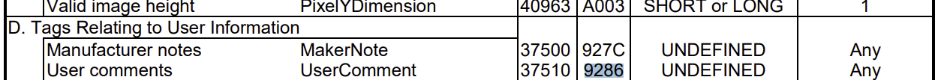

EXIF

Exchangeable image file format (EXIF) is a commonly used metadata format across several file formats, which can be used to store arbitrary data in several formats. As EXIF is essentially a TIFF database, it is relatively easy to alter its contents in a live file without resulting in a corrupt file. As EXIF is largely ignored by interpreters during rendering or supported in a format to specifically store vendor-specific data, custom fields and data entries are expected and overlooked for compatibility reasons, making this a prime target for sub-type file injection.

Ancillary fields

EXIF specification allows for specific data sections to be added to the EXIF structure to be used as metadata, each of which supports different data types. Three of the primary types are “Image Description” outlined in Table 37 of the EXIF specification DC-008 and “MakerNote” or “UserComment” outlined in Table 7. The specification for EXIF is extensive, and so this is not exhaustive. However, the field types outlined are valuable as they allow for arbitrary data content and can be populated to “ANY” size, typically not exceeding 64KB in theory and 1KB in practice.

IMAGE 41 – Table 37 of EXIF specification outlining “ImageDescription” field key

IMAGE 42 – Table 7 of EXIF specification outlining “MakerNote” and “UserComment” field key, content type and size

By using tools such as “exiftool”, the injection of an EXIF structure into the APP1 data segment of a JPEG image file, as an example, is trivial, and it can be populated with any data type we want. As the EXIF segment does not have a size specifier, this means that we can in practice use the tool to create the EXIF segment, find the populated field and then copy and paste any malicious data we would like via tools such as a hex editor. In the example below I have inserted a PDF file into a JPEG via the “UserComment” segment of the EXIF by adapting the TIFF, achieving a parasite polyglot.

IMAGE 43 – Example of EXIF “UserComment” field content being replaced with sub-type polyglot file

Segment injection

As EXIF is a TIFF structure, it introduces all the same risks that a TIFF file permits regarding polyglots, such as TIFF IFD manipulation for segment injection or padding. As EXIF is treated as metadata and not actively processed, there is no detrimental effect to the host file if it is a legitimate TIFF structure. As an example, the WEBP RIFF format with VP8X allows for EXIF to be added anywhere within the file after the header. As such, the ease of manipulating the EXIF structure creates a prime target for sub-type injection. The WEBP specification also outlines that interpreters should only process the first EXIF chunk encountered; all subsequent chunks are ignored and so can be abused with greater impunity.

IMAGE 44 – EXIF chunk added to EOF to host ZIP file successfully

XMP injection

Extensible Metadata Platform (XMP) is a metadata structure developed by Adobe which can be used to store arbitrary metadata within specific file types. Supported images are outlined under section 1.1 of the XMP specification part 3.

Two specific things to note on the format of XMP are that Note 6 under section 6.1 of the XMP specification part 1 outlines that:

“Users of XMP are allowed to freely invent custom metadata”

and section 7.3.3 attests:

“for the x:xmpmeta element, XMP processors may write custom attributes. Unknown attributes shall be ignored when reading.”

From these two points we can assert that XMP allows for fully custom fields to be created within the structure of the XML and that additions to the “xmpmeta” elements in the XMP, which are not of known type, will be ignored but are not outside specification scope and so will not cause corruption/rejection.

Content substitution

As XMP is for metadata storage and expects XML, anything that is not in this format or is not named correctly is ignored. Therefore, XMP can be populated with anything in UTF-8 format and if it is not XML the interpreter just ignores the content entirely. This opens the door to content injection of sub-type file contents effectively anywhere within the XMP structure.

JPEG

JPEG supports XMP as an APP data segment. It is the only segment which can have a repeated ID of APP1, which is used for both XMP and EXIF. This configuration of XMP allows for a segment data size of up to 65.4KB which is more than sufficient for most sub-type files. In the example below, an XMP has been added at the start of the document which hosts a PDF file:

IMAGE 45 – XMP segment hosting PDF sub-type supported file

GIF

GIF XMP injection is achieved via Application Extension additions, with the XMP data added as application data sub-blocks. Each sub-block consists of a size specifier byte followed by a data block with a size limit of 255 bytes. As such, every 255 bytes, a new block is required, which also requires a size specifier. This necessity of a value specifier can cause issues depending on the sub-type file being injected, which may not tolerate such values. However, in practice, the only requirement is that the sub-block size specifier encountered after each block is not 0, which can be achieved with minor alterations or, in most cases, needs no alteration at all.

Once the file has been injected, the author must find the final sub-block's size specifier and pad the content to the end of the sub-block to ensure it conforms to specification. The “magic trailer” required at the end of an XMP Application Extension is a sub-block closing mechanism and will handle the rest by forcing alignment. In the example below, an XMP is created at the beginning of the file with a PowerShell script. To make sure this executes as expected, a comment chunk is added to the end of the document to comment out the data block.

IMAGE 46 – XMP chunk injection into GIF hosting PS1 script block working polyglot
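The generic GIF data sub-block framing referred to above (one size byte, up to 255 data bytes, repeated until a zero-length block) can be sketched as below. The function names are hypothetical, and this shows the general framing rule only; it does not reproduce the XMP “magic trailer” mechanism, which has its own layout.

```python
def to_sub_blocks(payload: bytes) -> bytes:
    """Frame payload as GIF data sub-blocks followed by a block terminator."""
    out = bytearray()
    for start in range(0, len(payload), 255):
        block = payload[start:start + 255]
        out.append(len(block))  # size specifier byte (1..255)
        out += block
    out.append(0)               # zero-length block terminates the sequence
    return bytes(out)


def from_sub_blocks(stream: bytes) -> bytes:
    """Reassemble the payload by following size bytes until a zero is reached."""
    out = bytearray()
    pos = 0
    while stream[pos] != 0:
        size = stream[pos]
        out += stream[pos + 1:pos + 1 + size]
        pos += 1 + size
    return bytes(out)
```

The framing makes the constraint visible: a parser following size bytes only stops at a zero, so any byte that lands in a size position must be non-zero for the walk to continue past it.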

PNG

XMP in PNG is stored as a chunk with type “iTXt” using the keyword “XML:com.adobe.xmp” for identification by parsers. As this follows the same rules as any other chunk, there is a 4-byte length specifier for the chunk data (excluding the length, type, and CRC fields), meaning it can in principle reach approximately 4.29GB; the PNG specification caps the length at 2^31 − 1 bytes (roughly 2.1GB).

IMAGE 47 – XMP inserted into iTXt chunk containing functional PHP script polyglot
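Locating such a chunk only requires walking the PNG chunk sequence and matching the keyword at the start of each iTXt body. The sketch below assumes the keyword defined in the XMP specification part 3 (“XML:com.adobe.xmp”); the helper names are hypothetical and the CRC of each chunk is skipped rather than verified.

```python
import struct

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"
XMP_KEYWORD = b"XML:com.adobe.xmp"


def png_chunks(data: bytes):
    """Yield (chunk_type, body) for each chunk; CRC values are not checked."""
    if data[:8] != PNG_SIGNATURE:
        raise ValueError("missing PNG signature")
    pos = 8
    while pos + 12 <= len(data):
        (length,) = struct.unpack_from(">I", data, pos)  # big-endian data length
        chunk_type = data[pos + 4:pos + 8]
        yield chunk_type, data[pos + 8:pos + 8 + length]
        pos += 12 + length  # length field + type + data + CRC


def xmp_text_chunks(data: bytes):
    """Return the bodies of iTXt chunks that carry the XMP keyword."""
    return [body for chunk_type, body in png_chunks(data)
            if chunk_type == b"iTXt" and body.startswith(XMP_KEYWORD + b"\x00")]
```

Everything after the keyword and the iTXt header fields is free-form text as far as an image renderer is concerned, which is where the PHP block in the image above resides.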

TIFF

TIFF adds XMP as a segment in the primary IFD of the file under the tag value “0x02BC” with a field type of either undefined or byte, which allows for arbitrary data hosting. The only unique identification of the XMP in this case is the tag value used for the IFD segment entry, which can be searched for. Interpreters use this tag to understand that the content should be treated as XMP XML. If the interpreter does not handle XMP, or the content is corrupt, it is ignored by the interpreter.

IMAGE 48 – TIFF IFD entry with tag 0x02BC (in LE) pointing to begin at offset 0x0160 (in LE) containing XMP and embedded PDF sub-type document

WEBP

Adding XMP or EXIF to WEBP is achieved by altering the WEBP format to the extended format “VP8X” and altering the extended file format flags so that bit 6 is assigned a “1”, indicating XMP metadata presence. The metadata is then added as a chunk to the file with the chunk header or fourCC value being “XMP “. The chunk is then populated with the UTF-8 encoded XMP metadata, which is interpreted by appropriate systems or ignored by incompatible systems.

IMAGE 49 – XMP chunk addition at top of file after VP8X chunk, contains active PHP script

SVG

XMP is added under the “metadata” field in the XML structure of SVG files which we know is already an arbitrary data location in this file type. As the XMP is just a standardized extension of the metadata handling using specified fields and having capacity to link out to default field values (title, description), there is no additional context XMP provides for exploitative purposes beyond that of metadata field handling, which is already overly broad.

IMAGE 50 – SVG file with XMP metadata hosting live PHP script block

Document formats

Polyglots using document type files as base formats are more complex due to the wider range of available features and internal structures, which can potentially be exploited for sub-type injection.

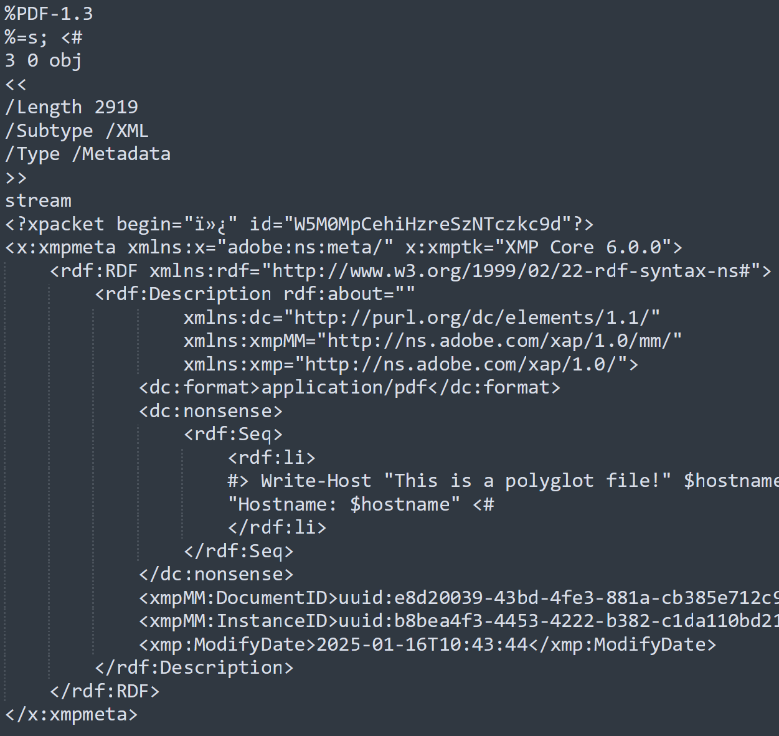

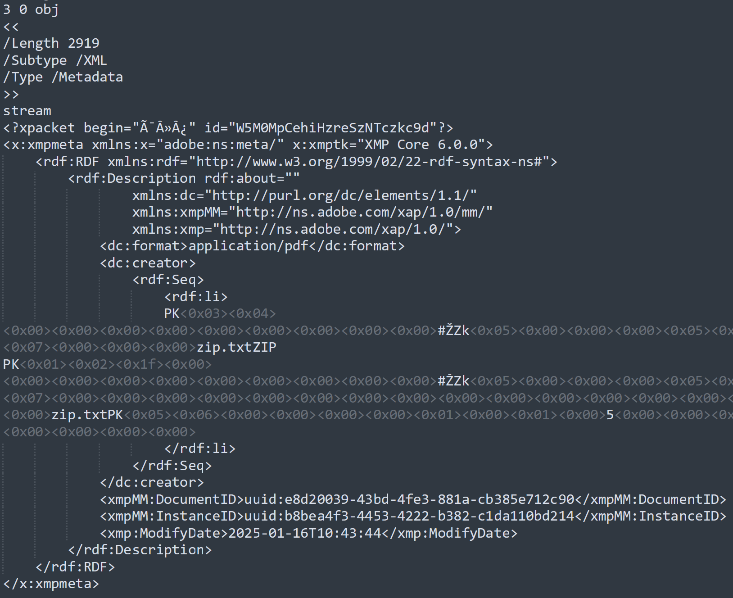

Metadata Stream XML (Default and custom)

Metadata can be added to a PDF document by creating a “Metadata” stream type XObject. This XObject has a type of “Metadata” and subtype of “XML” outlined under section 14.3.2 of the PDF specification. These streams can be attached to the document’s primary structure as additional information or to individual objects. When attached to objects, metadata can be referenced within the document structure making them actionable elements/data which make up a specific functionality in the rendering of the document.

As the metadata content of the stream must only adhere to the XML grammar and XMP specifications, as with other uses of XMP, it allows for arbitrary content injection. There are some keywords which are associated with metadata attributes highlighted in section 14.3.3, which must be avoided, however XMP allows for custom attributes to also be added. This means attackers can add any content they want, if the character set is within the XML/XMP specification limits, and it will be perceived as acceptable metadata.

IMAGE 51 – PDF metadata stream with custom “nonsense” attribute hosting ps1

IMAGE 52 – PDF metadata stream hosting PK zip file within “creator” attribute

Metadata Document Information Dictionary

In older versions of PDF, following section 14.3.3 of the PDF specification, document level metadata could be defined in a document information dictionary. In later versions, this was deprecated for all metadata besides “mod date” and “creation date”. However, it can still be added and interpreted correctly by most rendering systems. Multiple fields in this dictionary take “text string” as the data type again allowing for arbitrary data injection.

IMAGE 53 – PK zip injected into “Author” Information Dictionary

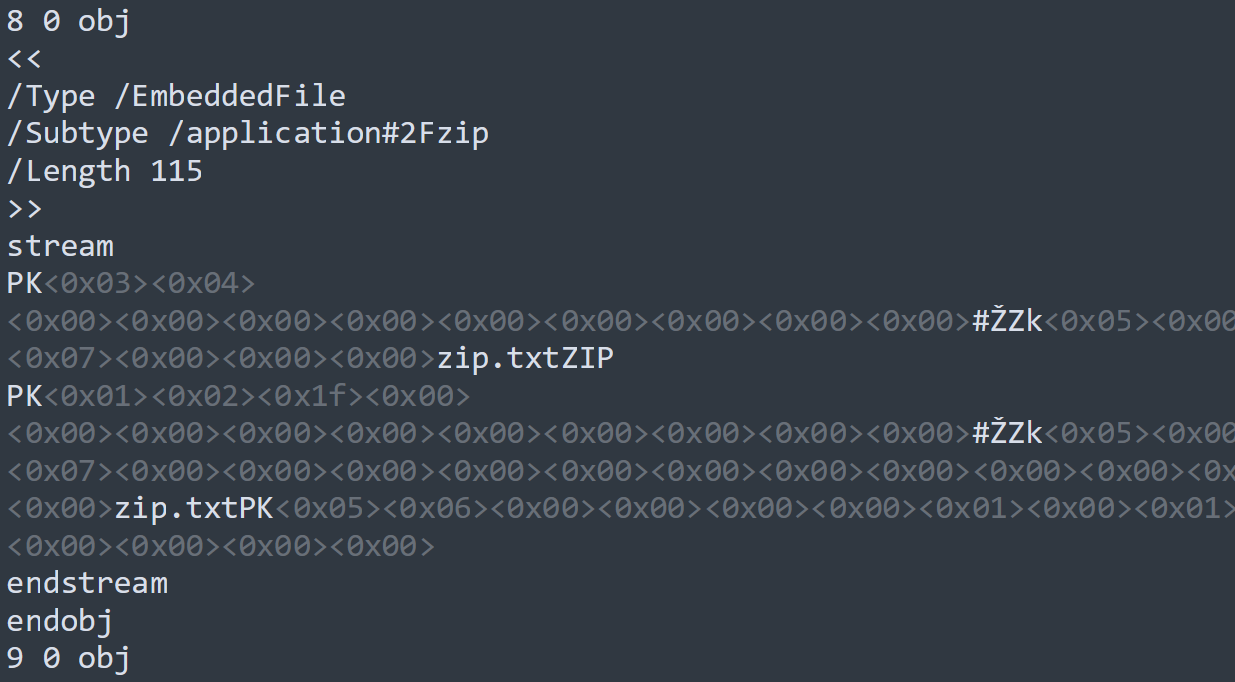

Textual embedding (body opacity 0, page colour match, overlapping)

One of the most logical methods to embed a secondary file in a textual document is to embed the hidden data as part of the content of the document itself. This can be achieved relatively easily in PDF, as page content can be overlaid via page pixel reallocation for current printing and then changing the content, such as text drawn via the Tj command operator, to be fully transparent (via a saved graphics state) or matching the text colour to the page background colour, as some examples. Another method is to hide content behind other objects via occlusion, such as overlaying, which works by overlapping objects via co-ordinate jump operators (such as Td).

This works on the premise that page content information is context-based and is hard to spot as being malicious from an automated perspective. By occluding the content, the author aims to hide the visual page content from the human user, so they are not aware of the sub-type content and, as such, do not determine something to be amiss. As the content of the page is within the specified definitions of how page content, such as text, can be defined, it is permitted as being within specification even if it makes no logical sense, and it allows potentially malicious sub-type injection depending on where the page content XObject resides in the file.

IMAGE 54 – PowerShell script block injected into page content text object (BT), aligning text to location (Td) and then altering text colour fill to white (rg)

IMAGE 55 – Zip injected into page content text object (BT), aligning text to location (Td) and then altering text colour fill to white (rg)

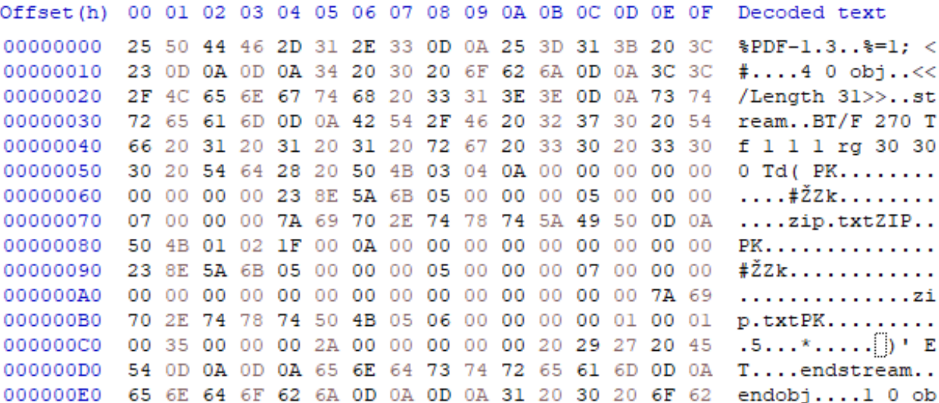

Unfiltered embedded file

Embedded files can allow files with structures which do not require 0 offset, or script blocks of exception tolerant languages (or which can be paired with comment block character injection), to be injected arbitrarily in the host PDF. The PDF specification section 7.11.4 outlines the requirements for defining embedded files, which are included via “EF” or “EmbeddedFiles” entries. The general information on embedded files shows that although a “Length” for the embedded file must be defined as a standard stream parameter, “Filter” (which specifies the compression method) is optional, meaning the embedded file can be inserted without alteration/compression. This allows the embedded file to maintain all its key characteristics, such as Magic Byte identifiers, segment definitions and even clear text content in some formats.

As two easy examples, we can embed both a ZIP file and a TXT file into the PDF host to create effective polyglots, which are both active archive files and executable scripts, respectively. If the ZIP-embedded object is located near the end of the document or within the ZIP archive offset tolerance, it will decompress properly and the PDF will have no observable difference. For the script embedding, as the embedded file content is not parsed by the PDF rendering system, it can contain any characters the author desires, allowing limitless script block injection possibilities. Accounting for embedded script block location and potential exceptions when being interpreted by the language engine is a separate issue and may require comment block code injections.

IMAGE 56 – ZIP embedded uncompressed in PDF, creates PDF-ZIP polyglot

IMAGE 57 – TXT embedded uncompressed in PDF; script content can be executed from embedded content clear code with comment block value added to beginning of file. Creates PDF-PS1 polyglot

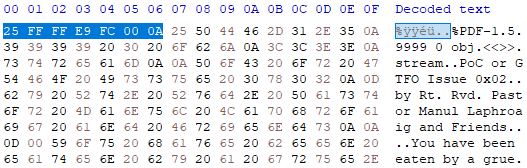

PDF version alteration – MBR injection

One of the more exotic polyglot injection methods previously outlined is that of MBR sub-type injection. This is also available in PDF formats as the PDF header “%PDF-1.“ corresponds to the benign 16-bit OP codes:

IMAGE 58 – “%PDF-1.“ ASCII translated into 16-bit OP code

These are operationally safe op codes which, when executed, will not perform any action that triggers an error. However, as with all MBR injection, it is important to trigger a jump to the relevant memory section and avoid interacting with the PDF XObject definitions. To do this, the PDF version information is altered to store the required CALL, JMP, LOOP etc. code to achieve the necessary execution pointer movement.

IMAGE 59 – PDF header is present but is missing required version number immediately following

Most interpreters do not require the version information and instead render the document based on the contained features due to specification iterations being additive not regressive (although not always the case). Due to this, the removal of the PDF version does not impact usage of the file.
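Because interpreters tolerate a missing version, a defensive parser can treat it as a signal worth flagging. The sketch below is a minimal, assumption-laden example: the function name `pdf_header_version` and the single-digit `major.minor` pattern are our own choices for illustration, not a rule taken from any specific interpreter.

```python
import re

# Assumed pattern: "%PDF-" followed by a single-digit major and minor version.
VERSION = re.compile(rb"%PDF-(\d)\.(\d)")


def pdf_header_version(data: bytes):
    """Return (major, minor) when a well-formed version follows the header.

    Returns None when the header is present but the version is missing or
    has been overwritten, which is the condition described above.
    """
    start = data[:1024].find(b"%PDF-")
    if start < 0:
        raise ValueError("no PDF header in the first 1024 bytes")
    match = VERSION.match(data, start)
    if match is None:
        return None
    return int(match[1]), int(match[2])


if __name__ == "__main__":
    print(pdf_header_version(b"%PDF-1.7\n"))         # well-formed header
    print(pdf_header_version(b"%PDF-1.\xeb\x3c\n"))  # version byte replaced
```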

Duplicate keyword intolerant

Although this is not a polyglot injection method, it is worth being aware that PDF files which contain multiple instances of keywords, even if they reside within XObject streams, can corrupt the file type determination or parsing process. In the example below, the tool “Mitra” has been used on the POC||GTFO version 23 document to inject multiple file signatures and keyword patterns, which can confuse file type determination systems. One of the injected patterns is a secondary PDF document, which includes its own “trailer” keyword. From this, we can determine that clear-text embedding of some file types/contents can potentially hinder polyglot deployment operations, or cause the file to be rejected due to error, depending on the intended target of the polyglot.

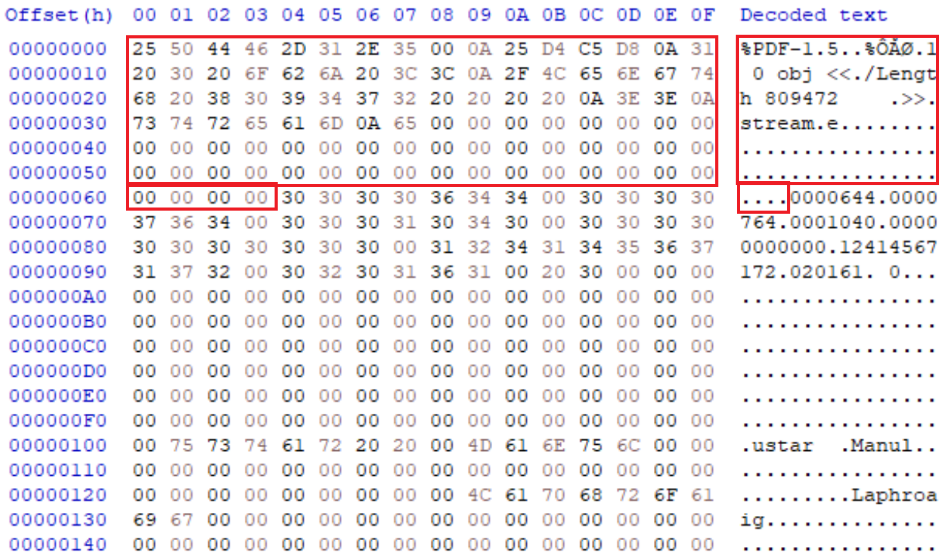

IMAGE 60 – PDF host with secondary PDF keywords and other identifiers injected into XObject stream

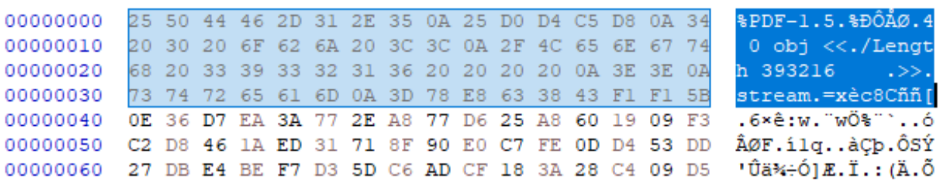

Pre-header data padding

Section 3.4.1 of the PDF reference 1.7[7] and prior specifies that the header for PDF files must be located within the first 1024 bytes of the file to be interpreted correctly. This stipulation was later removed in the ISO 32000-1:2008 specification for the PDF file. However, PDF interpreters still adhere to this allowance for legacy compatibility. This means that, between the official specification and PDF interpreter operations, there is a disparity of 1024 bytes in the header location. This is a prime location for file injections, effectively converting the PDF into a sub-type. This allowance is what makes PDF files so capable as a sub-type in polyglots, as the offset tolerance allows almost any base file type's header and structure to be defined before the PDF content data is injected. Combined with PDF XObjects being offset based rather than sequentially located, it allows for a complex deployment.

In the example below, an MBR is injected into the first 512 bytes of the file by using this header buffer to inject OP codes that perform jumps on boot to an appropriate memory sector. The PDF header is located at offset 0x07.

IMAGE 61 – PDF document with MBR opcode injected into header buffer space

IMAGE 62 – PDF header buffer contents translated into OP code x16 architecture
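A simple way to surface this condition is to report where the PDF header actually sits and what precedes it. The following Python sketch is illustrative only: `pre_header_report` is a hypothetical helper, and the check for the conventional 0x55 0xAA boot signature at offset 510 is our own addition, not something taken from the sample shown in the images.

```python
def pre_header_report(data: bytes) -> dict:
    """Describe any content placed ahead of the PDF header.

    Only the first 1024 bytes are searched, matching the legacy allowance
    that interpreters still honour.
    """
    window = data[:1024]
    offset = window.find(b"%PDF-")
    return {
        # -1 means no header was found inside the 1024-byte window.
        "header_offset": offset,
        # Bytes an interpreter skips before reaching the header.
        "leading_bytes": window[:offset] if offset > 0 else b"",
        # Assumption: a bootable sector ends with the bytes 0x55 0xAA.
        "mbr_boot_signature": data[510:512] == b"\x55\xaa",
    }


if __name__ == "__main__":
    prefix = b"\xeb\x3c\x90\x90\x90\x90\x90"  # 7 placeholder bytes ahead of the header
    sector = (prefix + b"%PDF-1.7\n").ljust(510, b"\x00") + b"\x55\xaa"
    print(pre_header_report(sector)["header_offset"])
```

A non-zero `header_offset` is not malicious in itself, but it marks exactly the buffer space that this technique relies on.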

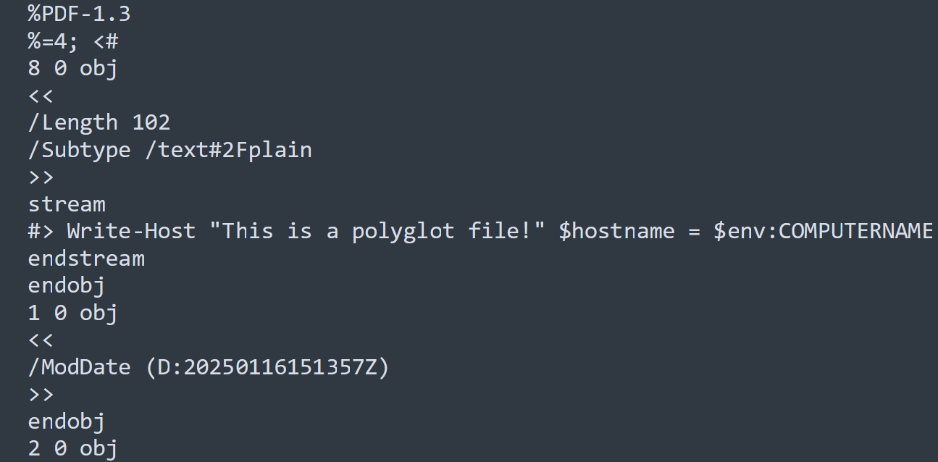

XObject injection with no link/reference

One of the simplest methods to inject into the PDF format is to create an XObject with a length specifier for the stream and then populate the stream with the sub-type file content. As the PDF structure allows as many XObjects as desired, and they do not need to be used/referenced, this permits abuse. As the XREF table can be altered to accommodate these additions post file creation, this is a relatively simple addition. However, the XREF itself can also be completely corrupt and the PDF will still be permitted by most rendering systems due to their robust capabilities.

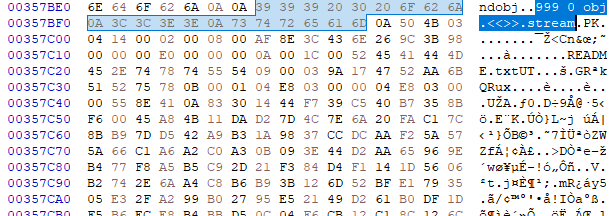

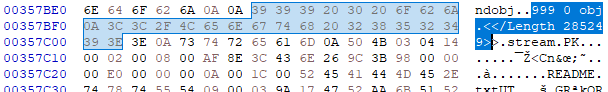

IMAGE 63 – XObject with ID 999, stream contains “PK” ZIP file contents

In the example above, we can see that the PDF file has an XObject with the reference ID 999. This contains a stream which in turn contains a ZIP file's data for the sub-type polyglot. However, the sample was considered corrupt because the stream's “length” value was missing from the XObject dictionary. This shows that creation of such polyglots still requires care to avoid rendering system errors. To create a properly formatted XObject, we repaired the file by adding the required length value to its object dictionary.

IMAGE 64 – XObject with corrected length parameter
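The missing length value can be checked for mechanically. The sketch below is a deliberately naive, regex-based approximation rather than a real PDF parser: the name `streams_missing_length` is hypothetical, and binary stream data that happens to contain object keywords would confuse it.

```python
import re

# Match "<id> <gen> obj", then the dictionary text up to the "stream" keyword,
# without being allowed to run past an "endobj" into the next object.
STREAM_OBJECT = re.compile(
    rb"(\d+)\s+\d+\s+obj\b((?:(?!endobj).)*?)stream\r?\n",
    re.DOTALL,
)


def streams_missing_length(data: bytes) -> list:
    """Return the IDs of stream objects whose dictionary has no /Length key."""
    return [
        int(match[1])
        for match in STREAM_OBJECT.finditer(data)
        if b"/Length" not in match[2]
    ]


if __name__ == "__main__":
    sample = (
        b"%PDF-1.7\n"
        b"1 0 obj\n<< /Type /Catalog >>\nendobj\n"
        b"999 0 obj\n<< >>\nstream\nPK\x03\x04placeholder\nendstream\nendobj\n"
        b"5 0 obj\n<< /Length 3 >>\nstream\nabc\nendstream\nendobj\n"
    )
    print(streams_missing_length(sample))
```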

VeraCrypt/TrueCrypt Encrypted container injection

Encrypted containers, such as those created by TrueCrypt or its successor VeraCrypt, are designed to work as encrypted file systems that hide within the existing file system. The containers are fully encrypted blobs in the file system, which can be decrypted via the appropriate system to access the hidden and secured content. As the containers are themselves file systems, they can contain any type of file within the constraints of the decrypted container's size.

Researcher Ange Albertini published research[9] into how these containers can be injected into PDF file structures. This does not rely on leveraging the header buffer space but instead assumes the PDF header will be located at offset 0. As a decryption tool will also read the file from offset 0, the data from the beginning of the file must be treated as part of the encrypted container and, for a polyglot, also a PDF file. This is achieved as encrypted containers use a SALT, which is a random sequence, and the user’s password to decrypt the header. Decryption works by the password being combined with the SALT, which makes up the first 64 bytes, to create a key to decrypt the header, which starts with the keyword “TRUE” at byte 0x40. If the keyword is found after supplying a password, the system knows the key is correct and the container is decrypted.

By decrypting a container for which the password is known, editing the SALT, and then re-encrypting the container, the SALT of the file can be changed to anything. The only requirement of the SALT is that it is expected to be random, so any value is accepted. When a container is re-encrypted, it uses the SALT and the key provided to re-encrypt the contents; if the SALT is altered, the derived keys change. To this end, we can change the SALT to look like any file header we want, in this case, a PDF header.

IMAGE 65 – PDF header which, highlighted, is the SALT of the following encrypted container stored in the SALT specified XObject stream

We can perform an entropy calculation on the first object's stream of a PDF file to determine the presence of encrypted data. This works because true encryption generates data segments which are indistinguishable from noise/random data to avoid analysis. As such, they will always have high entropy. The container also has a minimum size of 292KB, which can be used as a file shard for entropy calculation using either the chi-squared or Monte Carlo pi test. Prior research into this type of analysis and encrypted file container detection has been conducted with promising results[8].

This is an extreme edge-case of how polyglots could be introduced into files, but it is technically possible. The prevalence of such techniques is almost impossible to determine due to the nature of the container and the seeming inconsistency with the requirements (SALT not being random and being embedded in another file). Detection of such polyglots could be implemented relatively simply via entropy checking on the first 292KB of files (if they reach this size limit).
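The entropy check described above can be sketched in a few lines of Python. This is a minimal example under stated assumptions: the shard size treats “292KB” as 292 × 1024 bytes, the 7.9 bits-per-byte threshold is an illustrative value rather than a tuned one, and the function names are our own.

```python
import math
import os
from collections import Counter

SHARD_SIZE = 292 * 1024   # assumption: "292KB" read as 292 * 1024 bytes
ENTROPY_THRESHOLD = 7.9   # assumption: illustrative cut-off, bits per byte


def shannon_entropy(data: bytes) -> float:
    """Shannon entropy in bits per byte (0.0 for empty input, 8.0 maximum)."""
    if not data:
        return 0.0
    total = len(data)
    return -sum(
        (count / total) * math.log2(count / total)
        for count in Counter(data).values()
    )


def chi_squared(data: bytes) -> float:
    """Chi-squared statistic of the byte histogram against a uniform spread."""
    expected = len(data) / 256
    counts = Counter(data)
    return sum((counts.get(value, 0) - expected) ** 2 / expected for value in range(256))


def looks_encrypted(data: bytes) -> bool:
    """Flag a buffer whose first shard is large enough and near-random."""
    shard = data[:SHARD_SIZE]
    return len(shard) == SHARD_SIZE and shannon_entropy(shard) > ENTROPY_THRESHOLD


if __name__ == "__main__":
    print(looks_encrypted(os.urandom(SHARD_SIZE)))      # random data stands in for ciphertext
    print(looks_encrypted(b"stream endstream " * 20000))  # repetitive plain text
```

Compressed streams also score highly on this measure, so a result from `looks_encrypted` is best treated as a prompt for further inspection rather than a verdict.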

Line comment injection in object dictionary

Section 7.2.4 of the PDF 1.7 ISO specification outlines that in-line comments are permitted by using the control character “%” or “0x25”. When used, the content of the file up until the following end-of-line control character (0x0A) is ignored by the PDF rendering software. Because the line is completely ignored, this allows arbitrary content, such as a sub-type document, to be injected and hidden at any point in the file, regardless of component or structure.

IMAGE 66 – PDF files with comments inserted into XObject structures and as singular entries

Inter object padding

XObjects in PDF documents are specified within the xref table as to their offsets within the document and as such, they can appear anywhere within the file’s memory space. Due to this factor, spaces between the XObjects can be made bigger or smaller at will by appropriately adapting the XREF table to reflect the new offsets of the objects. This means that, by moving XObjects to new locations, we can create unused space/cavities between the existing objects which the rendering system does not process.

This unused space does have limitations which authors must adhere to: specific key characters or words must be omitted. These include “xref” and “trailer”, which a rendering system may detect in the injected space and attempt to process as a key element of the document. However, if these key names/characters are avoided, any other content is free to be injected.

IMAGE 67 – PDF with PK ZIP(red) injected into space between header(yellow) and XObject 1(green)
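Cavities of this kind can be located by looking for non-whitespace bytes that sit outside every object. The following Python sketch is a rough approximation under clear assumptions: it finds objects with a regular expression instead of the XREF table, the name `inter_object_cavities` is hypothetical, and a legitimate comment line placed after the header would also be reported.

```python
import re

# Naive object matcher: "<id> <gen> obj" up to the first "endobj" keyword.
OBJECT_SPAN = re.compile(rb"\d+\s+\d+\s+obj\b.*?\bendobj\b", re.DOTALL)


def inter_object_cavities(data: bytes) -> list:
    """Return (offset, length) for non-whitespace gaps preceding each object."""
    header = data.find(b"%PDF-")
    line_end = data.find(b"\n", header) if header >= 0 else -1
    cursor = line_end + 1  # start scanning after the header line (0 if absent)

    cavities = []
    for match in OBJECT_SPAN.finditer(data, cursor):
        gap = data[cursor:match.start()]
        if gap.strip():  # ignore gaps that are only whitespace
            cavities.append((cursor, len(gap)))
        cursor = match.end()
    return cavities


if __name__ == "__main__":
    sample = (
        b"%PDF-1.7\n"
        b"PK\x03\x04junk\n"
        b"1 0 obj\n<< >>\nendobj\n\n"
        b"2 0 obj\n<< >>\nendobj\nxref\n"
    )
    print(inter_object_cavities(sample))
```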

Currently unsupported file formats

PCAP-NG

Custom block injection

PCAP-NG files support custom block[10] insertion, which can contain arbitrary data akin to comment blocks in other formats. The block has a type definition of 0x40000BAD (stored as the bytes AD 0B 00 40 in little-endian), followed by its total block length (4 bytes), an identifier number (4 bytes) and then the data content. These custom blocks can be inserted anywhere within the file, allowing for easy creation of parasite polyglots: the PCAP interpreter reads the file in a linear fashion with no termination block, which allows for both top-down and bottom-up file insertion. In the example below, a custom block is defined immediately after the Section Header Block (the file header) and contains a PDF XObject and stream definition. At the end of the PCAP-NG file another custom block is added, which extends to the end of the file; it closes the initial XObject and stream and contains the rest of the PDF structure, which permits its successful rendering.

IMAGE 68 – Custom block header (yellow) with 0x40 content size (green) containing PDF header and XObject parameters (purple)
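Since the format is read linearly, custom blocks can be enumerated with a short block walker. The sketch below is a minimal illustration, not a complete PCAP-NG parser: the function names are hypothetical, it assumes a single section (one byte order for the whole file), and it also lists block type 0x00000BAD, the copyable custom block variant defined in the PCAP-NG specification but not discussed above.

```python
import struct

BYTE_ORDER_MAGIC = 0x1A2B3C4D
# 0x40000BAD is the type named in the text; 0x00000BAD is the copyable variant.
CUSTOM_BLOCK_TYPES = {0x00000BAD, 0x40000BAD}


def detect_endianness(data: bytes) -> str:
    """Return the struct prefix ('<' or '>') from the Section Header Block."""
    if len(data) < 12 or data[:4] != b"\x0a\x0d\x0d\x0a":
        raise ValueError("buffer does not start with a Section Header Block")
    for prefix in ("<", ">"):
        if struct.unpack_from(prefix + "I", data, 8)[0] == BYTE_ORDER_MAGIC:
            return prefix
    raise ValueError("unrecognised byte-order magic")


def iter_blocks(data: bytes):
    """Yield (offset, block_type, body) for each block, read linearly."""
    endian = detect_endianness(data)
    offset = 0
    while offset + 12 <= len(data):
        block_type, total_length = struct.unpack_from(endian + "II", data, offset)
        if total_length < 12 or total_length % 4 or offset + total_length > len(data):
            raise ValueError(f"malformed block at offset {offset}")
        # Body excludes the 8-byte prefix and the trailing 4-byte length copy.
        yield offset, block_type, data[offset + 8 : offset + total_length - 4]
        offset += total_length


def custom_blocks(data: bytes) -> list:
    """Return (offset, identifier, payload) for every custom block found."""
    endian = detect_endianness(data)
    found = []
    for offset, block_type, body in iter_blocks(data):
        if block_type in CUSTOM_BLOCK_TYPES and len(body) >= 4:
            (identifier,) = struct.unpack_from(endian + "I", body, 0)
            found.append((offset, identifier, body[4:]))
    return found
```

Each returned payload can then be passed to a file type check, for example a search for a PDF header or XObject keywords inside data that a capture tool would otherwise skip.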

ILDA

Palette injection

ILDA supports colour palettes, in which each entry is made up of a set of 3 bytes. By translating a non-0-offset file header or script code into bytes, you can inject it into the colour palette, provided its length is a multiple of 3. If the image does not use indexed colours in its other entries, then the colour table has no effect on the resulting output. In the example below, a PDF header and XObject stream are added to the colour palette of the first ILDA entry.

IMAGE 69 – First ILDA entry shows the format code as 0x02 (red), which is a colour palette with the number of records as 0x0014 (yellow) which is 20. Each entry is 3 bytes which allows 60 bytes, which encapsulates the PDF header and XObject (green)

iNes

PRG-ROM injection

The iNes header allows the size of the PRG-ROM segment to be specified in KB blocks. However, you can inject data into this segment, which may only be detected as corrupted if it is loaded into an emulator. As the segment is data content, we can add arbitrary data provided no attempt is made to run it. In the case below, a PDF header and XObject stream start are added in the PRG-ROM segment, with the end of the stream added after the end of the following CHR-ROM segment. This means that the entire game data is stored in the XObject stream, and the rest of the PDF is added to the iNes padding data section, which is within specification.

As the data is added to one of the game binary segments, it is unknown if the file will load into an appropriate emulator correctly. However, from a file determination standpoint, it is a legitimate iNes file structure.

IMAGE 70 – iNes file with PDF added to the start of PRG-ROM segment

WavPack

WavPack metadata injection

The WavPack header permits metadata sub-blocks as part of the header section. These metadata sections allow for arbitrary data insertion to support vendor-specific media handling. The size of the metadata section is stored in 3 bytes, giving a maximum value of 0xFFFFFF (16,777,215). However, this value is the number of words the metadata occupies, and each word is 2 bytes, meaning the actual limit is 33,554,430 bytes (approximately 33.5 MB). This is more than sufficient to host most scripts or files that support a non-0-offset header, such as PDF.

IMAGE 71 – WavPack header with metadata sub-block with size 0x1C (green) of words (56 bytes - purple) containing PDF header and XObject stream specifier

TAR

Tar header injection

Tar headers allow for arbitrary names of up to 100 bytes as part of their specification, followed by required structural information such as file mode, size, checksums, content type and others. The header makes up the first 512 bytes of a file, and if a header is below this threshold, it is padded to reach this size. Regarding polyglots, this “file name” field of 100 bytes effectively allows for any local variant ASCII to be injected at the start of the file contents.

As TAR is an offset 0 header location file, this would typically eliminate other top-heavy documents. However, due to the “file name” allowance, this is not the case. If the TAR section contents are appropriately contained within sub-type ancillary blocks (such as comments), top-heavy files and TAR files can be converted into a polyglot.

The example below is a TAR file, which adds a PDF header at offset 0 as part of the “file name” section of the TAR header. The “file name” field also allows for the creator to add an XObject specifier, length parameter and stream start keyword, which effectively encapsulates the rest of the TAR content as part of an XObject stream. This XObject is not acted upon/referenced by the rest of the document but is structurally sound according to the PDF specification if an “endstream” keyword is included. As such, the file is both a legitimate base PDF file starting at offset 0 and a legitimate TAR archive file. The same mechanism could be applied to file types such as GIF, which have comment segments by starting a comment block at offset 99 and chaining comments to encapsulate the TAR content blocks.

IMAGE 72 – TAR file with “file name” space (red) populated with PDF header and XObject specifier
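From a detection standpoint, the 100-byte “file name” field can be inspected for foreign magic values once the header is confirmed to be a TAR header. The Python sketch below is illustrative only: `tar_name_field_magic` is a hypothetical helper, and the short `KNOWN_MAGIC` table is an assumed sample rather than a complete signature list.

```python
# Assumption: a small sample of signatures that are meaningful at offset 0.
KNOWN_MAGIC = {b"%PDF-": "PDF", b"GIF8": "GIF", b"PK\x03\x04": "ZIP"}


def tar_name_field_magic(data: bytes):
    """Return the label of a foreign signature found in the TAR name field.

    Returns None for an ordinary name. Raises ValueError when the first
    512 bytes do not carry a valid TAR header checksum.
    """
    header = data[:512]
    if len(header) < 512:
        raise ValueError("buffer is shorter than one TAR header")

    # The checksum is stored as octal text at bytes 148-155 and is computed
    # with that field treated as eight ASCII spaces.
    try:
        stored = int(header[148:156].strip(b"\x00 ") or b"0", 8)
    except ValueError:
        raise ValueError("checksum field is not octal text") from None
    computed = sum(header[:148]) + 8 * 0x20 + sum(header[156:])
    if stored != computed:
        raise ValueError("header checksum mismatch, not a valid TAR header")

    name_field = header[:100]
    for magic, label in KNOWN_MAGIC.items():
        if name_field.startswith(magic):
            return label
    return None
```

Validating the checksum first keeps the check specific to files that a TAR reader would accept, which is the property the polyglot depends on.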

Other type spoofing technologies

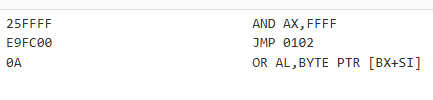

Spoofing learning models by small alterations

Magika[11] is a deep learning model application developed to detect file types based on learned file type layouts. Analysis of the tool shows that it takes a 1KB chunk from the start and end of the document (with capacity for a middle section, which is currently unused) and feeds these chunks to the deep learning model. The model was trained against “25M files across more than 100 content types”. However, the files being trained against are likely in expected/specification adherent structures.